I. HBase Table Structure and Architecture

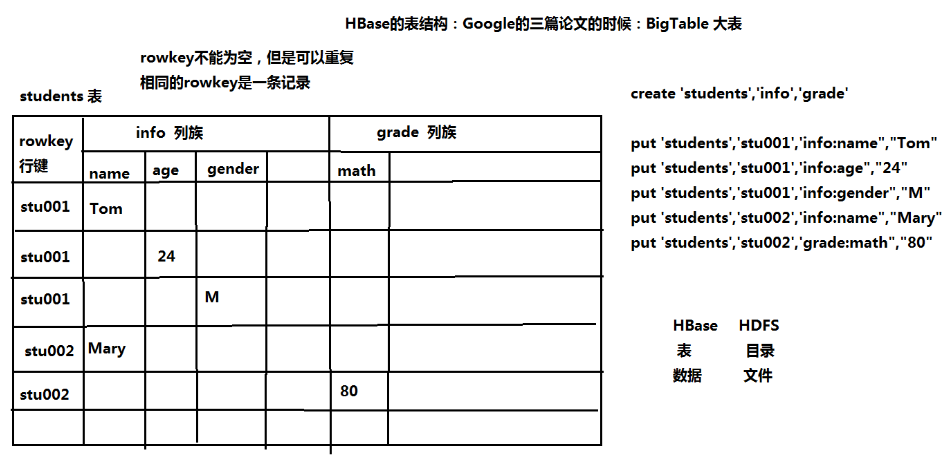

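1. HBase table structure

All data is stored in a single table, trading table space for good performance.

Columns in HBase are grouped into column families; each column family contains a number of columns.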
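As a rough mental model (not HBase's actual storage code), a table can be pictured as a sorted map from row key to column family to column to value. The sketch below assumes that simplification; the class name `TableModel` and its methods are made up for illustration, and real HBase additionally versions each cell by timestamp and stores bytes rather than strings.

```java
import java.util.Map;
import java.util.TreeMap;

// Illustrative model only: row key -> column family -> column -> value.
class TableModel {
    private final TreeMap<String, TreeMap<String, TreeMap<String, String>>> rows = new TreeMap<>();

    // Mirrors the shape of the shell command: put 'table','rowkey','family:column','value'
    void put(String rowKey, String family, String column, String value) {
        rows.computeIfAbsent(rowKey, k -> new TreeMap<>())
            .computeIfAbsent(family, k -> new TreeMap<>())
            .put(column, value);
    }

    // Returns one cell, or null when the row, family, or column is absent.
    String get(String rowKey, String family, String column) {
        Map<String, TreeMap<String, String>> families = rows.get(rowKey);
        if (families == null) return null;
        Map<String, String> columns = families.get(family);
        return columns == null ? null : columns.get(column);
    }

    public static void main(String[] args) {
        TableModel students = new TableModel();
        students.put("stu001", "info", "name", "Tom");
        students.put("stu001", "grade", "math", "85");
        students.put("stu002", "info", "name", "Mary");
        System.out.println(students.get("stu001", "info", "name"));
        System.out.println(students.get("stu002", "grade", "math"));
    }
}
```

Rows do not need to carry the same columns: in this model stu002 simply has no grade entry, so the second lookup yields null.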

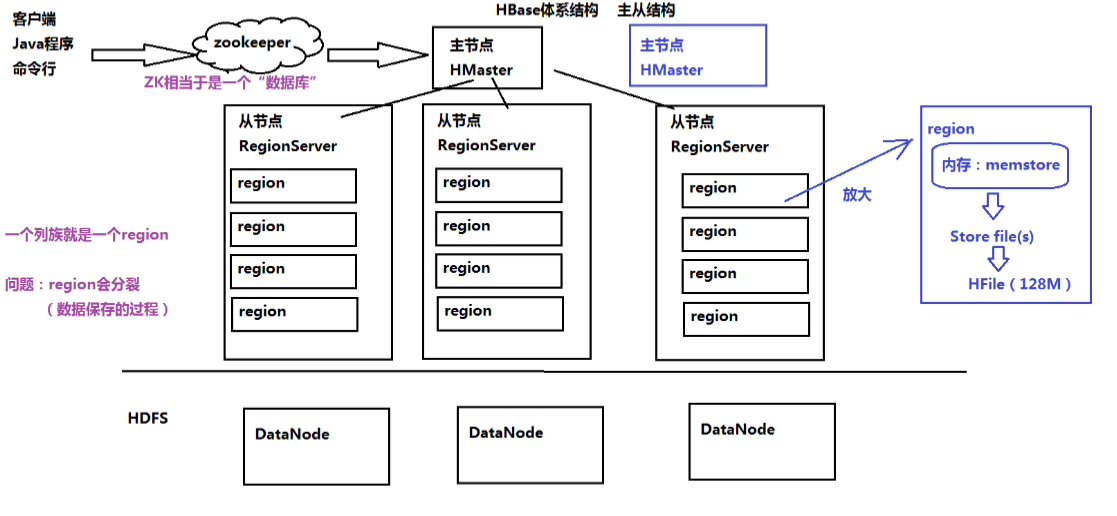

2. HBase architecture

Master/slave structure:

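Master node: HMaster

Slave nodes: RegionServer. Each RegionServer hosts multiple Regions. A Region is a contiguous range of rows of a table; within a Region, each column family is kept in its own Store.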

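HBase stores the following data in ZooKeeper:

(*) configuration information and information about the structure of the HBase cluster

(*) table metadata

(*) what is needed to implement HBase HA (high availability)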

II. Setting Up HBase in Local Mode and Pseudo-Distributed Mode

1. Extract the archive:

tar -zxvf hbase-1.3.1-bin.tar.gz -C ~/training/

2. Set the environment variables: vi ~/.bash_profile

HBASE_HOME=/root/training/hbase-1.3.1

export HBASE_HOME

PATH=$HBASE_HOME/bin:$PATH

export PATH

Apply the changes: source ~/.bash_profile

Local mode: HDFS is not required; data is stored directly on the local file system

hbase-env.sh

export JAVA_HOME=/root/training/jdk1.8.0_144

hbase-site.xml

    <property>
        <name>hbase.rootdir</name>
        <value>file:///root/training/hbase-1.3.1/data</value>
    </property>

Pseudo-distributed mode

hbase-env.sh: add the following line to use the bundled ZooKeeper

export HBASE_MANAGES_ZK=true

hbase-site.xml: remove the local-mode property and add the following configuration

    <property>
        <name>hbase.rootdir</name>
        <value>hdfs://192.168.153.11:9000/hbase</value>
    </property>
    <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
    </property>
    <property>
        <!-- ZooKeeper address -->
        <name>hbase.zookeeper.quorum</name>
        <value>192.168.153.11</value>
    </property>
    <property>
        <!-- data replication factor -->
        <name>dfs.replication</name>
        <value>1</value>
    </property>

regionservers

192.168.153.11

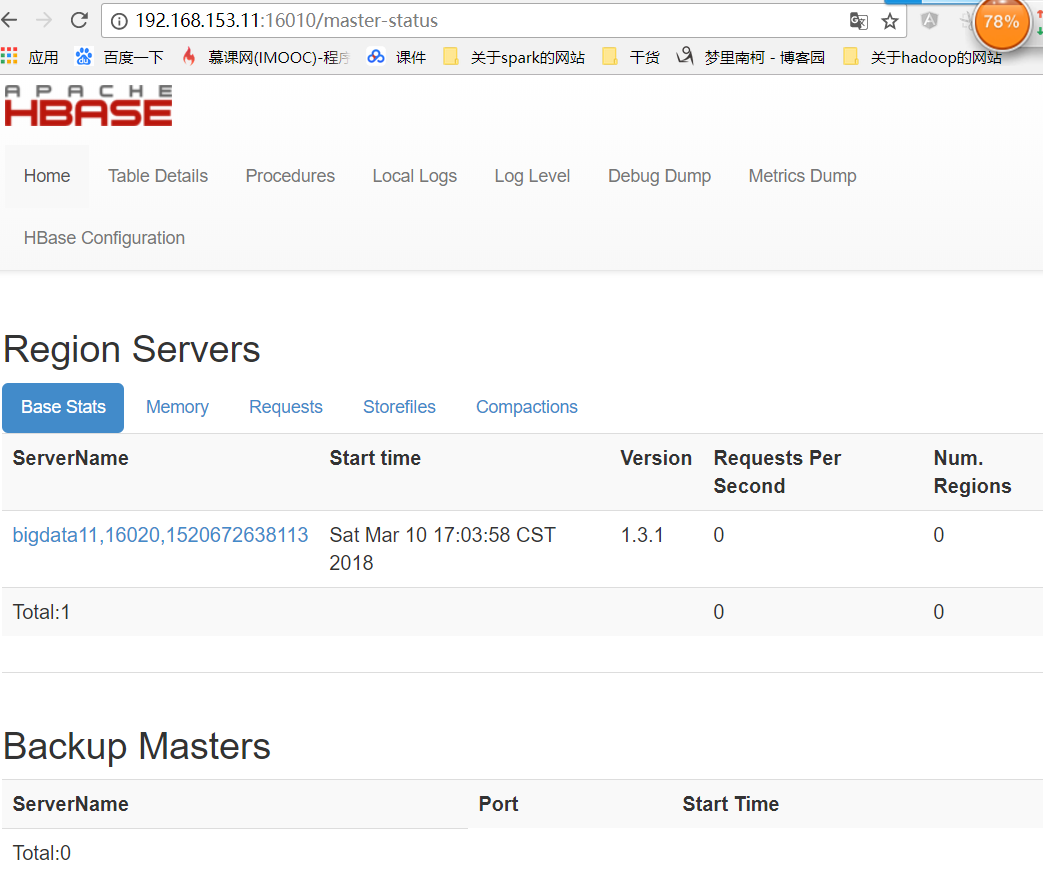

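The cluster can be inspected in the web console.

III. Setting Up HBase in Fully Distributed Mode and HA

In PuTTY, synchronize the clocks on bigdata12, bigdata13, and bigdata14: date -s 2018-03-10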

Master node: hbase-site.xml

    <property>
        <name>hbase.rootdir</name>
        <value>hdfs://192.168.153.12:9000/hbase</value>
    </property>
    <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
    </property>
    <property>
        <name>hbase.zookeeper.quorum</name>
        <value>192.168.153.12</value>
    </property>
    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>
    <property>
        <!-- works around unsynchronized clocks: the maximum tolerated clock skew -->
        <name>hbase.master.maxclockskew</name>
        <value>180000</value>
    </property>

regionservers

192.168.153.13

192.168.153.14

Copy the installation to bigdata13 and bigdata14:

scp -r hbase-1.3.1/ root@bigdata13:/root/training

scp -r hbase-1.3.1/ root@bigdata14:/root/training
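The `hbase.master.maxclockskew` value in the configuration above is a tolerance in milliseconds: roughly speaking, the master will not accept a region server whose clock differs from its own by more than that amount. The following is a minimal sketch of that kind of check, not HBase's actual code; the class and method names are hypothetical.

```java
// Illustrative only: shows what a 180000 ms tolerance means in practice.
class ClockSkewCheck {
    // Value taken from hbase-site.xml above: 180000 ms = 3 minutes.
    static final long MAX_CLOCK_SKEW_MS = 180_000L;

    // True when the two clocks differ by no more than the tolerance,
    // regardless of which one is ahead.
    static boolean withinTolerance(long masterTimeMs, long regionServerTimeMs) {
        return Math.abs(masterTimeMs - regionServerTimeMs) <= MAX_CLOCK_SKEW_MS;
    }

    public static void main(String[] args) {
        long masterNow = System.currentTimeMillis();
        // A region server two minutes behind is tolerated; five minutes is not.
        System.out.println(withinTolerance(masterNow, masterNow - 120_000L));
        System.out.println(withinTolerance(masterNow, masterNow - 300_000L));
    }
}
```

Raising the tolerance only hides the drift; setting the clocks with date -s, as above, or running a time synchronization service addresses the cause.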

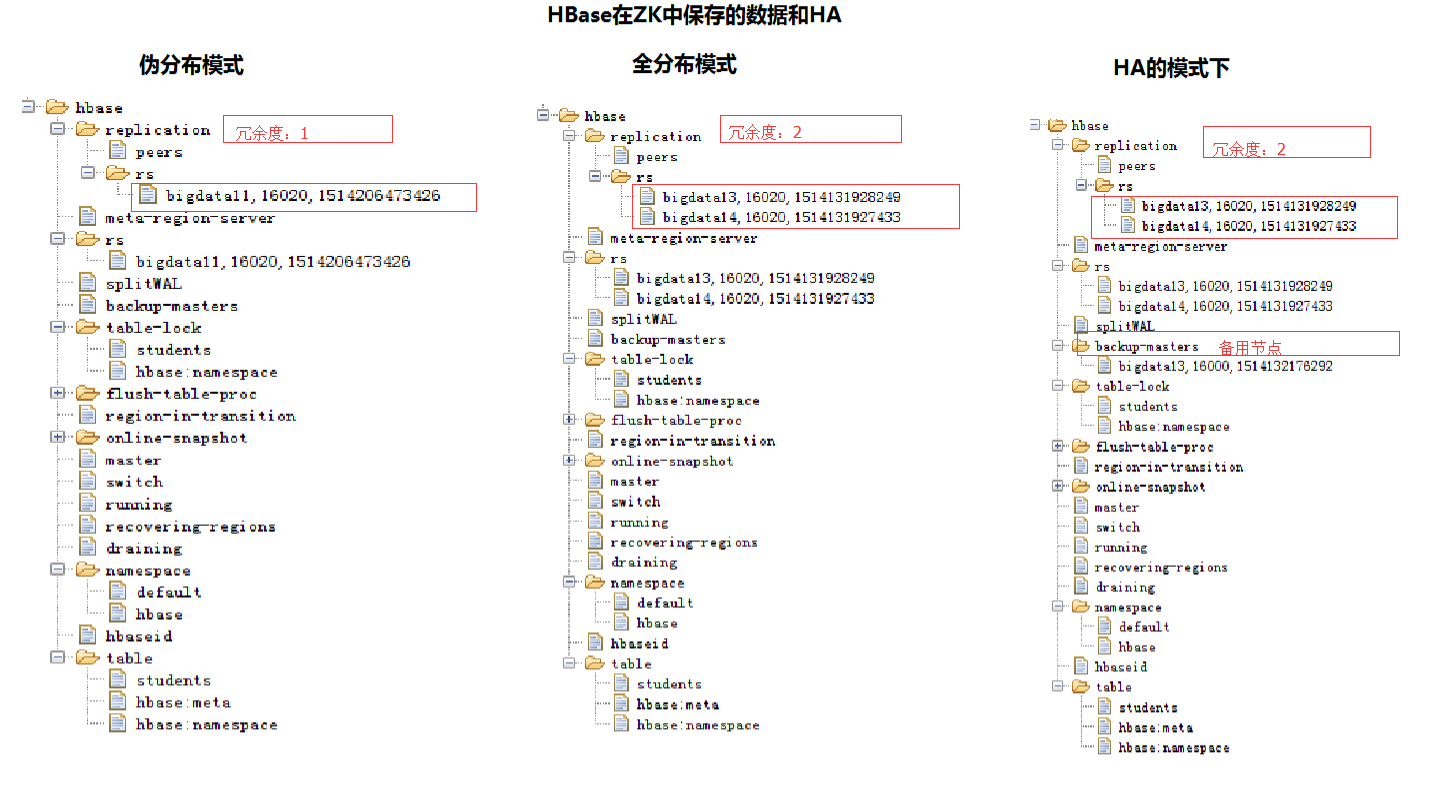

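IV. Data HBase Stores in ZooKeeper and How HA Is Implemented

HA implementation:

No extra configuration is needed; just start a standalone HMaster on one of the slave nodes

On bigdata13: hbase-daemon.sh start master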
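The effect of starting a second HMaster can be pictured with a toy model: the first master to start is active, later ones wait as backups, and a backup takes over when the active master stops. This is only a sketch under simplifying assumptions. Real HBase coordinates the takeover through ZooKeeper, and the first-in, first-out order used here is an assumption made to keep the example deterministic. The class `MasterFailoverModel` is hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Toy model of one active master plus waiting backup masters.
class MasterFailoverModel {
    private String active;                                    // current active master, or null
    private final Deque<String> backups = new ArrayDeque<>(); // masters waiting to take over

    // The first master to start becomes active; later ones wait as backups.
    void startMaster(String host) {
        if (active == null) {
            active = host;
        } else {
            backups.addLast(host);
        }
    }

    // If the active master stops, the longest-waiting backup takes over.
    void stopMaster(String host) {
        if (host.equals(active)) {
            active = backups.pollFirst();
        } else {
            backups.remove(host);
        }
    }

    String activeMaster() {
        return active;
    }

    public static void main(String[] args) {
        MasterFailoverModel cluster = new MasterFailoverModel();
        cluster.startMaster("bigdata12"); // started by start-hbase.sh on the master node
        cluster.startMaster("bigdata13"); // hbase-daemon.sh start master
        cluster.stopMaster("bigdata12");
        System.out.println(cluster.activeMaster());
    }
}
```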

V. Working with HBase

1. Web console: port 16010

2. Command line

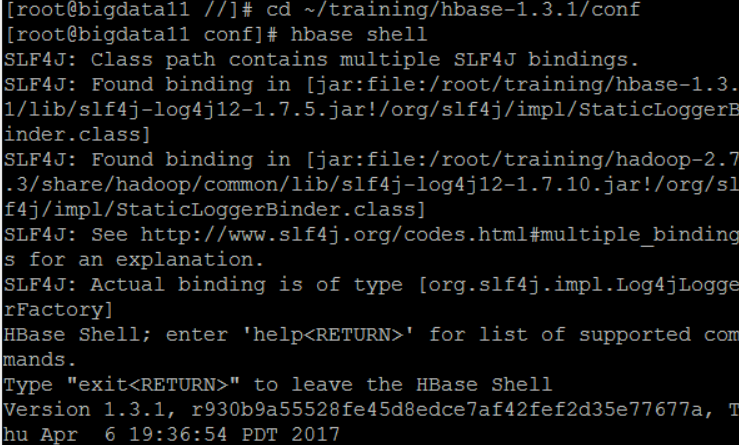

Start HBase: start-hbase.sh

Open the HBase shell: hbase shell

Create a table:

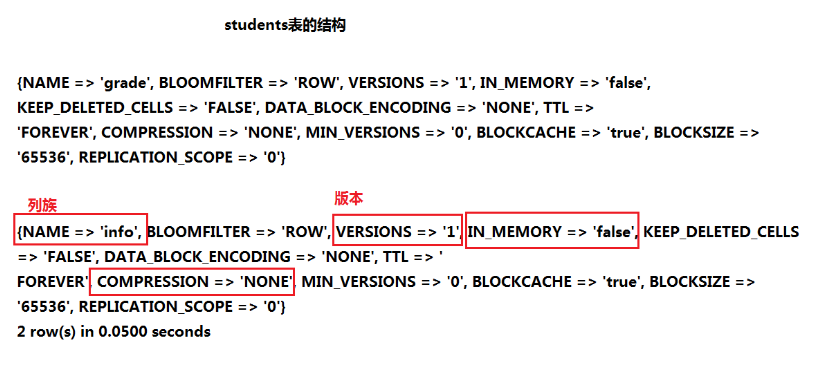

    hbase(main):001:0> create 'students','info','grade'    # create the table
    0 row(s) in 1.7020 seconds

    => Hbase::Table - students
    hbase(main):002:0> desc 'students'    # show the table structure
    Table students is ENABLED
    students
    COLUMN FAMILIES DESCRIPTION
    {NAME => 'grade', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}
    {NAME => 'info', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}
    2 row(s) in 0.2540 seconds

    hbase(main):003:0> describe 'students'
    Table students is ENABLED
    students
    COLUMN FAMILIES DESCRIPTION
    {NAME => 'grade', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}
    {NAME => 'info', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}
    2 row(s) in 0.0240 seconds

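Difference between desc and describe:

In the HBase shell the two commands print the same output, as the session above shows; desc is simply a shorthand for describe. The naming mirrors Oracle SQL*Plus, where DESC is the abbreviated form of the DESCRIBE command.

Examining the structure of the students table

List the existing tables: list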

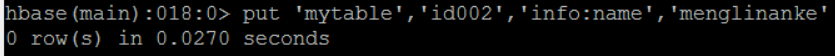

Insert data: put

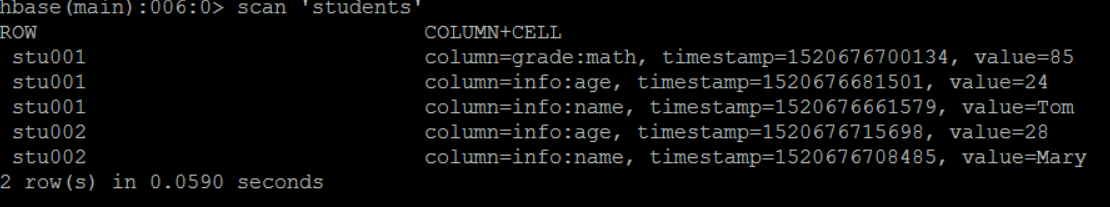

    put 'students','stu001','info:name','Tom'
    put 'students','stu001','info:age','24'
    put 'students','stu001','grade:math','85'
    put 'students','stu002','info:name','Mary'
    put 'students','stu002','info:age','28'

Query data:

scan is roughly equivalent to: select * from students

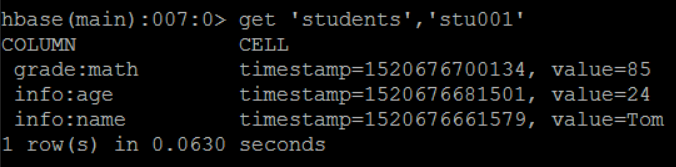

get is roughly equivalent to: select * from students where rowkey=??
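The two SQL analogies can be made concrete with a toy model in which rows are kept sorted by row key: get is a point lookup on one key, while scan walks the keys in order, optionally bounded by a start row (inclusive) and a stop row (exclusive). This is a sketch of the idea only; the class and method names are hypothetical and no HBase API is involved.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Toy model: row key -> value of info:name, sorted by row key.
class ScanVsGetModel {
    static TreeMap<String, String> sampleRows() {
        TreeMap<String, String> rows = new TreeMap<>();
        rows.put("stu002", "Mary"); // insertion order does not matter,
        rows.put("stu001", "Tom");  // the map keeps keys sorted
        return rows;
    }

    // get: point lookup by row key.
    static String get(TreeMap<String, String> rows, String rowKey) {
        return rows.get(rowKey);
    }

    // scan: rows in key order from startRow (inclusive) to stopRow (exclusive).
    static List<String> scan(TreeMap<String, String> rows, String startRow, String stopRow) {
        return new ArrayList<>(rows.subMap(startRow, true, stopRow, false).values());
    }

    public static void main(String[] args) {
        TreeMap<String, String> rows = sampleRows();
        System.out.println(get(rows, "stu002"));
        System.out.println(scan(rows, "stu001", "stu003"));
    }
}
```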

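Clearing the data in a table

The comparison below describes delete and truncate as they behave in a relational database such as Oracle:

1. delete is DML (can be rolled back); truncate is DDL (cannot be rolled back)

Note: DDL is the data definition language, e.g. create/alter/drop/truncate/comment/grant

DML is the data manipulation language, e.g. select/delete/insert/update/explain plan

DCL is the data control language, e.g. commit/rollback (often classified separately as transaction control, TCL)

2. delete leaves fragmentation behind; truncate does not

3. delete does not release space; truncate does

4. delete can be undone with flashback; truncate cannot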

truncate 'students' -----> in essence: drop the table, then re-create it

Log:

Truncating 'students' table (it may take a while):

- Disabling table...

- Truncating table...

0 row(s) in 4.0840 seconds

3. Java API

Edit the hosts file: C:\Windows\System32\drivers\etc\hosts

Add a line: 192.168.153.11 bigdata11

TestHBase.java

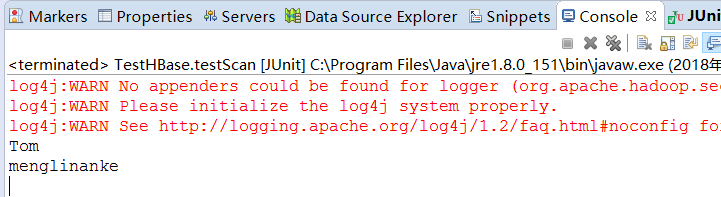

    package demo;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Get;
    import org.apache.hadoop.hbase.client.HBaseAdmin;
    import org.apache.hadoop.hbase.client.HTable;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.junit.Test;

    /**
     * 1. Requires one extra jar: hamcrest-core-1.3.jar
     * 2. Edit the Windows hosts file
     *    C:\Windows\System32\drivers\etc\hosts
     *    192.168.153.11 bigdata11
     * @author YOGA
     */
    public class TestHBase {

        @Test
        public void testCreateTable() throws Exception {
            // ZooKeeper address, the same value as in hbase-site.xml
            Configuration conf = new Configuration();
            conf.set("hbase.zookeeper.quorum", "192.168.153.11");

            // Get the HBase admin client
            HBaseAdmin client = new HBaseAdmin(conf);

            // Create the table descriptor
            HTableDescriptor htd = new HTableDescriptor(TableName.valueOf("mytable"));

            // Add the column families
            htd.addFamily(new HColumnDescriptor("info"));
            htd.addFamily(new HColumnDescriptor("grade"));

            // Create the table
            client.createTable(htd);
            client.close();
        }

        @Test
        public void testPut() throws Exception {
            // ZooKeeper address
            Configuration conf = new Configuration();
            conf.set("hbase.zookeeper.quorum", "192.168.153.11");

            // Get the HTable client
            HTable client = new HTable(conf, "mytable");

            // Build a Put object; the argument is the row key
            Put put = new Put(Bytes.toBytes("id001"));
            // put.addColumn(family,     // column family
            //               qualifier,  // column
            //               value)      // value stored in that column
            put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"), Bytes.toBytes("Tom"));

            client.put(put);
            // client.put(List<Put>) inserts several rows at once
            client.close();
        }

        @Test
        public void testGet() throws Exception {
            // ZooKeeper address
            Configuration conf = new Configuration();
            conf.set("hbase.zookeeper.quorum", "192.168.153.11");

            // Get the HTable client
            HTable client = new HTable(conf, "mytable");

            // Build a Get object for one row key
            Get get = new Get(Bytes.toBytes("id001"));

            // Run the query
            Result result = client.get(get);

            // Extract the value of info:name
            String name = Bytes.toString(result.getValue(Bytes.toBytes("info"), Bytes.toBytes("name")));
            System.out.println(name);
            client.close();
        }

        @Test
        public void testScan() throws Exception {
            // ZooKeeper address
            Configuration conf = new Configuration();
            conf.set("hbase.zookeeper.quorum", "192.168.153.11");

            // Get the HTable client
            HTable client = new HTable(conf, "mytable");

            // Define a scanner
            Scan scan = new Scan();
            // scan.setFilter(filter); would attach a filter

            // Query the data through the scanner
            ResultScanner rScanner = client.getScanner(scan);
            for (Result result : rScanner) {
                String name = Bytes.toString(result.getValue(Bytes.toBytes("info"), Bytes.toBytes("name")));
                System.out.println(name);
            }

            // Release the scanner and the table client
            rScanner.close();
            client.close();
        }
    }
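HBase stores row keys, column names, and values as raw byte arrays, which is why the tests above wrap every string in `Bytes.toBytes(...)` and read it back with `Bytes.toString(...)`. The sketch below uses only the JDK as a stand-in for those helpers. It assumes UTF-8 for strings and a 4-byte big-endian layout for ints, which is what `Bytes` is understood to use; the class `CellEncodingSketch` is hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// JDK-only stand-in for the string and int conversions used with HBase cells.
class CellEncodingSketch {
    // Assumed equivalent of Bytes.toBytes(String): UTF-8 bytes.
    static byte[] encodeString(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    // Assumed equivalent of Bytes.toString(byte[]).
    static String decodeString(byte[] b) {
        return new String(b, StandardCharsets.UTF_8);
    }

    // Assumed equivalent of Bytes.toBytes(int): 4 bytes, big-endian.
    static byte[] encodeInt(int v) {
        return ByteBuffer.allocate(4).putInt(v).array();
    }

    static int decodeInt(byte[] b) {
        return ByteBuffer.wrap(b).getInt();
    }

    public static void main(String[] args) {
        // The shell command put 'students','stu001','info:age','24' stores the text "24".
        System.out.println(encodeString("24").length); // 2 bytes
        System.out.println(encodeInt(24).length);      // 4 bytes
        System.out.println(decodeString(encodeString("Tom")));
    }
}
```

The reader has to decode a cell the same way the writer encoded it: a value written as text must be read back as text, not as an int.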

Running the tests above gives the following result (for the last test)
