Java theory and practice: Fixing the Java Memory Model, Part 2

Original article: http://www.ibm.com/developerworks/library/j-jtp03304/ (written in March 2004; still a classic)

JSR 133, which has been active for nearly three years, has recently issued its public recommendation on what to do about the Java Memory Model (JMM). In Part 1 of this series, columnist Brian Goetz focused on some of the serious flaws that were found in the original JMM, which resulted in some surprisingly difficult semantics for concepts that were supposed to be simple. This month, he reveals how the semantics of volatile and final will change under the new JMM, changes that will bring their semantics in line with most developers’ intuition. Some of these changes are already present in JDK 1.4; others will have to wait until JDK 1.5.

Writing concurrent code is hard to begin with; the language should not make it any harder. While the Java platform included support for threading from the outset, including a cross-platform memory model that was intended to provide “Write Once, Run Anywhere” guarantees for properly synchronized programs, the original memory model had some holes. And while many Java platforms provided stronger guarantees than were required by the JMM, the holes in the JMM undermined the ability to easily write concurrent Java programs that could run on any platform. So in May of 2001, JSR 133 was formed, charged with fixing the Java Memory Model. Last month, I talked about some of those holes; this month, I’ll talk about how they’ve been plugged.

Visibility, revisited

One of the key concepts needed to understand the JMM is that of visibility – how do you know that if thread A executes someVariable = 3, other threads will see the value 3 written there by thread A? A number of reasons exist for why another thread might not immediately see the value 3 for someVariable: it could be because the compiler has reordered instructions in order to execute more efficiently, or that someVariable was cached in a register, or that its value was written to the cache on the writing processor but not yet flushed to main memory, or that there is an old (or stale) value in the reading processor’s cache. It is the memory model that determines when a thread can reliably “see” writes to variables made by other threads. In particular, the memory model defines semantics for volatile, synchronized, and final that make guarantees of visibility of memory operations across threads.
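To make the visibility problem concrete, here is a minimal sketch (the class and method names are hypothetical, not from the article): a worker thread spins until another thread sets a stop flag. With a plain boolean field, nothing in the JMM obliges the worker ever to observe the write; declaring the field volatile supplies that guarantee.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical worker that polls a stop flag written by another thread.
class StoppableWorker implements Runnable {
    // volatile makes the write in requestStop() visible to the polling thread.
    // With a plain boolean, the JMM would permit the loop to spin forever on a
    // stale value (for example, if the JIT hoisted the read out of the loop).
    private volatile boolean stopRequested = false;

    @Override
    public void run() {
        while (!stopRequested) {
            Thread.yield();  // placeholder for real work
        }
    }

    void requestStop() {
        stopRequested = true;
    }
}

class StopFlagDemo {
    // Starts a worker, requests a stop, and reports whether it terminated in time.
    static boolean runDemo() {
        StoppableWorker worker = new StoppableWorker();
        Thread t = new Thread(worker);
        t.setDaemon(true);  // a stuck worker must not keep the JVM alive
        t.start();
        try {
            TimeUnit.MILLISECONDS.sleep(50);
            worker.requestStop();
            t.join(5_000);  // wait at most five seconds
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !t.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + runDemo());
    }
}
```

The worker is a daemon thread only so that a failed run cannot keep the JVM alive.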

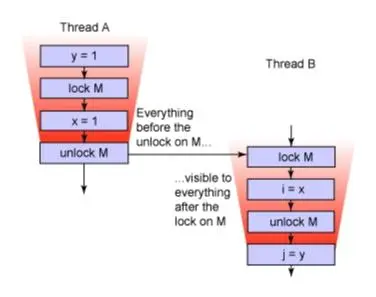

When a thread exits a synchronized block as part of releasing the associated monitor, the JMM requires that the local processor cache be flushed to main memory. (Actually, the memory model does not talk about caches – it talks about an abstraction, local memory, which encompasses caches, registers, and other hardware and compiler optimizations.) Similarly, as part of acquiring the monitor when entering a synchronized block, local caches are invalidated so that subsequent reads will go directly to main memory and not the local cache. This process guarantees that when a variable is written by one thread during a synchronized block protected by a given monitor and read by another thread during a synchronized block protected by the same monitor, the write to the variable will be visible to the reading thread. The JMM does not make this guarantee in the absence of synchronization – which is why synchronization (or its younger sibling, volatile) must be used whenever multiple threads are accessing the same variables.
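A minimal sketch of the rule just described, assuming a hypothetical SynchronizedValue class: because set and get synchronize on the same monitor (the object itself), a value written by one thread inside set is visible to another thread's subsequent get.

```java
// Hypothetical holder whose field is guarded by the object's own monitor.
class SynchronizedValue {
    private int value;  // guarded by "this"

    // Exiting this synchronized method releases the monitor, which publishes
    // the write to any thread that later acquires the same monitor.
    synchronized void set(int newValue) {
        value = newValue;
    }

    // Entering this method acquires the same monitor, so the read sees
    // the most recent write made under that monitor.
    synchronized int get() {
        return value;
    }
}

class SynchronizedVisibilityDemo {
    // A writer thread sets the value; the calling thread polls under the same lock.
    static int runDemo() {
        SynchronizedValue shared = new SynchronizedValue();
        Thread writer = new Thread(() -> shared.set(42));
        writer.start();
        while (shared.get() != 42) {
            Thread.yield();  // spin until the synchronized write is observed
        }
        return shared.get();
    }

    public static void main(String[] args) {
        System.out.println(runDemo());  // prints 42
    }
}
```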

New Guarantees for volatile

The original semantics for volatile guaranteed only that reads and writes of volatile fields would be made directly to main memory, instead of to registers or the local processor cache, and that actions on volatile variables on behalf of a thread are performed in the order that the thread requested. In other words, this means that the old memory model made promises only about the visibility of the variable being read or written, and no promises about the visibility of writes to other variables. While this was easier to implement efficiently, it turned out to be less useful than initially thought.

While reads and writes of volatile variables could not be reordered with reads and writes of other volatile variables, they could still be reordered with reads and writes of nonvolatile variables. In Part 1, you learned how the code in Listing 1 was not sufficient (under the old memory model) to guarantee that the correct value for configOptions and all the variables reachable indirectly through configOptions (such as the elements of the Map) would be visible to thread B, because the initialization of configOptions could have been reordered with the initialization of the volatile initialized variable.

Listing 1. Using a volatile variable as a “guard”

```java
Map configOptions;
char[] configText;
volatile boolean initialized = false;

// In Thread A
configOptions = new HashMap();
configText = readConfigFile(fileName);
processConfigOptions(configText, configOptions);
initialized = true;

// In Thread B
while (!initialized)
  sleep();
// use configOptions
```

Unfortunately, this situation is a common use case for volatile – using a volatile field as a “guard” to indicate that a set of shared variables had been initialized. The JSR 133 Expert Group decided that it would be more sensible for volatile reads and writes not to be reorderable with any other memory operations – to support precisely this and other similar use cases. Under the new memory model, when thread A writes to a volatile variable V, and thread B reads from V, any variable values that were visible to A at the time that V was written are guaranteed now to be visible to B. The result is a more useful semantics of volatile, at the cost of a somewhat higher performance penalty for accessing volatile fields.
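The following is a runnable variant of Listing 1, a sketch that assumes a hypothetical ConfigGuard class and replaces readConfigFile and processConfigOptions with a single hard-coded entry. Under the new memory model, once thread B's volatile read of initialized returns true, the writes thread A made before its volatile write (here, populating configOptions) are guaranteed to be visible.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, runnable variant of Listing 1: a volatile flag guards
// non-volatile configuration state.
class ConfigGuard {
    private Map<String, String> configOptions;     // plain (non-volatile) field
    private volatile boolean initialized = false;  // the "guard"

    // Thread A: build the state first, then publish it by writing the volatile flag.
    void initialize() {
        Map<String, String> options = new HashMap<>();
        options.put("mode", "fast");  // stand-in for readConfigFile/processConfigOptions
        configOptions = options;
        initialized = true;           // volatile write: publishes the writes above
    }

    // Thread B: after the volatile read sees true, thread A's earlier writes
    // to configOptions are guaranteed to be visible (JSR 133 semantics).
    String awaitOption(String key) {
        while (!initialized) {
            Thread.yield();
        }
        return configOptions.get(key);
    }
}

class ConfigGuardDemo {
    static String runDemo() {
        ConfigGuard guard = new ConfigGuard();
        new Thread(guard::initialize).start();
        return guard.awaitOption("mode");
    }

    public static void main(String[] args) {
        System.out.println(runDemo());  // prints fast
    }
}
```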

What happens before what?

Actions, such as reads and writes of variables, are ordered within a thread according to what is called the “program order” – the order in which the semantics of the program say they should occur. (The compiler is actually free to take some liberties with the program order within a thread as long as as-if-serial semantics are preserved.) Actions in different threads are not necessarily ordered with respect to each other at all – if you start two threads and they each execute without synchronizing on any common monitors or touching any common volatile variables, you can predict exactly nothing about the relative order in which actions in one thread will execute (or become visible to a third thread) with respect to actions in the other thread.

Additional ordering guarantees are created when a thread is started, a thread is joined with another thread, a thread acquires or releases a monitor (enters or exits a synchronized block), or a thread accesses a volatile variable. The JMM describes the ordering guarantees that are made when a program uses synchronization or volatile variables to coordinate activities in multiple threads. The new JMM, informally, defines an ordering called happens-before, which is a partial ordering of all actions within a program, as follows:

- Each action in a thread happens-before every action in that thread that comes later in the program order

- An unlock on a monitor happens-before every subsequent lock on that same monitor

- A write to a volatile field happens-before every subsequent read of that same volatile

- A call to Thread.start() on a thread happens-before any actions in the started thread

- All actions in a thread happen-before any other thread successfully returns from a Thread.join() on that thread
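The last two rules can be illustrated without any volatile fields or locks. In this sketch (the class name is hypothetical), the write to input made before start() is visible to the new thread, and the worker's write to result is visible to the main thread once join() returns.

```java
// Hypothetical example of the Thread.start() and Thread.join() rules.
class StartJoinDemo {
    // Deliberately neither volatile nor guarded by a lock.
    private static int input;
    private static int result;

    static int runDemo() {
        input = 20;  // written before start(): visible to the started thread
        Thread worker = new Thread(() -> {
            result = input + 22;  // written before the worker terminates
        });
        worker.start();
        try {
            worker.join();  // after join() returns, the worker's writes are visible
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(runDemo());  // prints 42
    }
}
```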

It is the third of these rules, the one governing reads and writes of volatile variables, that is new and fixes the problem with the example in Listing 1. Because the write of the volatile variable initialized happens after the initialization of configOptions, the use of configOptions happens after the read of initialized, and the read of initialized that returns true happens after the write to initialized, you can conclude that the initialization of configOptions by thread A happens before the use of configOptions by thread B. Therefore, configOptions and the variables reachable through it will be visible to thread B.

Figure 1. Using synchronization to guarantee visibility of memory writes across threads

Data races

A program is said to have a data race, and therefore not be a “properly synchronized” program, when there is a variable that is read by more than one thread, written by at least one thread, and the write and the reads are not ordered by a happens-before relationship.
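As an illustrative sketch (the Counter class is hypothetical): if increment and get below were not synchronized, concurrent calls would constitute a data race, because the writes and reads of count in different threads would not be ordered by happens-before (and, separately, count++ is not atomic, so updates could be lost). Synchronizing both methods on the same monitor makes the program properly synchronized.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical counter shared by several threads.
class Counter {
    private int count;  // guarded by "this"

    // Without "synchronized", concurrent count++ calls would be a data race.
    synchronized void increment() {
        count++;
    }

    synchronized int get() {
        return count;
    }
}

class CounterDemo {
    // Runs `threads` workers that each increment the counter `perThread` times.
    static int runDemo(int threads, int perThread) {
        Counter counter = new Counter();
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            Thread t = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.increment();
                }
            });
            workers.add(t);
            t.start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runDemo(4, 10_000));  // prints 40000
    }
}
```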

Does this fix the double-checked locking problem?

One of the proposed fixes to the double-checked locking problem was to make the field that holds the lazily initialized instance a volatile field. (See Resources for a description of the double-checked locking problem and an explanation of why the proposed algorithmic fixes don’t work.) Under the old memory model, this did not render double-checked locking thread-safe, because writes to a volatile field could still be reordered with writes to other nonvolatile fields (such as the fields of the newly constructed object), and therefore the volatile instance reference could still hold a reference to an incompletely constructed object.

Under the new memory model, this “fix” to double-checked locking renders the idiom thread-safe. But that still doesn’t mean that you should use this idiom! The whole point of double-checked locking was that it was supposed to be a performance optimization, designed to eliminate synchronization on the common code path, largely because synchronization was relatively expensive in very early JDKs. Not only has uncontended synchronization gotten a lot cheaper since then, but the new changes to the semantics of volatile make it relatively more expensive than the old semantics on some platforms. (Effectively, each read or write to a volatile field is like “half” a synchronization – a read of a volatile has the same memory semantics as a monitor acquire, and a write of a volatile has the same semantics as a monitor release.) So if the goal of double-checked locking is supposed to offer improved performance over a more straightforward synchronized approach, this “fixed” version doesn’t help very much either.
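For reference only, since the article recommends against the idiom, this sketch shows the volatile-based double-checked locking that becomes thread-safe under the new memory model. Resource and ResourceFactory are hypothetical names. The local variable r is a common refinement that limits the fast path to a single volatile read.

```java
// Hypothetical resource type, for illustration only.
class Resource {
    final String name;

    Resource(String name) {
        this.name = name;
    }
}

// Double-checked locking with a volatile field: thread-safe under the
// JSR 133 memory model, but not under the original one.
class ResourceFactory {
    private static volatile Resource instance;

    static Resource getInstance() {
        Resource r = instance;          // first check, without locking (one volatile read)
        if (r == null) {
            synchronized (ResourceFactory.class) {
                r = instance;           // second check, under the lock
                if (r == null) {
                    r = new Resource("shared");
                    instance = r;       // volatile write publishes the constructed object
                }
            }
        }
        return r;
    }
}
```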

Instead of double-checked locking, use the Initialize-on-demand Holder Class idiom, which provides lazy initialization, is thread-safe, and is faster and less confusing than double-checked locking:

Listing 2. The Initialize-On-Demand Holder Class idiom

```java
private static class LazySomethingHolder {
    public static Something something = new Something();
}

...

public static Something getInstance() {
    return LazySomethingHolder.something;
}
```

This idiom derives its thread safety from the fact that operations that are part of class initialization, such as static initializers, are guaranteed to be visible to all threads that use that class, and its lazy initialization from the fact that the inner class is not loaded until some thread references one of its fields or methods.
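The sketch below fleshes out Listing 2 with a hypothetical Something class that counts its constructions, so the laziness can be checked: nothing is constructed until getInstance() first touches the holder class. Unlike the listing, it declares the field static final so it cannot be reassigned after class initialization.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical expensive object that counts how often it is constructed.
class Something {
    static final AtomicInteger CONSTRUCTIONS = new AtomicInteger();

    Something() {
        CONSTRUCTIONS.incrementAndGet();
    }
}

class SomethingFactory {
    // The holder class is initialized (and Something constructed) only when
    // getInstance() first reads LazySomethingHolder.something. The JVM
    // performs class initialization under a per-class lock, so exactly one
    // instance is created and its state is visible to every caller.
    private static class LazySomethingHolder {
        static final Something something = new Something();
    }

    static Something getInstance() {
        return LazySomethingHolder.something;
    }
}
```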

Initialization safety

The new JMM also seeks to provide a new guarantee of initialization safety – that as long as an object is properly constructed (meaning that a reference to the object is not published before the constructor has completed), then all threads will see the values for its final fields that were set in its constructor, regardless of whether or not synchronization is used to pass the reference from one thread to another. Further, any variables that can be reached through a final field of a properly constructed object, such as fields of an object referenced by a final field, are also guaranteed to be visible to other threads as well. This means that if a final field contains a reference to, say, a LinkedList, in addition to the correct value of the reference being visible to other threads, also the contents of that LinkedList at construction time would be visible to other threads without synchronization. The result is a significant strengthening of the meaning of final – that final fields can be safely accessed without synchronization, and that compilers can assume that final fields will not change and can therefore optimize away multiple fetches.
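A minimal sketch of an object that qualifies for this guarantee, using a hypothetical ServerConfig class: every field is final, the list is copied and wrapped inside the constructor (so its contents are reachable through a final field as of the end of construction), and this never escapes. A thread that obtains a ServerConfig reference, even through a data race, should therefore see host and the alias list exactly as the constructor left them.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical immutable value object eligible for initialization safety.
final class ServerConfig {
    private final String host;
    private final List<String> aliases;  // contents reachable through a final field

    ServerConfig(String host, List<String> aliases) {
        this.host = host;
        // Copy and wrap before the constructor returns: later changes to the
        // caller's list cannot leak in, and the copy is covered by the freeze.
        this.aliases = Collections.unmodifiableList(new ArrayList<>(aliases));
    }

    String host() {
        return host;
    }

    List<String> aliases() {
        return aliases;
    }
}

class ServerConfigDemo {
    public static void main(String[] args) {
        ServerConfig config = new ServerConfig("example.org", Arrays.asList("www", "api"));
        System.out.println(config.host() + " " + config.aliases());  // prints example.org [www, api]
    }
}
```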

Final means final

The mechanism by which final fields could appear to change their value under the old memory model was outlined in Part 1 – in the absence of synchronization, another thread could first see the default value for a final field and then later see the correct value.

Under the new memory model, there is something similar to a happens-before relationship between the write of a final field in a constructor and the initial load of a shared reference to that object in another thread. When the constructor completes, all of the writes to final fields (and to variables reachable indirectly through those final fields) become “frozen,” and any thread that obtains a reference to that object after the freeze is guaranteed to see the frozen values for all frozen fields. Writes that initialize final fields will not be reordered with operations following the freeze associated with the constructor.

Summary

JSR 133 significantly strengthens the semantics of volatile, so that volatile flags can be used reliably as indicators that the program state has been changed by another thread. As a result of making volatile more “heavyweight,” the performance cost of using volatile has been brought closer to the performance cost of synchronization in some cases, but the performance cost is still quite low on most platforms. JSR 133 also significantly strengthens the semantics of final. If an object’s reference is not allowed to escape during construction, then once a constructor has completed and a thread publishes a reference to an object, that object’s final fields are guaranteed to be visible, correct, and constant to all other threads without synchronization.

These changes greatly strengthen the utility of immutable objects in concurrent programs; immutable objects finally become inherently thread-safe (as they were intended to be all along), even if a data race is used to pass references to the immutable object between threads.

The one caveat with initialization safety is that the object’s reference must not “escape” its constructor – the constructor should not publish, directly or indirectly, a reference to the object being constructed. This includes publishing references to nonstatic inner classes, and generally precludes starting threads from within a constructor. For a more detailed explanation of safe construction, see Resources.
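One common way to avoid letting this escape, sketched with hypothetical Listener, EventSource, and SafeListener types: make the constructor private and publish the object from a static factory method only after construction has finished. Registering this (or an inner-class instance), or starting a thread, inside the constructor is exactly what the caveat above rules out.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical callback interface.
interface Listener {
    void onEvent(String event);
}

// Hypothetical event source; other threads may invoke registered listeners.
class EventSource {
    private final List<Listener> listeners = new CopyOnWriteArrayList<>();

    void register(Listener listener) {
        listeners.add(listener);
    }

    void fire(String event) {
        for (Listener l : listeners) {
            l.onEvent(event);
        }
    }
}

final class SafeListener implements Listener {
    private final String prefix;
    private final List<String> received = new CopyOnWriteArrayList<>();

    // Private: no caller can obtain a reference before construction completes.
    private SafeListener(String prefix) {
        this.prefix = prefix;
        // Do NOT call source.register(this) here, register an inner-class
        // instance, or start a thread: each would publish "this" too early.
    }

    // Static factory: construct fully, then publish.
    static SafeListener newInstance(String prefix, EventSource source) {
        SafeListener listener = new SafeListener(prefix);
        source.register(listener);
        return listener;
    }

    @Override
    public void onEvent(String event) {
        received.add(prefix + event);
    }

    List<String> received() {
        return received;
    }
}
```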

Resources

- Bill Pugh, who originally discovered many of the problems with the Java Memory Model, maintains a Java Memory Model page.

- The issues with the old memory model and a summary of the new memory model semantics can be found in the JSR 133 FAQ.

- Read more about the double-checked locking problem, and why the obvious attempts to fix it don’t work.

- Read more about why you don’t want to let a reference to an object escape during construction.

- JSR 133, charged with revising the JMM, was convened under the Java Community Process. JSR 133 has recently released its public review specification.

- If you want to see how specifications like this are made, browse the JMM mailing list archive.

- Concurrent Programming in Java by Doug Lea (Addison-Wesley, 1999) is a masterful book on the subtle issues surrounding multithreaded Java programming.

- Synchronization and the Java Memory Model summarizes the actual meaning of synchronization.

- Chapter 17 of The Java Language Specification by James Gosling, Bill Joy, Guy Steele, and Gilad Bracha (Addison-Wesley, 1996) covers the gory details of the original Java Memory Model.
