文章目录

- 一、源码分析

- 1. 默认热更新

- 2. 热更新分析

- 3. 方法分析

- 二、词库热更新

- 2.1. 导入依赖

- 2.2. 数据库

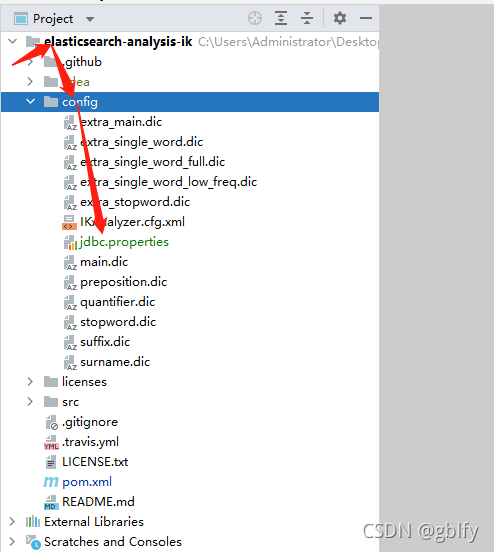

- 2.3. JDBC 配置

- 2.4. 打包配置

- 2.5. 权限策略

- 2.6. 修改 Dictionary

- 2.7. 热更新类

- 2.8. 编译打包

- 2.9. 上传

- 2.10. 修改记录

- 三、服务器操作

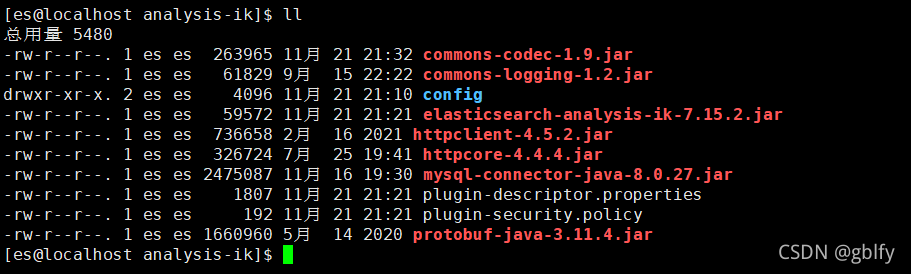

- 3.1. 分词插件目录

- 3.2. 解压es

- 3.3. 移动文件

- 3.4. 目录结构

- 3.5. 配置转移

- 3.6. 重新启动es

- 3.7. 测试分词

- 3.8. 新增分词

- 3.9. es控制台监控

- 3.10. 重新查看分词

- 3.11. 分词数据

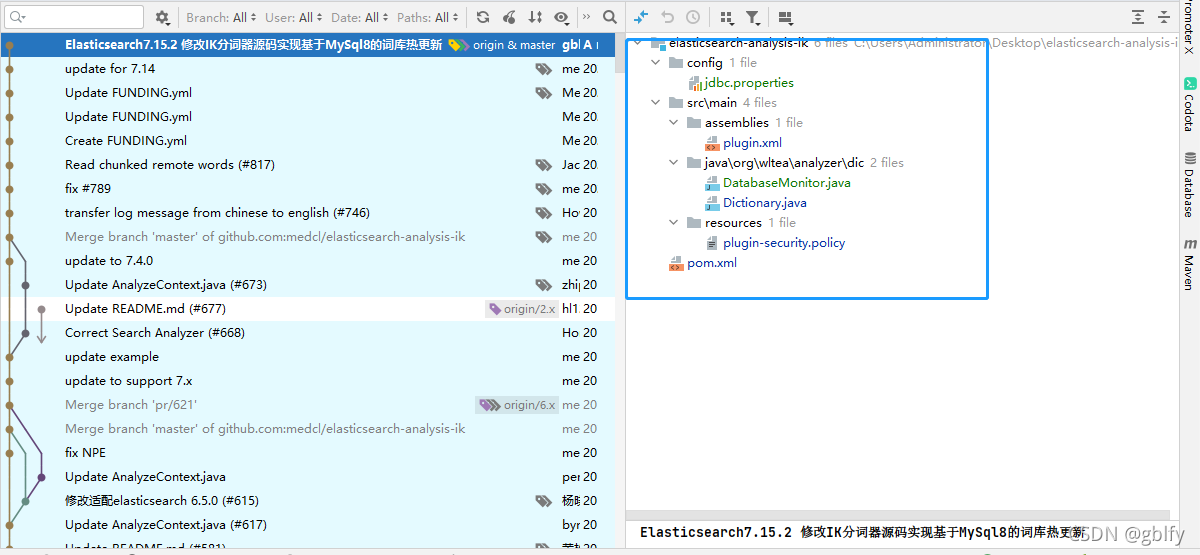

- 3.12. 修改后的源码

I. Source Code Analysis

1. Default Hot Update

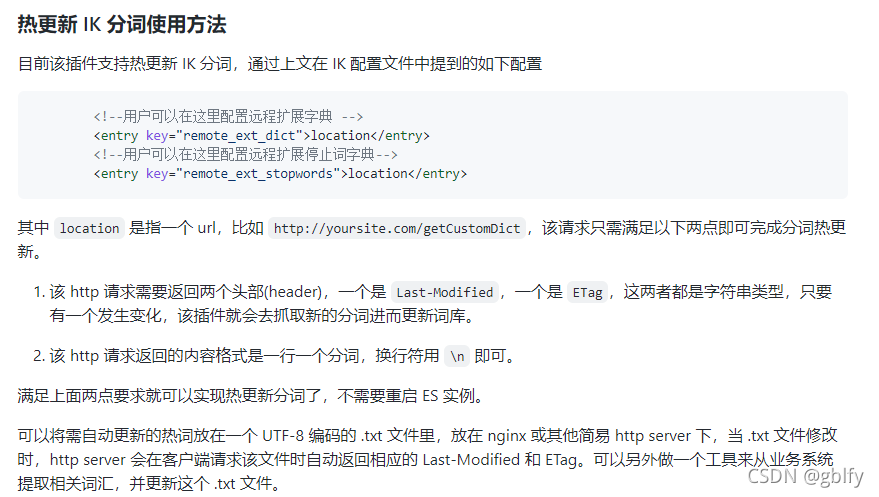

The hot-update mechanism provided by the official plugin:

https://github.com/medcl/elasticsearch-analysis-ik

2. Hot Update Analysis

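The figure above shows the official way to hot-update the dictionary. It is based on a remote file, which is not very practical, but we can imitate it to build a MySQL-based version ourselves. The official implementation lives in the org.wltea.analyzer.dic.Monitor class. Its monitoring flow is listed below, followed by the complete code.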

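- 1. Send a HEAD request to the dictionary server

- 2. Read the Last-Modified and ETag values from the response and check whether they have changed

- 3. If nothing changed, sleep for 1 minute and go back to step 1

- 4. If something changed, call Dictionary#reLoadMainDict() to reload the dictionary

- 5. Sleep for 1 minute and go back to step 1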

```java
package org.wltea.analyzer.dic;

import java.io.IOException;
import java.security.AccessController;
import java.security.PrivilegedAction;

import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpHead;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.logging.log4j.Logger;
import org.elasticsearch.SpecialPermission;
import org.wltea.analyzer.help.ESPluginLoggerFactory;

public class Monitor implements Runnable {

    private static final Logger logger = ESPluginLoggerFactory.getLogger(Monitor.class.getName());

    private static CloseableHttpClient httpclient = HttpClients.createDefault();

    /** Last modification time */
    private String last_modified;

    /** Resource tag (ETag) */
    private String eTags;

    /** Request URL */
    private String location;

    public Monitor(String location) {
        this.location = location;
        this.last_modified = null;
        this.eTags = null;
    }

    public void run() {
        SpecialPermission.check();
        AccessController.doPrivileged((PrivilegedAction<Void>) () -> {
            this.runUnprivileged();
            return null;
        });
    }

    /**
     * Monitoring flow:
     * 1. Send a HEAD request to the dictionary server
     * 2. Read Last-Modified and ETag from the response and check whether they changed
     * 3. If unchanged, sleep 1 min and go back to step 1
     * 4. If changed, reload the dictionary
     * 5. Sleep 1 min and go back to step 1
     */
    public void runUnprivileged() {
        // Timeout settings
        RequestConfig rc = RequestConfig.custom().setConnectionRequestTimeout(10 * 1000)
                .setConnectTimeout(10 * 1000).setSocketTimeout(15 * 1000).build();
        HttpHead head = new HttpHead(location);
        head.setConfig(rc);
        // Conditional request headers
        if (last_modified != null) {
            head.setHeader("If-Modified-Since", last_modified);
        }
        if (eTags != null) {
            head.setHeader("If-None-Match", eTags);
        }
        CloseableHttpResponse response = null;
        try {
            response = httpclient.execute(head);
            // Act only on 200
            if (response.getStatusLine().getStatusCode() == 200) {
                if (((response.getLastHeader("Last-Modified") != null)
                        && !response.getLastHeader("Last-Modified").getValue().equalsIgnoreCase(last_modified))
                        || ((response.getLastHeader("ETag") != null)
                        && !response.getLastHeader("ETag").getValue().equalsIgnoreCase(eTags))) {
                    // The remote dictionary changed: reload it and update last_modified and eTags
                    Dictionary.getSingleton().reLoadMainDict();
                    last_modified = response.getLastHeader("Last-Modified") == null ? null
                            : response.getLastHeader("Last-Modified").getValue();
                    eTags = response.getLastHeader("ETag") == null ? null
                            : response.getLastHeader("ETag").getValue();
                }
            } else if (response.getStatusLine().getStatusCode() == 304) {
                // Not modified: nothing to do
            } else {
                logger.info("remote_ext_dict {} return bad code {}", location,
                        response.getStatusLine().getStatusCode());
            }
        } catch (Exception e) {
            // The throwable goes last so that log4j fills the {} placeholder and logs the stack trace
            logger.error("remote_ext_dict {} error!", location, e);
        } finally {
            try {
                if (response != null) {
                    response.close();
                }
            } catch (IOException e) {
                logger.error(e.getMessage(), e);
            }
        }
    }
}
```
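The reload decision in runUnprivileged() can be pulled out into a pure function, which makes it easier to see exactly when a reload fires. The sketch below uses a hypothetical helper, RemoteDictChangeRule, which is not part of the IK plugin. It mirrors the checks above: only a 200 response whose Last-Modified or ETag differs (case-insensitively) from the cached value triggers a reload. A 304 or any other status leaves the dictionary alone.

```java
/**
 * Hypothetical helper mirroring the decision made in Monitor#runUnprivileged().
 * Not part of the IK plugin; for illustration only.
 */
class RemoteDictChangeRule {

    /** Returns true if the dictionary should be reloaded. */
    static boolean shouldReload(int status,
                                String newLastModified, String newETag,
                                String cachedLastModified, String cachedETag) {
        if (status != 200) {
            // 304 Not Modified or an error code: keep the current dictionary
            return false;
        }
        // equalsIgnoreCase(null) is false, so a first poll with no cached value counts as a change
        boolean lastModifiedChanged = newLastModified != null
                && !newLastModified.equalsIgnoreCase(cachedLastModified);
        boolean eTagChanged = newETag != null
                && !newETag.equalsIgnoreCase(cachedETag);
        return lastModifiedChanged || eTagChanged;
    }

    public static void main(String[] args) {
        String lm = "Mon, 22 Nov 2021 08:00:00 GMT";
        System.out.println(shouldReload(200, lm, "\"abc\"", null, null));   // true: nothing cached yet
        System.out.println(shouldReload(200, lm, "\"abc\"", lm, "\"abc\"")); // false: headers unchanged
        System.out.println(shouldReload(304, null, null, lm, "\"abc\""));   // false: not modified
    }
}
```

In the real Monitor, the cached values are updated only after a successful reload. As a result, a failed HEAD request is simply retried on the next scheduled run.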

3. Method Analysis

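reLoadMainDict() calls loadMainDict(), which in turn calls loadRemoteExtDict() to load the remote custom dictionary. Likewise, loadStopWordDict() also loads the remote stop-word dictionary. reLoadMainDict() creates a new Dictionary instance, reloads the dictionaries into it, and then replaces the original ones. In other words, it performs a full replacement.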

```java
void reLoadMainDict() {
    logger.info("Reloading dictionary...");
    // Load into a new instance to reduce the impact of loading on the dictionary currently in use
    Dictionary tmpDict = new Dictionary(configuration);
    tmpDict.configuration = getSingleton().configuration;
    tmpDict.loadMainDict();
    tmpDict.loadStopWordDict();
    _MainDict = tmpDict._MainDict;
    _StopWords = tmpDict._StopWords;
    logger.info("Dictionary reload finished...");
}

/**
 * Load the main dictionary and the extension dictionaries
 */
private void loadMainDict() {
    // Create a main dictionary instance
    _MainDict = new DictSegment((char) 0);
    // Read the main dictionary file
    Path file = PathUtils.get(getDictRoot(), Dictionary.PATH_DIC_MAIN);
    loadDictFile(_MainDict, file, false, "Main Dict");
    // Load the extension dictionaries
    this.loadExtDict();
    // Load the remote custom dictionaries
    this.loadRemoteExtDict();
}
```
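reLoadMainDict() follows a build-then-swap pattern. The new dictionary is populated in a separate instance while lookups keep using the old one, and only then are the references replaced. Below is a minimal sketch of that idea, with a plain Set<String> standing in for DictSegment. SwappableDictionary is a hypothetical name. Marking the field volatile is an assumption of this sketch, so that other threads see the new reference promptly; the IK source is not claimed to do this.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical stand-in for Dictionary: full reload by building a new set, then swapping it in. */
class SwappableDictionary {
    // volatile so readers on other threads see the swapped reference (an assumption of this sketch)
    private volatile Set<String> words = Collections.emptySet();

    boolean contains(String word) {
        return words.contains(word);
    }

    /** Full replacement: readers keep using the old set until the single reference write below. */
    void reload(Collection<String> source) {
        Set<String> tmp = new HashSet<>();
        for (String w : source) {
            if (w != null && !w.trim().isEmpty()) {
                tmp.add(w.trim().toLowerCase());
            }
        }
        words = Collections.unmodifiableSet(tmp); // one reference assignment replaces everything
    }

    public static void main(String[] args) {
        SwappableDictionary dict = new SwappableDictionary();
        dict.reload(Arrays.asList("凯悦", "酒店"));
        System.out.println(dict.contains("凯悦")); // true
        dict.reload(Arrays.asList("酒店"));
        System.out.println(dict.contains("凯悦")); // false: a full reload drops entries missing from the source
    }
}
```

The trade-off is that every reload rebuilds the whole dictionary. The MySQL-based design later in this post avoids that cost by applying only the rows that changed.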

The logic of loadRemoteExtDict() is also straightforward:

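- 1. Get the URLs of the remote dictionaries (there may be several)

- 2. Request each URL in turn and fetch the remote dictionary

- 3. Add the remote words to the main dictionary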

```java
_MainDict.fillSegment(theWord.trim().toLowerCase().toCharArray());
```

The key method here is fillSegment(), which adds a word to the dictionary. Its counterpart is disableSegment(), which disables (masks) a word in the dictionary.

```java
/**
 * Load the remote extension dictionaries into the main dictionary
 */
private void loadRemoteExtDict() {
    List<String> remoteExtDictFiles = getRemoteExtDictionarys();
    for (String location : remoteExtDictFiles) {
        logger.info("[Dict Loading] " + location);
        List<String> lists = getRemoteWords(location);
        // Skip the dictionary if it cannot be fetched
        if (lists == null) {
            logger.error("[Dict Loading] " + location + " load failed");
            continue;
        }
        for (String theWord : lists) {
            if (theWord != null && !"".equals(theWord.trim())) {
                // Load the extension word into the in-memory main dictionary
                logger.info(theWord);
                _MainDict.fillSegment(theWord.trim().toLowerCase().toCharArray());
            }
        }
    }
}

// The following two methods are in DictSegment

/**
 * Load (fill) a dictionary segment
 * @param charArray
 */
void fillSegment(char[] charArray) {
    this.fillSegment(charArray, 0, charArray.length, 1);
}

/**
 * Disable (mask) a word in the dictionary
 * @param charArray
 */
void disableSegment(char[] charArray) {
    this.fillSegment(charArray, 0, charArray.length, 0);
}
```
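fillSegment() and disableSegment() share one routine and differ only in the enabled flag. A disabled word keeps its path in the trie, but its end node is no longer marked as a word. The toy trie below illustrates that behavior. MiniDictSegment is a hypothetical name, and the class is much simpler than IK's DictSegment, which stores children in arrays or maps of segments. As a further simplification, disabling a word that was never added just returns without creating nodes.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy trie illustrating fillSegment/disableSegment semantics; not IK's DictSegment. */
class MiniDictSegment {
    private final Map<Character, MiniDictSegment> children = new HashMap<>();
    // 1 = an enabled word ends at this node, 0 = prefix only or disabled
    private int nodeState = 0;

    /** Walks the path for the word (creating nodes only when enabling) and sets the end-node state. */
    private void fillSegment(char[] chars, int enabled) {
        MiniDictSegment node = this;
        for (char c : chars) {
            MiniDictSegment next = node.children.get(c);
            if (next == null) {
                if (enabled == 0) {
                    return; // nothing to disable
                }
                next = new MiniDictSegment();
                node.children.put(c, next);
            }
            node = next;
        }
        node.nodeState = enabled;
    }

    void fillSegment(char[] chars) { fillSegment(chars, 1); }

    void disableSegment(char[] chars) { fillSegment(chars, 0); }

    /** True only if the exact word is present and enabled. */
    boolean isWord(String word) {
        MiniDictSegment node = this;
        for (char c : word.toCharArray()) {
            node = node.children.get(c);
            if (node == null) {
                return false;
            }
        }
        return node.nodeState == 1;
    }

    public static void main(String[] args) {
        MiniDictSegment dict = new MiniDictSegment();
        dict.fillSegment("我叫凯悦".toCharArray());
        System.out.println(dict.isWord("我叫凯悦")); // true
        System.out.println(dict.isWord("我叫"));     // false: only a prefix
        dict.disableSegment("我叫凯悦".toCharArray());
        System.out.println(dict.isWord("我叫凯悦")); // false: path kept, word disabled
    }
}
```

Because disabling only flips a flag, re-enabling the same word later is just another fillSegment() call. This is what makes per-row add/disable operations cheap for an incremental update.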

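The Monitor class is only a watcher. It is started from the initial() method of the org.wltea.analyzer.dic.Dictionary class, in the `if (cfg.isEnableRemoteDict())` block of the code below.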

```java
...

// Thread pool
private static ScheduledExecutorService pool = Executors.newScheduledThreadPool(1);

...

/**
 * Dictionary initialization. IK Analyzer initializes its dictionaries through static methods of the
 * Dictionary class, so loading only starts when Dictionary is actually used, which lengthens the first
 * tokenization. This method provides a way to initialize the dictionaries while the application loads.
 *
 * @return Dictionary
 */
public static synchronized void initial(Configuration cfg) {
    if (singleton == null) {
        synchronized (Dictionary.class) {
            if (singleton == null) {
                singleton = new Dictionary(cfg);
                singleton.loadMainDict();
                singleton.loadSurnameDict();
                singleton.loadQuantifierDict();
                singleton.loadSuffixDict();
                singleton.loadPrepDict();
                singleton.loadStopWordDict();

                if (cfg.isEnableRemoteDict()) {
                    // Create the monitor threads
                    for (String location : singleton.getRemoteExtDictionarys()) {
                        // 10 is the initial delay (adjustable), 60 is the interval; both in seconds
                        pool.scheduleAtFixedRate(new Monitor(location), 10, 60, TimeUnit.SECONDS);
                    }
                    for (String location : singleton.getRemoteExtStopWordDictionarys()) {
                        pool.scheduleAtFixedRate(new Monitor(location), 10, 60, TimeUnit.SECONDS);
                    }
                }
            }
        }
    }
}
```

II. Dictionary Hot Update

Implementing MySQL-based dictionary hot update

2.1. Import Dependencies

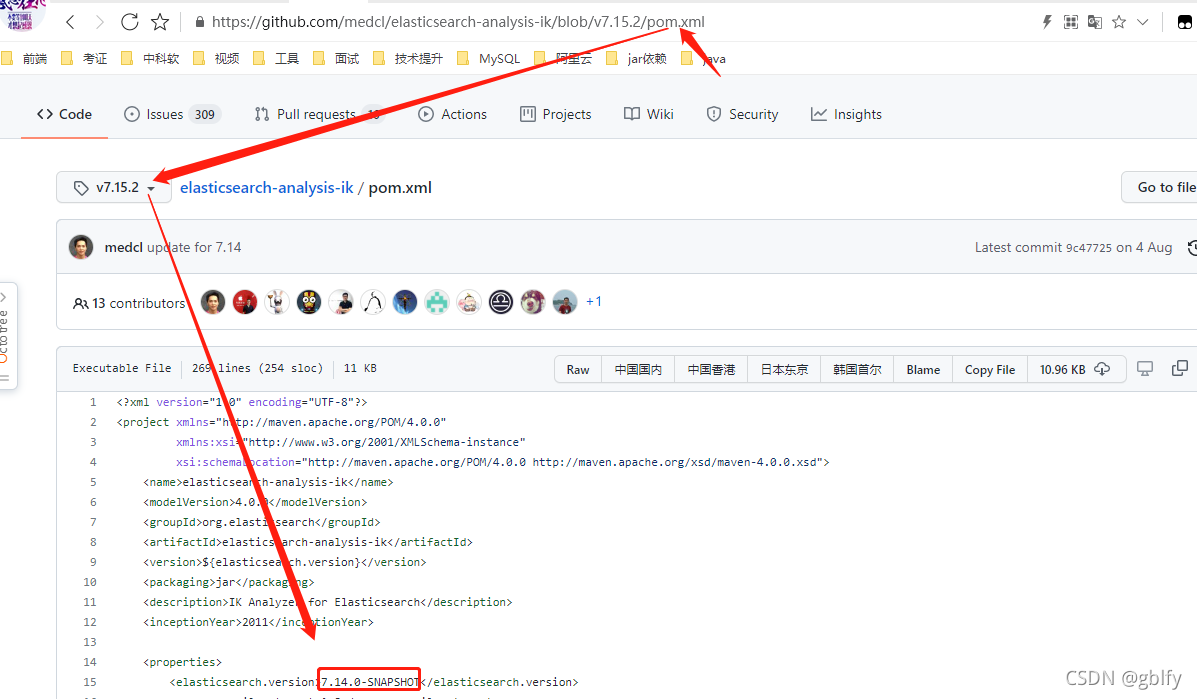

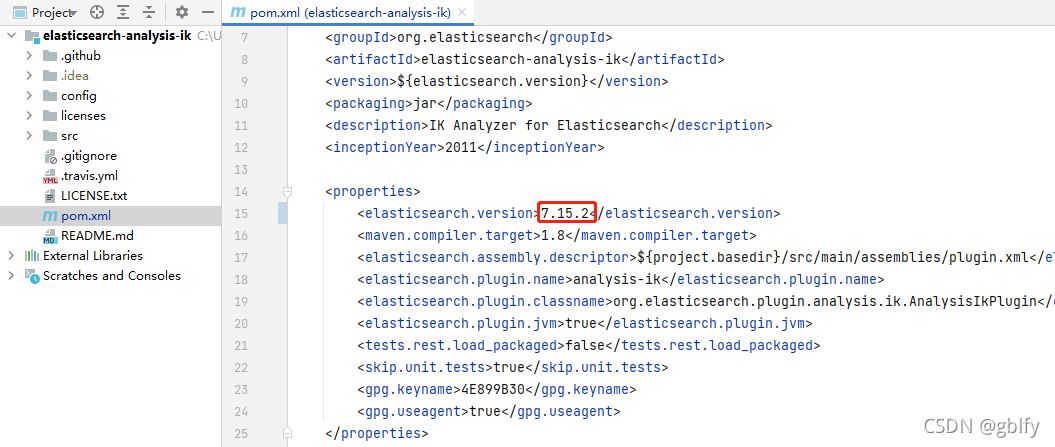

In the pom file at the project root, change the ES version and add the MySQL 8.0 driver dependency:

```xml
<properties>
    <elasticsearch.version>7.15.2</elasticsearch.version>
</properties>

<!-- MySQL driver -->
<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>8.0.27</version>
</dependency>
```

The default is 7.14.0-SNAPSHOT; change it to 7.15.2.

2.2. Database

Create the database dianpingdb and initialize the table structure.

es_extra_main and es_extra_stopword hold the main dictionary and the stop-word dictionary, respectively.

```sql
CREATE TABLE `es_extra_main` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'primary key',
  `word` varchar(255) CHARACTER SET utf8mb4 NOT NULL COMMENT 'word',
  `is_deleted` tinyint(1) NOT NULL DEFAULT '0' COMMENT 'whether deleted',
  `update_time` timestamp(6) NOT NULL DEFAULT CURRENT_TIMESTAMP(6) ON UPDATE CURRENT_TIMESTAMP(6) COMMENT 'update time',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

CREATE TABLE `es_extra_stopword` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'primary key',
  `word` varchar(255) CHARACTER SET utf8mb4 NOT NULL COMMENT 'word',
  `is_deleted` tinyint(1) NOT NULL DEFAULT '0' COMMENT 'whether deleted',
  `update_time` timestamp(6) NOT NULL DEFAULT CURRENT_TIMESTAMP(6) ON UPDATE CURRENT_TIMESTAMP(6) COMMENT 'update time',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;
```

2.3. JDBC Configuration

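Create a jdbc.properties file in the project's config folder. It records the MySQL url, driver, username, and password, the SQL that queries the main dictionary and the stop-word dictionary, and the hot-update interval in seconds. As the two SQL statements show, this design uses incremental updates rather than the official full replacement.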

Contents of jdbc.properties:

```properties
jdbc.url=jdbc:mysql://192.168.92.128:3306/dianpingdb?useAffectedRows=true&characterEncoding=UTF-8&autoReconnect=true&zeroDateTimeBehavior=convertToNull&useUnicode=true&serverTimezone=GMT%2B8&allowMultiQueries=true
jdbc.username=root
jdbc.password=123456
jdbc.driver=com.mysql.cj.jdbc.Driver
jdbc.update.main.dic.sql=SELECT * FROM `es_extra_main` WHERE update_time > ? order by update_time asc
jdbc.update.stopword.sql=SELECT * FROM `es_extra_stopword` WHERE update_time > ? order by update_time asc
jdbc.update.interval=10
```
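Dictionary#loadJdbcProperties() loads this file into a java.util.Properties object, and the interval is later parsed with Long.parseLong(). The standalone sketch below reads the same keys from an in-memory string so that it runs without a file. JdbcPropsDemo and readInterval are hypothetical names. The fallback default is an addition of this sketch; the plugin code calls Long.parseLong() directly, which throws NumberFormatException if the key is missing.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

/** Hypothetical demo: parse the jdbc.properties keys used by DatabaseMonitor. */
class JdbcPropsDemo {

    /** Reads jdbc.update.interval in seconds, falling back to a default when missing or blank. */
    static long readInterval(Properties props, long defaultSeconds) {
        String raw = props.getProperty("jdbc.update.interval");
        if (raw == null || raw.trim().isEmpty()) {
            return defaultSeconds;
        }
        return Long.parseLong(raw.trim()); // Properties keeps trailing spaces, so trim first
    }

    public static void main(String[] args) throws IOException {
        String content = String.join("\n",
                "jdbc.url=jdbc:mysql://192.168.92.128:3306/dianpingdb?useUnicode=true&characterEncoding=UTF-8",
                "jdbc.driver=com.mysql.cj.jdbc.Driver",
                "jdbc.update.main.dic.sql=SELECT * FROM `es_extra_main` WHERE update_time > ? order by update_time asc",
                "jdbc.update.interval=10");
        Properties props = new Properties();
        props.load(new StringReader(content));
        // Only the first '=' separates key and value, so the '=' signs in the URL query string survive
        System.out.println(props.getProperty("jdbc.url"));
        System.out.println(readInterval(props, 60)); // 10
    }
}
```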

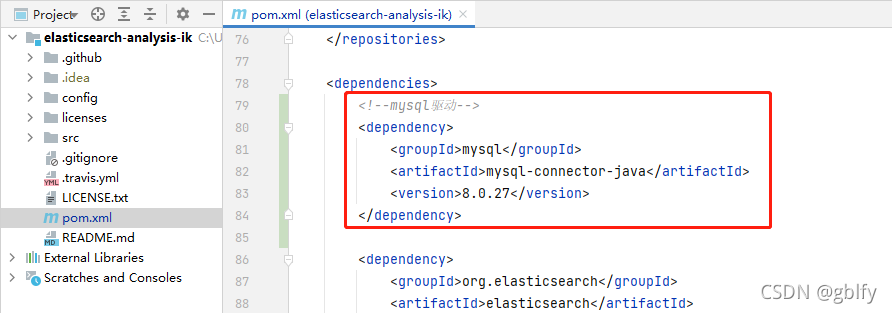

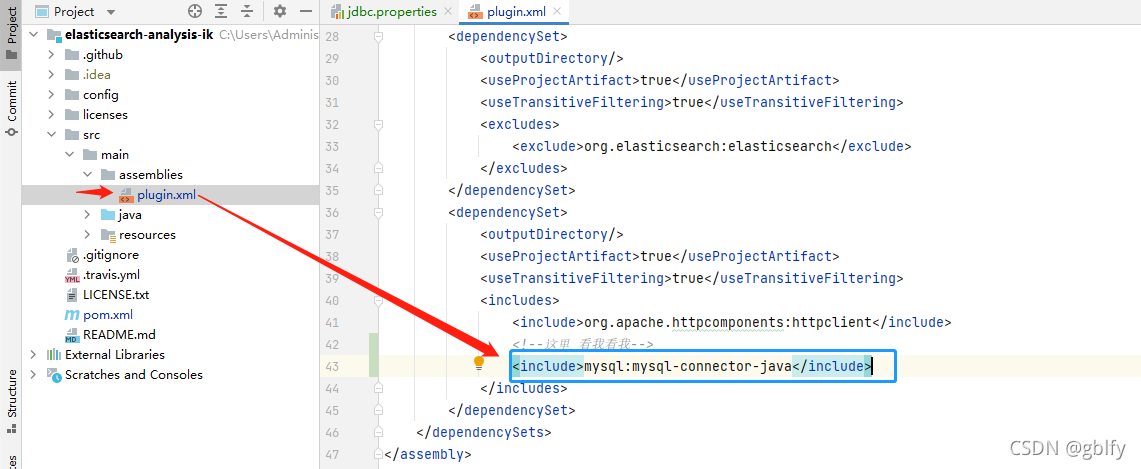

2.4. Packaging Configuration

src/main/assemblies/plugin.xml

Add the MySQL driver dependency here. Otherwise the packaged zip will not contain the MySQL driver jar.

```xml
<!-- add this line -->
<include>mysql:mysql-connector-java</include>
```

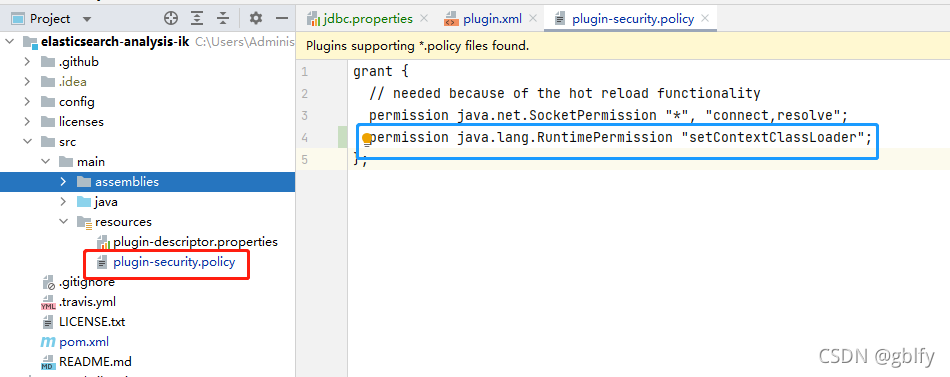

2.5. Security Policy

src/main/resources/plugin-security.policy

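Add `permission java.lang.RuntimePermission "setContextClassLoader";`. Otherwise the exception below is thrown for lack of permission: the MySQL driver's AbandonedConnectionCleanupThread calls Thread.setContextClassLoader() during class initialization, as the stack trace shows.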

```
grant {
    // needed because of the hot reload functionality
    permission java.net.SocketPermission "*", "connect,resolve";
    permission java.lang.RuntimePermission "setContextClassLoader";
};
```

Exception thrown when the configuration above is missing:

```
java.lang.ExceptionInInitializerError: null
    at java.lang.Class.forName0(Native Method) ~[?:1.8.0_261]
    at java.lang.Class.forName(Unknown Source) ~[?:1.8.0_261]
    at com.mysql.cj.jdbc.NonRegisteringDriver.<clinit>(NonRegisteringDriver.java:97) ~[?:?]
    at java.lang.Class.forName0(Native Method) ~[?:1.8.0_261]
    at java.lang.Class.forName(Unknown Source) ~[?:1.8.0_261]
    at org.wltea.analyzer.dic.DatabaseMonitor.lambda$new$0(DatabaseMonitor.java:72) ~[?:?]
    at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_261]
    at org.wltea.analyzer.dic.DatabaseMonitor.<init>(DatabaseMonitor.java:70) ~[?:?]
    at org.wltea.analyzer.dic.Dictionary.initial(Dictionary.java:172) ~[?:?]
    at org.wltea.analyzer.cfg.Configuration.<init>(Configuration.java:40) ~[?:?]
    at org.elasticsearch.index.analysis.IkTokenizerFactory.<init>(IkTokenizerFactory.java:15) ~[?:?]
    at org.elasticsearch.index.analysis.IkTokenizerFactory.getIkSmartTokenizerFactory(IkTokenizerFactory.java:23) ~[?:?]
    at org.elasticsearch.index.analysis.AnalysisRegistry.buildMapping(AnalysisRegistry.java:379) ~[elasticsearch-6.7.2.jar:6.7.2]
    at org.elasticsearch.index.analysis.AnalysisRegistry.buildTokenizerFactories(AnalysisRegistry.java:189) ~[elasticsearch-6.7.2.jar:6.7.2]
    at org.elasticsearch.index.analysis.AnalysisRegistry.build(AnalysisRegistry.java:163) ~[elasticsearch-6.7.2.jar:6.7.2]
    at org.elasticsearch.index.IndexService.<init>(IndexService.java:164) ~[elasticsearch-6.7.2.jar:6.7.2]
    at org.elasticsearch.index.IndexModule.newIndexService(IndexModule.java:402) ~[elasticsearch-6.7.2.jar:6.7.2]
    at org.elasticsearch.indices.IndicesService.createIndexService(IndicesService.java:526) ~[elasticsearch-6.7.2.jar:6.7.2]
    at org.elasticsearch.indices.IndicesService.verifyIndexMetadata(IndicesService.java:599) ~[elasticsearch-6.7.2.jar:6.7.2]
    at org.elasticsearch.gateway.Gateway.performStateRecovery(Gateway.java:129) ~[elasticsearch-6.7.2.jar:6.7.2]
    at org.elasticsearch.gateway.GatewayService$1.doRun(GatewayService.java:227) ~[elasticsearch-6.7.2.jar:6.7.2]
    at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:751) ~[elasticsearch-6.7.2.jar:6.7.2]
    at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) ~[elasticsearch-6.7.2.jar:6.7.2]
    at java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source) ~[?:1.8.0_261]
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source) ~[?:1.8.0_261]
    at java.lang.Thread.run(Unknown Source) [?:1.8.0_261]
Caused by: java.security.AccessControlException: access denied ("java.lang.RuntimePermission" "setContextClassLoader")
    at java.security.AccessControlContext.checkPermission(Unknown Source) ~[?:1.8.0_261]
    at java.security.AccessController.checkPermission(Unknown Source) ~[?:1.8.0_261]
    at java.lang.SecurityManager.checkPermission(Unknown Source) ~[?:1.8.0_261]
    at java.lang.Thread.setContextClassLoader(Unknown Source) ~[?:1.8.0_261]
    at com.mysql.cj.jdbc.AbandonedConnectionCleanupThread.lambda$static$0(AbandonedConnectionCleanupThread.java:72) ~[?:?]
    at java.util.concurrent.ThreadPoolExecutor$Worker.<init>(Unknown Source) ~[?:1.8.0_261]
    at java.util.concurrent.ThreadPoolExecutor.addWorker(Unknown Source) ~[?:1.8.0_261]
    at java.util.concurrent.ThreadPoolExecutor.execute(Unknown Source) ~[?:1.8.0_261]
    at java.util.concurrent.Executors$DelegatedExecutorService.execute(Unknown Source) ~[?:1.8.0_261]
    at com.mysql.cj.jdbc.AbandonedConnectionCleanupThread.<clinit>(AbandonedConnectionCleanupThread.java:75) ~[?:?]
    ... 26 more
```

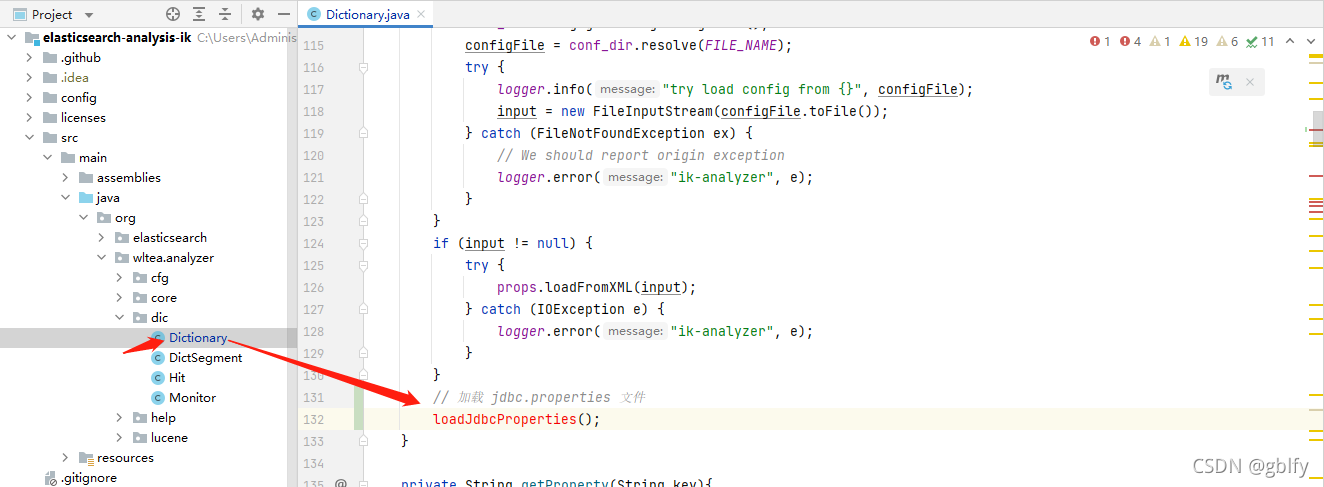

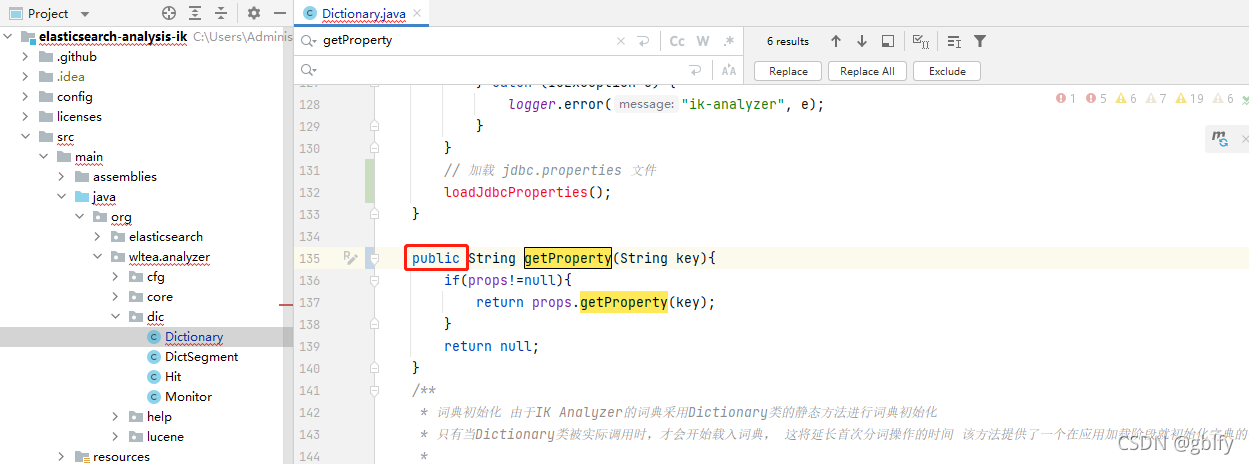

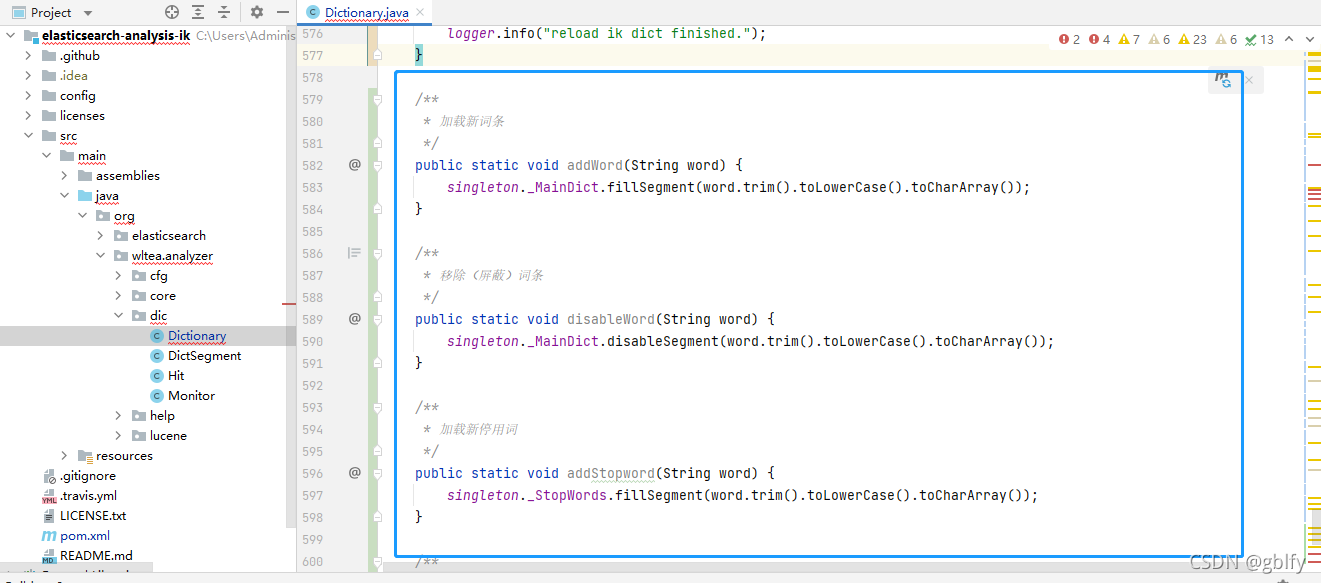

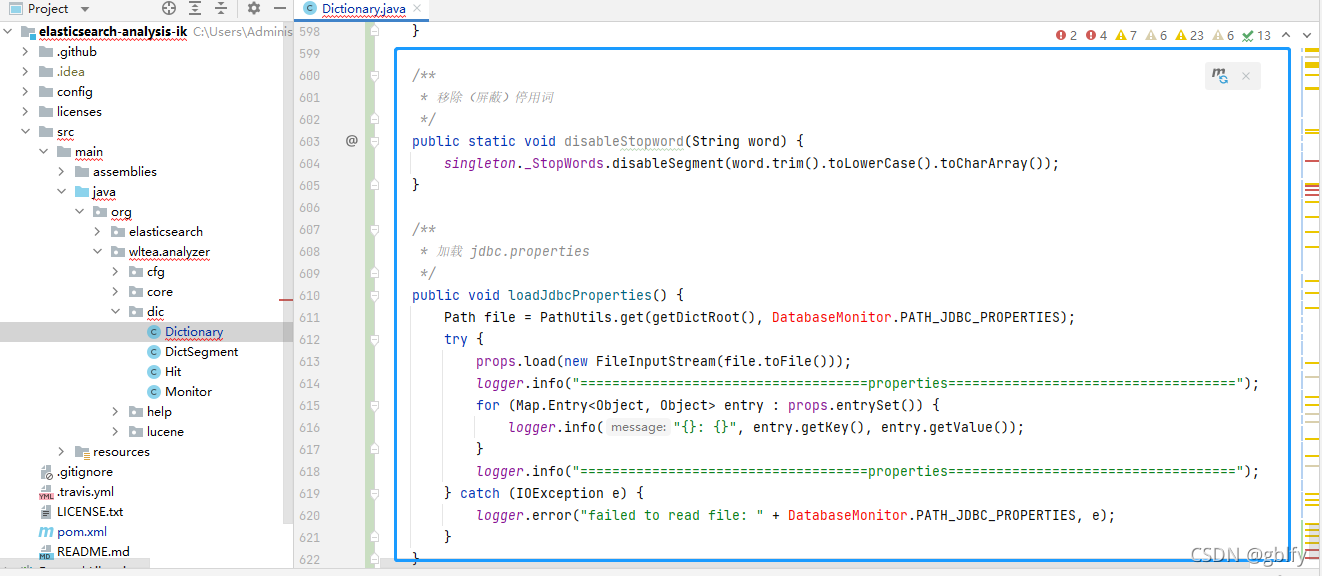

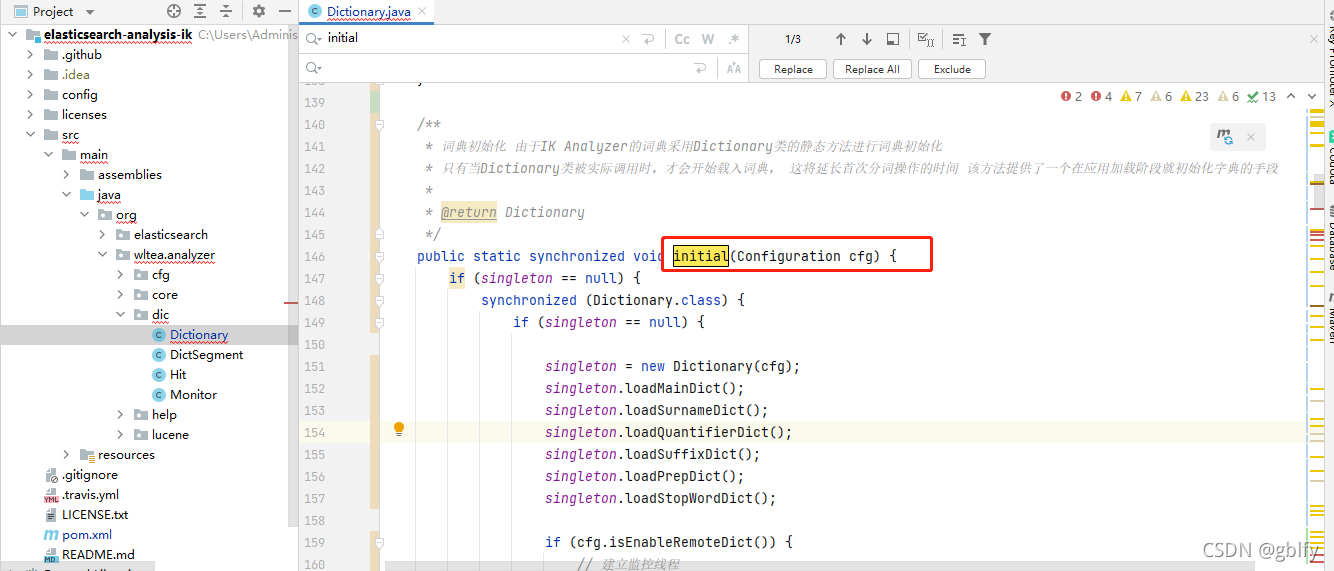

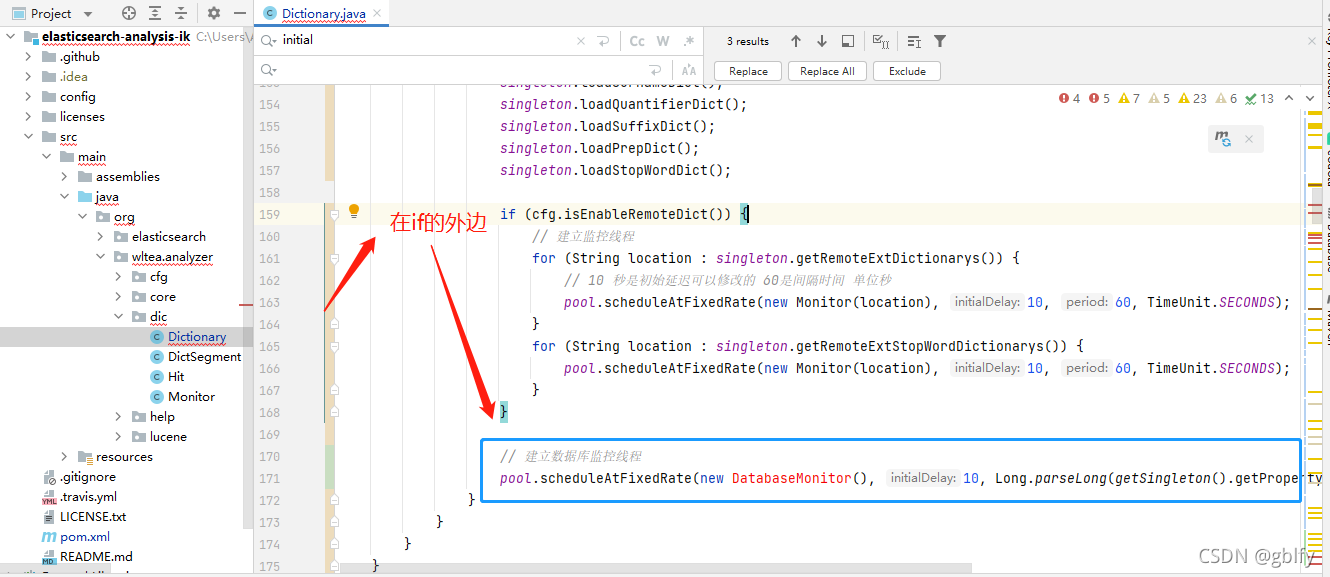

2.6. Modify Dictionary

- 1. Load the jdbc.properties file in the constructor

```java
// Load the jdbc.properties file
loadJdbcProperties();
```

- 2. Change getProperty() to public

- 3. Add several methods for adding and removing words

Append the following methods at the end of the class:

```java
/**
 * Add a new word
 */
public static void addWord(String word) {
    singleton._MainDict.fillSegment(word.trim().toLowerCase().toCharArray());
}

/**
 * Remove (disable) a word
 */
public static void disableWord(String word) {
    singleton._MainDict.disableSegment(word.trim().toLowerCase().toCharArray());
}

/**
 * Add a new stop word
 */
public static void addStopword(String word) {
    singleton._StopWords.fillSegment(word.trim().toLowerCase().toCharArray());
}

/**
 * Remove (disable) a stop word
 */
public static void disableStopword(String word) {
    singleton._StopWords.disableSegment(word.trim().toLowerCase().toCharArray());
}

/**
 * Load jdbc.properties
 */
public void loadJdbcProperties() {
    Path file = PathUtils.get(getDictRoot(), DatabaseMonitor.PATH_JDBC_PROPERTIES);
    // try-with-resources closes the stream even if loading fails
    try (FileInputStream input = new FileInputStream(file.toFile())) {
        props.load(input);
        logger.info("====================================properties====================================");
        for (Map.Entry<Object, Object> entry : props.entrySet()) {
            logger.info("{}: {}", entry.getKey(), entry.getValue());
        }
        logger.info("====================================properties====================================");
    } catch (IOException e) {
        logger.error("failed to read file: " + DatabaseMonitor.PATH_JDBC_PROPERTIES, e);
    }
}
```

- 4. Start the custom database monitor thread in initial()

Find the initial(Configuration cfg) method and add:

```java
// Create the database monitor thread
pool.scheduleAtFixedRate(new DatabaseMonitor(), 10,
        Long.parseLong(getSingleton().getProperty(DatabaseMonitor.JDBC_UPDATE_INTERVAL)), TimeUnit.SECONDS);
```
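scheduleAtFixedRate(task, initialDelay, period, unit) runs the monitor once after initialDelay and then once every period, here on the pool's single thread. The sketch below (PollingDemo is a hypothetical name) shrinks the delays to milliseconds so the behavior can be observed quickly. It also demonstrates a documented property of ScheduledExecutorService: if one execution throws, all later executions of that task are suppressed. For that reason, a monitor's run() should not let exceptions escape.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical demo of the fixed-rate polling used to start DatabaseMonitor. */
class PollingDemo {

    /**
     * Runs `task` at a fixed rate and reports whether it completed `runs` executions within `timeoutMs`.
     * Delays are in milliseconds here; the plugin uses seconds.
     */
    static boolean completesRuns(Runnable task, int runs, long initialDelayMs, long periodMs, long timeoutMs)
            throws InterruptedException {
        ScheduledExecutorService pool = Executors.newScheduledThreadPool(1);
        CountDownLatch latch = new CountDownLatch(runs);
        try {
            pool.scheduleAtFixedRate(() -> {
                task.run();        // if this throws, countDown() is skipped...
                latch.countDown(); // ...and the executor suppresses every later run
            }, initialDelayMs, periodMs, TimeUnit.MILLISECONDS);
            return latch.await(timeoutMs, TimeUnit.MILLISECONDS);
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicInteger polls = new AtomicInteger();
        // A well-behaved monitor keeps polling: 3 runs arrive well within 2 s
        System.out.println(completesRuns(polls::incrementAndGet, 3, 10, 20, 2000)); // true
        // A monitor that lets an exception escape stops after its first run
        System.out.println(completesRuns(() -> { throw new IllegalStateException("db down"); }, 2, 10, 20, 300)); // false
    }
}
```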

2.7. Hot Update Class

DatabaseMonitor is the class that implements the MySQL hot update:

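- 1. lastUpdateTimeOfMainDic and lastUpdateTimeOfStopword record the update_time of the last row processed in the previous run

- 2. Query the rows added or deleted since the previous run

- 3. Loop over the rows and check the is_deleted field: if it is true, disable the word; if it is false, add the word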
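Together, these three steps form an incremental sync driven by a timestamp watermark. The in-memory sketch below applies only the rows newer than the watermark, in update_time order. It advances the watermark to the last processed row, mirroring `update_time > ? order by update_time asc` in DatabaseMonitor. IncrementalSync and Row are hypothetical names, and a Set<String> stands in for the IK dictionary. Because the comparison is strictly greater-than, a row committed later with exactly the same update_time as the watermark would be skipped. The timestamp(6) column makes such collisions unlikely but does not rule them out.

```java
import java.sql.Timestamp;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical in-memory model of DatabaseMonitor's incremental update. */
class IncrementalSync {

    /** One row of es_extra_main: word, is_deleted, update_time. */
    static final class Row {
        final String word;
        final boolean deleted;
        final Timestamp updateTime;

        Row(String word, boolean deleted, Timestamp updateTime) {
            this.word = word;
            this.deleted = deleted;
            this.updateTime = updateTime;
        }
    }

    final Set<String> dictionary = new HashSet<>();
    Timestamp lastUpdateTime = Timestamp.valueOf("2020-01-01 00:00:00"); // same default as DEFAULT_LAST_UPDATE

    /** Emulates SELECT ... WHERE update_time > ? ORDER BY update_time ASC, then applies each row. */
    void poll(List<Row> table) {
        List<Row> changed = new ArrayList<>();
        for (Row r : table) {
            if (r.updateTime.after(lastUpdateTime)) {
                changed.add(r);
            }
        }
        changed.sort((a, b) -> a.updateTime.compareTo(b.updateTime));
        for (Row r : changed) {
            String word = r.word.trim();
            if (word.isEmpty()) {
                continue;
            }
            lastUpdateTime = r.updateTime; // advance the watermark
            if (r.deleted) {
                dictionary.remove(word);   // DatabaseMonitor calls Dictionary.disableWord(word)
            } else {
                dictionary.add(word);      // DatabaseMonitor calls Dictionary.addWord(word)
            }
        }
    }

    public static void main(String[] args) {
        IncrementalSync sync = new IncrementalSync();
        List<Row> table = new ArrayList<>();
        table.add(new Row("我叫凯悦", false, Timestamp.valueOf("2021-11-21 10:00:00")));
        sync.poll(table);
        System.out.println(sync.dictionary); // [我叫凯悦]
        // Soft delete: updating the row bumps update_time, so the next poll picks it up again
        table.set(0, new Row("我叫凯悦", true, Timestamp.valueOf("2021-11-21 10:05:00")));
        sync.poll(table);
        System.out.println(sync.dictionary); // []
    }
}
```

This is also why the tables use a soft-delete flag instead of DELETE. A physically deleted row would never show up in the `update_time > ?` query, so the monitor could not disable the word.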

Create the DatabaseMonitor class in the org.wltea.analyzer.dic package:

package org.wltea.analyzer.dic;import org.apache.logging.log4j.Logger;

import org.elasticsearch.SpecialPermission;

import org.wltea.analyzer.help.ESPluginLoggerFactory;import java.security.AccessController;

import java.security.PrivilegedAction;

import java.sql.*;

import java.time.LocalDate;

import java.time.LocalDateTime;

import java.time.LocalTime;/*** 通过 mysql 更新词典** @author gblfy* @date 2021-11-21* @WebSite gblfy.com*/

public class DatabaseMonitor implements Runnable {private static final Logger logger = ESPluginLoggerFactory.getLogger(DatabaseMonitor.class.getName());public static final String PATH_JDBC_PROPERTIES = "jdbc.properties";private static final String JDBC_URL = "jdbc.url";private static final String JDBC_USERNAME = "jdbc.username";private static final String JDBC_PASSWORD = "jdbc.password";private static final String JDBC_DRIVER = "jdbc.driver";private static final String SQL_UPDATE_MAIN_DIC = "jdbc.update.main.dic.sql";private static final String SQL_UPDATE_STOPWORD = "jdbc.update.stopword.sql";/*** 更新间隔*/public final static String JDBC_UPDATE_INTERVAL = "jdbc.update.interval";private static final Timestamp DEFAULT_LAST_UPDATE = Timestamp.valueOf(LocalDateTime.of(LocalDate.of(2020, 1, 1), LocalTime.MIN));private static Timestamp lastUpdateTimeOfMainDic = null;private static Timestamp lastUpdateTimeOfStopword = null;public String getUrl() {return Dictionary.getSingleton().getProperty(JDBC_URL);}public String getUsername() {return Dictionary.getSingleton().getProperty(JDBC_USERNAME);}public String getPassword() {return Dictionary.getSingleton().getProperty(JDBC_PASSWORD);}public String getDriver() {return Dictionary.getSingleton().getProperty(JDBC_DRIVER);}public String getUpdateMainDicSql() {return Dictionary.getSingleton().getProperty(SQL_UPDATE_MAIN_DIC);}public String getUpdateStopwordSql() {return Dictionary.getSingleton().getProperty(SQL_UPDATE_STOPWORD);}/*** 加载MySQL驱动*/public DatabaseMonitor() {SpecialPermission.check();AccessController.doPrivileged((PrivilegedAction<Void>) () -> {try {Class.forName(getDriver());} catch (ClassNotFoundException e) {logger.error("mysql jdbc driver not found", e);}return null;});}@Overridepublic void run() {SpecialPermission.check();AccessController.doPrivileged((PrivilegedAction<Void>) () -> {Connection conn = getConnection();// 更新主词典updateMainDic(conn);// 更新停用词updateStopword(conn);closeConnection(conn);return null;});}public 
Connection getConnection() {Connection connection = null;try {connection = DriverManager.getConnection(getUrl(), getUsername(), getPassword());} catch (SQLException e) {logger.error("failed to get connection", e);}return connection;}public void closeConnection(Connection conn) {if (conn != null) {try {conn.close();} catch (SQLException e) {logger.error("failed to close Connection", e);}}}public void closeRsAndPs(ResultSet rs, PreparedStatement ps) {if (rs != null) {try {rs.close();} catch (SQLException e) {logger.error("failed to close ResultSet", e);}}if (ps != null) {try {ps.close();} catch (SQLException e) {logger.error("failed to close PreparedStatement", e);}}}/*** 主词典*/public synchronized void updateMainDic(Connection conn) {logger.info("start update main dic");int numberOfAddWords = 0;int numberOfDisableWords = 0;PreparedStatement ps = null;ResultSet rs = null;try {String sql = getUpdateMainDicSql();Timestamp param = lastUpdateTimeOfMainDic == null ? DEFAULT_LAST_UPDATE : lastUpdateTimeOfMainDic;logger.info("param: " + param);ps = conn.prepareStatement(sql);ps.setTimestamp(1, param);rs = ps.executeQuery();while (rs.next()) {String word = rs.getString("word");word = word.trim();if (word.isEmpty()) {continue;}lastUpdateTimeOfMainDic = rs.getTimestamp("update_time");if (rs.getBoolean("is_deleted")) {logger.info("[main dic] disable word: {}", word);// 删除Dictionary.disableWord(word);numberOfDisableWords++;} else {logger.info("[main dic] add word: {}", word);// 添加Dictionary.addWord(word);numberOfAddWords++;}}logger.info("end update main dic -> addWord: {}, disableWord: {}", numberOfAddWords, numberOfDisableWords);} catch (SQLException e) {logger.error("failed to update main_dic", e);// 关闭 ResultSet、PreparedStatementcloseRsAndPs(rs, ps);}}/*** 停用词*/public synchronized void updateStopword(Connection conn) {logger.info("start update stopword");int numberOfAddWords = 0;int numberOfDisableWords = 0;PreparedStatement ps = null;ResultSet rs = null;try {String sql = 
getUpdateStopwordSql();Timestamp param = lastUpdateTimeOfStopword == null ? DEFAULT_LAST_UPDATE : lastUpdateTimeOfStopword;logger.info("param: " + param);ps = conn.prepareStatement(sql);ps.setTimestamp(1, param);rs = ps.executeQuery();while (rs.next()) {String word = rs.getString("word");word = word.trim();if (word.isEmpty()) {continue;}lastUpdateTimeOfStopword = rs.getTimestamp("update_time");if (rs.getBoolean("is_deleted")) {logger.info("[stopword] disable word: {}", word);// 删除Dictionary.disableStopword(word);numberOfDisableWords++;} else {logger.info("[stopword] add word: {}", word);// 添加Dictionary.addStopword(word);numberOfAddWords++;}}logger.info("end update stopword -> addWord: {}, disableWord: {}", numberOfAddWords, numberOfDisableWords);} catch (SQLException e) {logger.error("failed to update main_dic", e);} finally {// 关闭 ResultSet、PreparedStatementcloseRsAndPs(rs, ps);}}

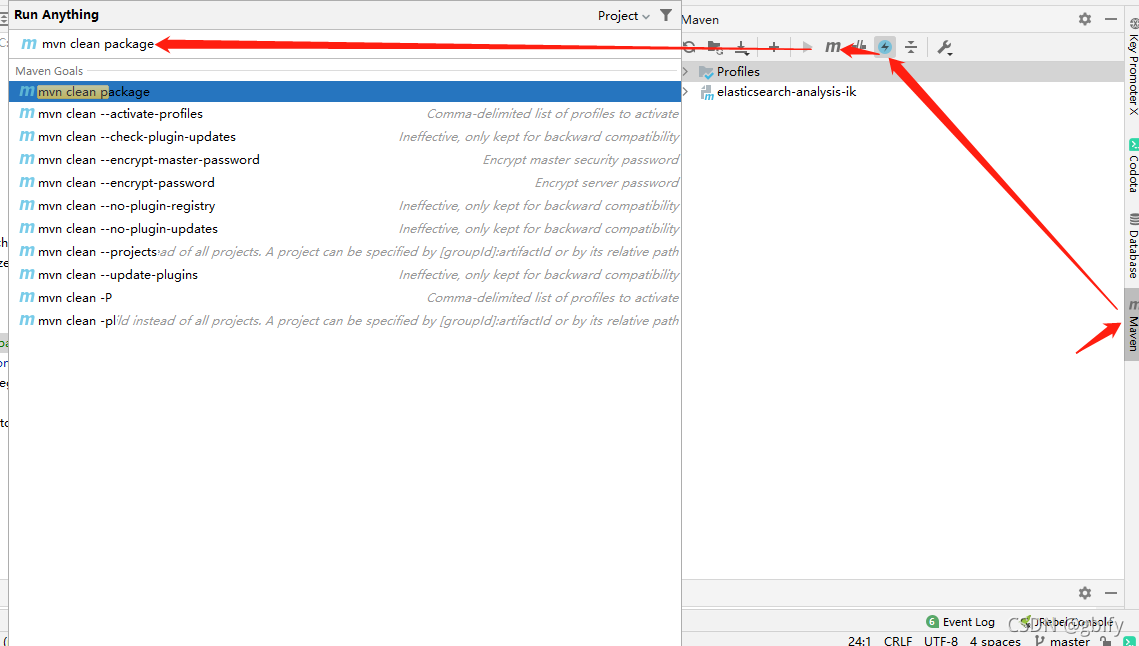

}2.8. 编译打包

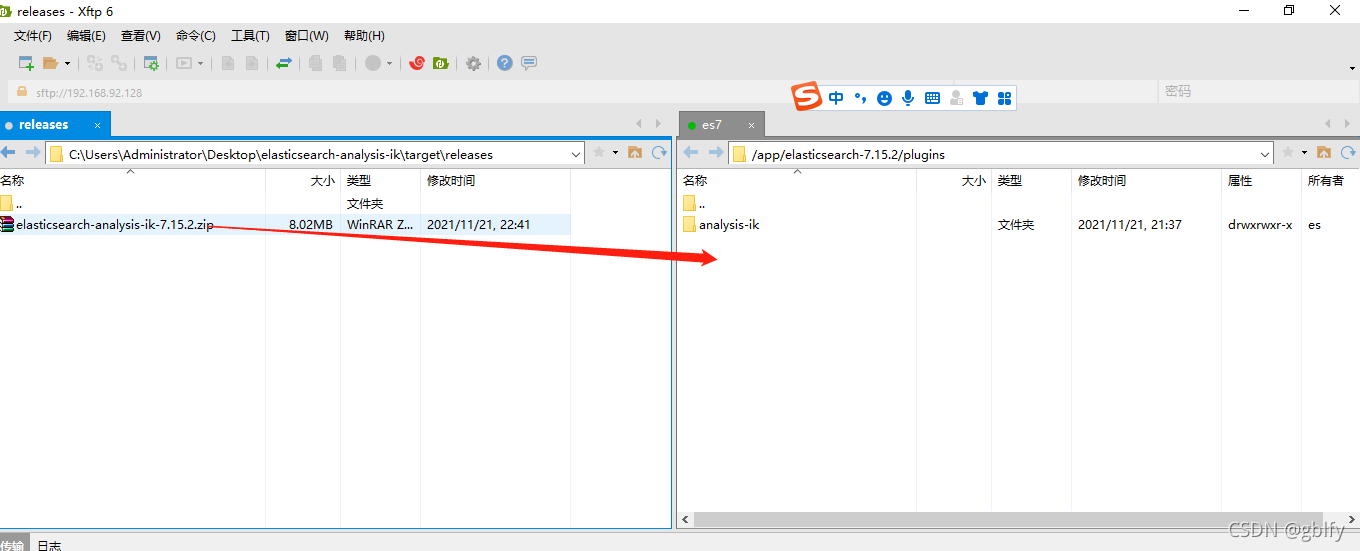

Run mvn clean package, then find the elasticsearch-analysis-ik-7.15.2.zip archive in the elasticsearch-analysis-ik/target/releases directory and upload it to the plugins directory (mine is /app/elasticsearch-7.15.2/plugins).

2.9. Upload

2.10. Change Log

III. Server Operations

3.1. Analyzer Plugin Directory

Create the analysis-ik folder:

```bash
cd /app/elasticsearch-7.15.2/plugins/
mkdir analysis-ik
```

3.2. Unzip the Plugin

```bash
unzip elasticsearch-analysis-ik-7.15.2.zip
```

3.3. Move Files

Move all the unzipped files into the analysis-ik folder:

```bash
mv *.jar plugin-* config/ analysis-ik
```

3.4. Directory Structure

3.5. Move the Configuration

Copy jdbc.properties to the expected directory.

At startup, the plugin loads /app/elasticsearch-7.15.2/config/analysis-ik/jdbc.properties:

```bash
cd /app/elasticsearch-7.15.2/plugins/
cp analysis-ik/config/jdbc.properties /app/elasticsearch-7.15.2/config/analysis-ik/
```

3.6. Restart ES

```bash
cd /app/elasticsearch-7.15.2/
bin/elasticsearch -d && tail -f logs/dianping.log
```

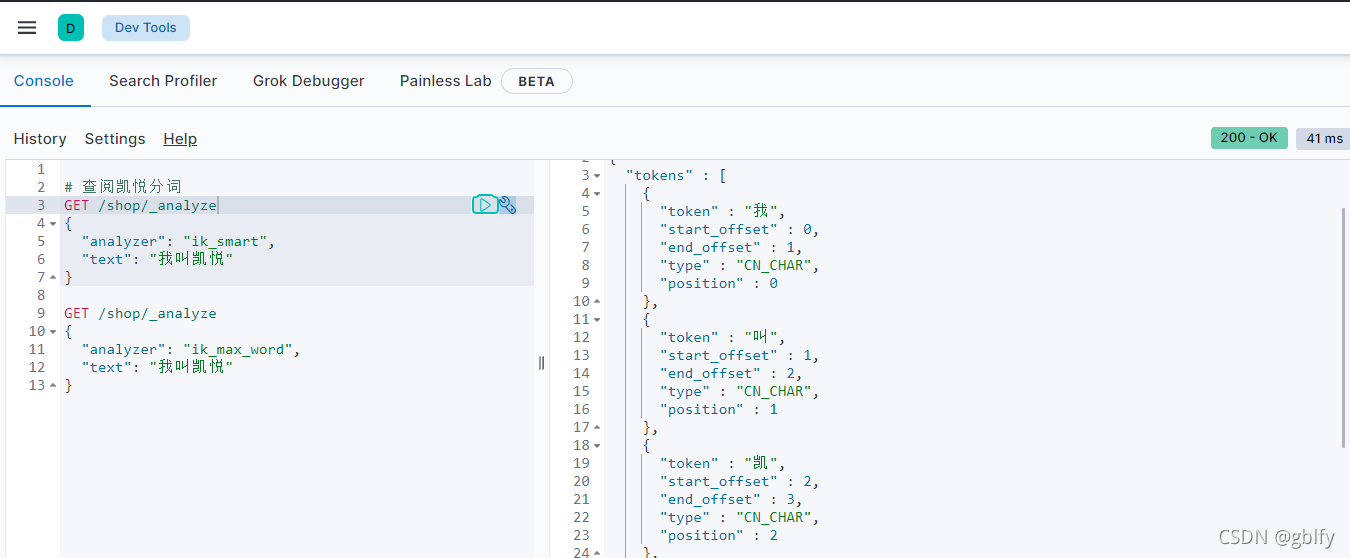

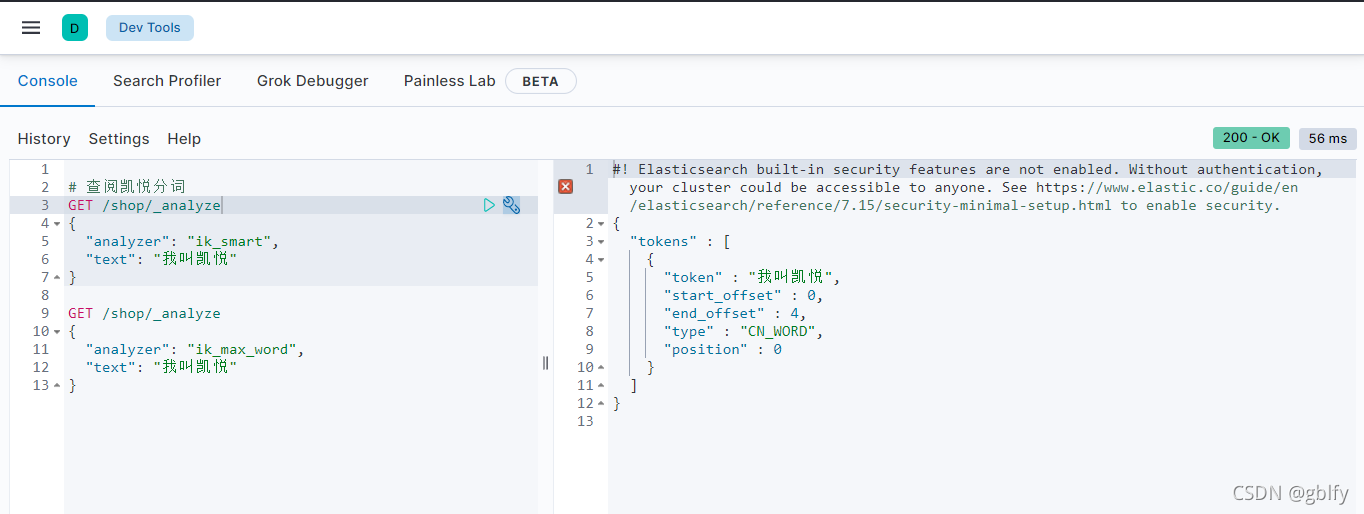

3.7. Test Tokenization

Before adding any custom words, run a test first to see the baseline result:

```
# Check how 凯悦 is tokenized
GET /shop/_analyze
{
  "analyzer": "ik_smart",
  "text": "我叫凯悦"
}

GET /shop/_analyze
{
  "analyzer": "ik_max_word",
  "text": "我叫凯悦"
}
```

Result: 我叫凯悦 is split into single characters:

```json
{
  "tokens" : [
    { "token" : "我", "start_offset" : 0, "end_offset" : 1, "type" : "CN_CHAR", "position" : 0 },
    { "token" : "叫", "start_offset" : 1, "end_offset" : 2, "type" : "CN_CHAR", "position" : 1 },
    { "token" : "凯", "start_offset" : 2, "end_offset" : 3, "type" : "CN_CHAR", "position" : 2 },
    { "token" : "悦", "start_offset" : 3, "end_offset" : 4, "type" : "CN_CHAR", "position" : 3 }
  ]
}
```

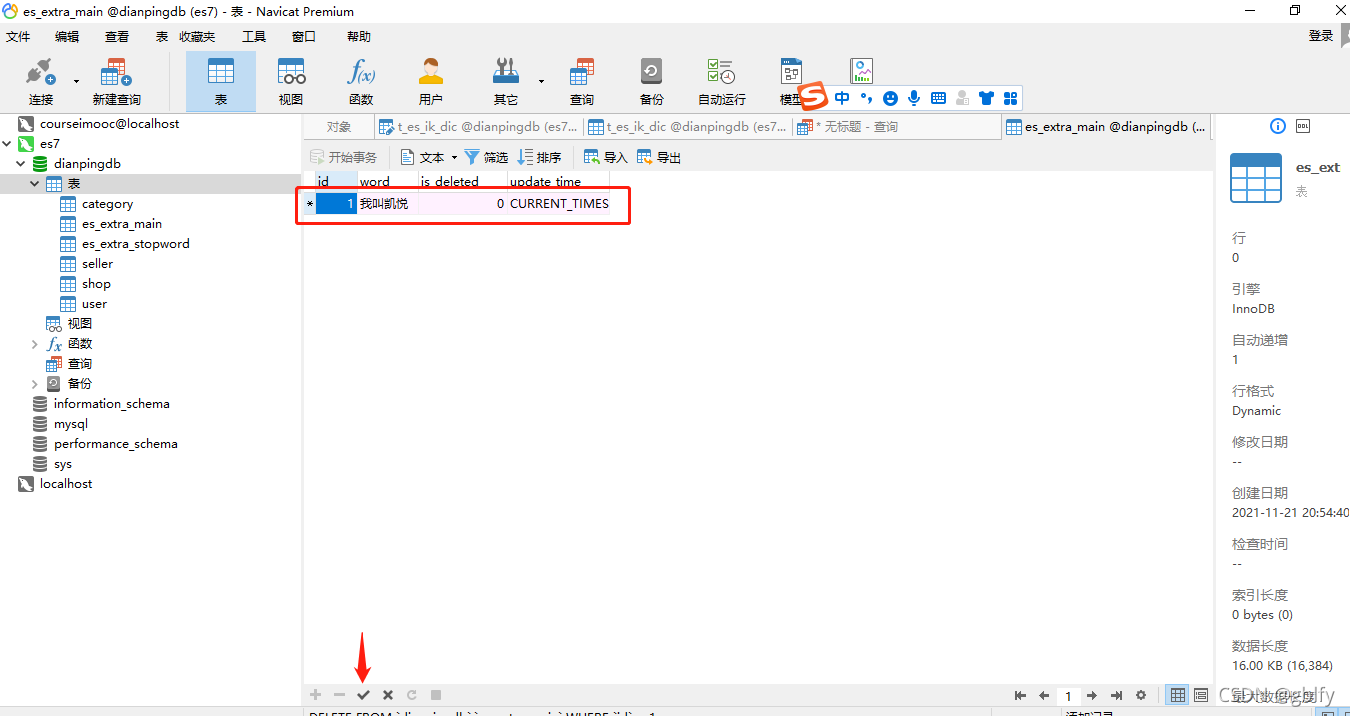

3.8. Add a Custom Word

Add the custom word “我叫凯瑞” to the es_extra_main table in the database and commit the transaction.

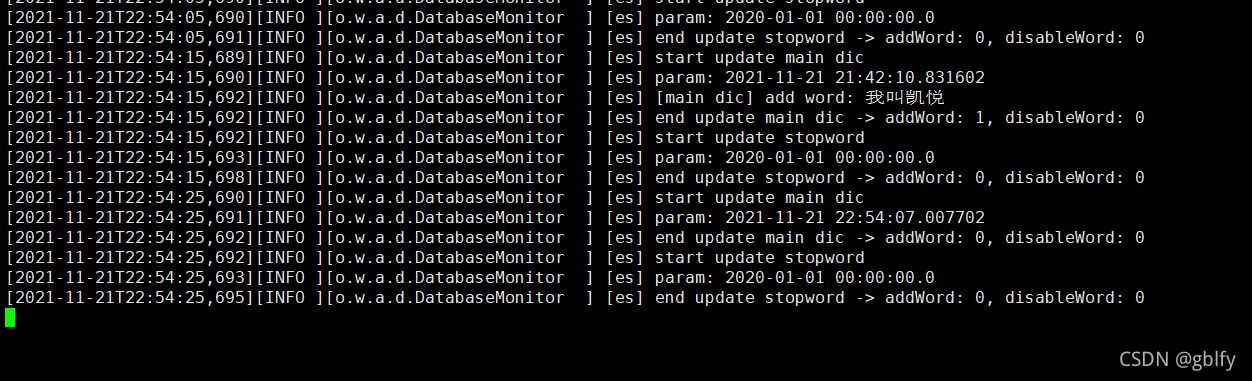

3.9. ES Console Monitoring

As the screenshot below shows, the custom word “我叫凯瑞” that we just added has already been loaded.

3.10. Check Tokenization Again

```
# Check how 凯悦 is tokenized
GET /shop/_analyze
{
  "analyzer": "ik_smart",
  "text": "我叫凯悦"
}

GET /shop/_analyze
{
  "analyzer": "ik_max_word",
  "text": "我叫凯悦"
}
```

3.11. Tokenization Data

As the screenshot shows, “我叫凯瑞” is now treated as a single token.
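Why does a single dictionary row change the output? IK's segmenters are dictionary driven. When no entry covers the characters, each Chinese character falls back to a CN_CHAR token, as in the result from 3.7. Once the whole phrase is in the main dictionary, it can be matched as one token. The sketch below illustrates that effect with plain forward maximum matching (FmmDemo is a hypothetical name). It is a simplified algorithm, not IK's actual ik_smart or ik_max_word logic.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical illustration: forward maximum matching against a word set (not IK's algorithm). */
class FmmDemo {

    static List<String> segment(String text, Set<String> dict, int maxWordLen) {
        List<String> tokens = new ArrayList<>();
        int i = 0;
        while (i < text.length()) {
            int end = Math.min(text.length(), i + maxWordLen);
            // Try the longest candidate first, shrinking until a dictionary word (or a single char) remains
            while (end > i + 1 && !dict.contains(text.substring(i, end))) {
                end--;
            }
            tokens.add(text.substring(i, end));
            i = end;
        }
        return tokens;
    }

    public static void main(String[] args) {
        Set<String> dict = new HashSet<>();
        System.out.println(segment("我叫凯悦", dict, 4)); // [我, 叫, 凯, 悦]
        dict.add("我叫凯悦");
        System.out.println(segment("我叫凯悦", dict, 4)); // [我叫凯悦]
    }
}
```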

3.12. Modified Source Code

https://gitee.com/gb_90/elasticsearch-analysis-ik
