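I recently read several articles on text classification. One of them is the paper "Adversarial Training Methods for Semi-Supervised Text Classification". Its main idea is to add a perturbation during training: a small adversarial perturbation is added to the embedding layer. Training with it gives considerably better results than training without it.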

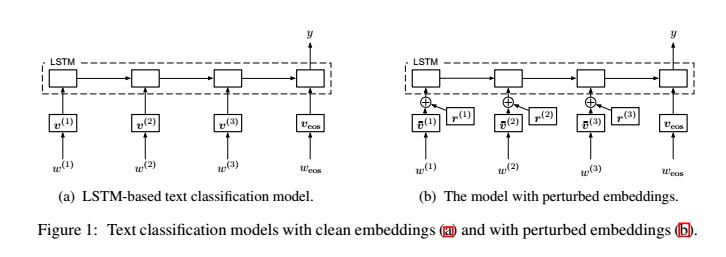

The network structure of the model is shown below:

Next, here is how the adversarial perturbation r is generated:

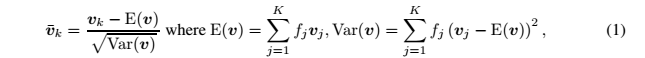

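Before the sequence enters the LSTM, each word w is mapped to its embedding v. The word embeddings are then normalized using frequency-weighted statistics: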

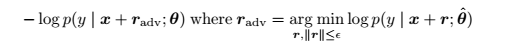
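Here is a minimal plain-Python sketch of the frequency-weighted normalization, assuming the embedding matrix is a list of rows (one per vocabulary word) and that raw word counts are turned into weights f_j = count_j / Σ counts. This is my own illustration of what `_normalize` in the code below computes. The function name `normalize_embeddings` is hypothetical.

```python
import math
from typing import List


def normalize_embeddings(emb: List[List[float]], freqs: List[float],
                         eps: float = 1e-6) -> List[List[float]]:
    """Frequency-weighted standardization of a V x d embedding matrix.

    Each dimension k becomes (v_jk - E[v_k]) / sqrt(Var[v_k] + eps), where
    E and Var are weighted by the normalized word frequencies.
    """
    total = sum(freqs)
    weights = [c / total for c in freqs]  # f_j, sums to 1
    dim = len(emb[0])
    # Weighted mean per dimension: E[v] = sum_j f_j * v_j
    mean = [sum(w * row[k] for w, row in zip(weights, emb)) for k in range(dim)]
    # Weighted variance per dimension: Var[v] = sum_j f_j * (v_j - E[v])^2
    var = [sum(w * (row[k] - mean[k]) ** 2 for w, row in zip(weights, emb))
           for k in range(dim)]
    std = [math.sqrt(v + eps) for v in var]
    return [[(row[k] - mean[k]) / std[k] for k in range(dim)] for row in emb]
```

Weighting by frequency means common words dominate the statistics. After normalization, the frequency-weighted mean of every dimension is 0 and its weighted variance is about 1.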

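Next, define the model's loss function. Let x be the input, θ the model parameters and r_adv the adversarial perturbation. The loss function is: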

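One detail: θ̂ is a copy of θ, but it is used only to compute the perturbation. It does not take part in backpropagation, so no gradient flows back through the perturbation into the parameters.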

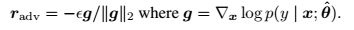
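For reference, here is a LaTeX sketch of the loss, based on my reading of Miyato et al. In it, x stands for the normalized embedding sequence that the perturbation is added to, and ε is the perturbation norm (`config.model.epsilon` in the code). The last line is the combined loss that the code minimizes (`loss + perturLoss`):

```latex
\begin{aligned}
L_{\text{adv}}(\theta) &= -\log p\left(y \mid x + r_{\text{adv}};\ \theta\right),\\
r_{\text{adv}} &= \operatorname*{arg\,min}_{r,\ \lVert r\rVert_2 \le \epsilon} \log p\left(y \mid x + r;\ \hat{\theta}\right)
\approx \epsilon\,\frac{g}{\lVert g\rVert_2},
\quad g = \nabla_{x}\left[-\log p\left(y \mid x;\ \hat{\theta}\right)\right],\\
\mathcal{L}(\theta) &= -\log p\left(y \mid x;\ \theta\right) + L_{\text{adv}}(\theta).
\end{aligned}
```

The approximation replaces the exact minimization with a single linearization step. That is why only one extra gradient computation is needed per training step.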

Then compute the perturbation:

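First take the derivative of the loss with respect to the word embeddings to get the gradient g. Then L2-normalize g and scale it to the fixed norm ε: r_adv = ε·g/‖g‖₂.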
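The scaling step can be sketched in plain Python for a single example, represented as a list of time steps, each a list of embedding values. This is my own illustration of what `_scaleL2` in the code below does, not the author's code, and `scale_l2` is a hypothetical name. Dividing by the largest absolute entry before squaring keeps the sum of squares from overflowing, since ‖g‖ = a·‖g/a‖:

```python
import math
from typing import List

Matrix = List[List[float]]  # [num_steps][dim] for one example


def scale_l2(grad: Matrix, epsilon: float) -> Matrix:
    """Rescale one example's gradient so its L2 norm (over all steps) is ~epsilon."""
    # a = max |g|, plus a tiny constant to avoid dividing by zero
    alpha = max(abs(x) for row in grad for x in row) + 1e-12
    # ||g|| = a * ||g / a||; entries of g / a are at most 1, so squaring is safe
    sq = sum((x / alpha) ** 2 for row in grad for x in row)
    l2 = alpha * math.sqrt(sq + 1e-6)
    # Unit direction times the desired norm
    return [[epsilon * x / l2 for x in row] for row in grad]
```

For example, `scale_l2([[1e200, 1e200]], 2.0)` still returns a vector of norm about 2, whereas a naive sum of squares of those entries would overflow.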

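At this point the perturbation is complete. It is added to the word embeddings, and the perturbed embeddings are passed through the model again to compute the adversarial loss used in training.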
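To see the whole procedure end to end, here is a toy version that uses logistic regression in place of the Bi-LSTM + attention model. That substitution is purely an assumption for illustration, and `adversarial_losses` and the toy weights are hypothetical. For logistic regression, the gradient of the cross-entropy loss with respect to the input is (σ(z) − y)·w, so r_adv has a closed form:

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def bce(logit: float, y: float) -> float:
    """Binary cross-entropy for a single logit and a label y in {0, 1}."""
    p = sigmoid(logit)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def adversarial_losses(x: List[float], y: float, w: List[float], b: float,
                       epsilon: float) -> Tuple[float, float, float]:
    """Toy clean + adversarial loss; x stands in for the flattened embeddings."""
    logit = sum(wi * xi for wi, xi in zip(w, x)) + b
    clean = bce(logit, y)
    # dL/dx = (sigmoid(logit) - y) * w, treated as a constant (the theta-hat copy)
    g = [(sigmoid(logit) - y) * wi for wi in w]
    norm = math.sqrt(sum(gi * gi for gi in g)) + 1e-12
    r_adv = [epsilon * gi / norm for gi in g]  # r_adv = eps * g / ||g||
    adv_logit = sum(wi * (xi + ri) for wi, xi, ri in zip(w, x, r_adv)) + b
    adv = bce(adv_logit, y)
    return clean, adv, clean + adv  # same "loss + perturLoss" sum as the TF code
```

Because r_adv points along the loss gradient, the logit moves by ε·‖w‖ in the direction that hurts the true label. For this linear model the adversarial loss is therefore always at least the clean loss.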

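Below is the model code. It targets TensorFlow 1.x, since it relies on `tf.placeholder`, `tf.nn.rnn_cell` and `tf.contrib`: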

```python
import tensorflow as tf  # TensorFlow 1.x API


# Build the adversarial LSTM model
class AdversarailLSTM(object):
    def __init__(self, config, wordEmbedding, indexFreqs):
        # Keep the config on the instance so the helper methods do not depend on a global
        self.config = config

        # Model inputs
        self.inputX = tf.placeholder(tf.int32, [None, config.sequenceLength], name="inputX")
        self.inputY = tf.placeholder(tf.float32, [None, 1], name="inputY")
        self.dropoutKeepProb = tf.placeholder(tf.float32, name="dropoutKeepProb")

        # Per-word weights from word frequencies; indices 0 and 1
        # (presumably the padding and unknown tokens) get fixed counts
        indexFreqs[0], indexFreqs[1] = 20000, 10000
        weights = tf.cast(tf.reshape(indexFreqs / tf.reduce_sum(indexFreqs), [1, len(indexFreqs)]),
                          dtype=tf.float32)

        # Word embedding layer
        with tf.name_scope("wordEmbedding"):
            # Initialize from pretrained word vectors, normalized with frequency-weighted statistics
            normWordEmbedding = self._normalize(tf.cast(wordEmbedding, dtype=tf.float32, name="word2vec"), weights)
            #self.W = tf.Variable(tf.cast(wordEmbedding, dtype=tf.float32, name="word2vec"), name="W")
            self.embeddedWords = tf.nn.embedding_lookup(normWordEmbedding, self.inputX)

        # Binary cross-entropy loss on the clean input
        with tf.name_scope("loss"):
            with tf.variable_scope("Bi-LSTM", reuse=None):
                self.predictions = self._Bi_LSTMAttention(self.embeddedWords)
                # predictions are logits, so threshold the sigmoid (not the raw logit) at 0.5
                self.binaryPreds = tf.cast(tf.greater_equal(tf.nn.sigmoid(self.predictions), 0.5),
                                           tf.float32, name="binaryPreds")
                losses = tf.nn.sigmoid_cross_entropy_with_logits(logits=self.predictions, labels=self.inputY)
                loss = tf.reduce_mean(losses)

        # Loss on the perturbed input; reuse=True shares every get_variable weight with the clean pass
        with tf.name_scope("perturloss"):
            with tf.variable_scope("Bi-LSTM", reuse=True):
                perturWordEmbedding = self._addPerturbation(self.embeddedWords, loss)
                perturPredictions = self._Bi_LSTMAttention(perturWordEmbedding)
                perturLosses = tf.nn.sigmoid_cross_entropy_with_logits(logits=perturPredictions, labels=self.inputY)
                perturLoss = tf.reduce_mean(perturLosses)

        # Total loss = clean loss + adversarial loss
        self.loss = loss + perturLoss

    def _Bi_LSTMAttention(self, embeddedWords):
        config = self.config
        # Stacked bidirectional LSTM, one layer per entry in config.model.hiddenSizes
        with tf.name_scope("Bi-LSTM"):
            fwHiddenLayers = []
            bwHiddenLayers = []
            for idx, hiddenSize in enumerate(config.model.hiddenSizes):
                with tf.name_scope("Bi-LSTM" + str(idx)):
                    # Forward cell
                    lstmFwCell = tf.nn.rnn_cell.DropoutWrapper(
                        tf.nn.rnn_cell.LSTMCell(num_units=hiddenSize, state_is_tuple=True),
                        output_keep_prob=self.dropoutKeepProb)
                    # Backward cell
                    lstmBwCell = tf.nn.rnn_cell.DropoutWrapper(
                        tf.nn.rnn_cell.LSTMCell(num_units=hiddenSize, state_is_tuple=True),
                        output_keep_prob=self.dropoutKeepProb)
                    fwHiddenLayers.append(lstmFwCell)
                    bwHiddenLayers.append(lstmBwCell)

            # Multi-layer LSTM; with state_is_tuple=True each state is an (h, c) tuple, otherwise they are concatenated
            fwMultiLstm = tf.nn.rnn_cell.MultiRNNCell(cells=fwHiddenLayers, state_is_tuple=True)
            bwMultiLstm = tf.nn.rnn_cell.MultiRNNCell(cells=bwHiddenLayers, state_is_tuple=True)

            # dynamic_rnn accepts per-example sequence lengths; if none are given, the full length is used.
            # outputs = (output_fw, output_bw), each [batch_size, max_time, hidden_size] (fw and bw sizes match)
            # self.current_state = (state_fw, state_bw), with one (h, c) tuple per layer
            # Use the embeddedWords argument (not self.embeddedWords) so the perturbed pass sees the perturbation
            outputs, self.current_state = tf.nn.bidirectional_dynamic_rnn(
                fwMultiLstm, bwMultiLstm, embeddedWords, dtype=tf.float32, scope="bi-lstm" + str(idx))

            # As in the Bi-LSTM + attention paper, add the forward and backward outputs
            with tf.name_scope("Attention"):
                H = outputs[0] + outputs[1]
                # Attention output: one vector per sentence
                output = self.attention(H)
                outputSize = config.model.hiddenSizes[-1]

        # Fully connected output layer (get_variable so both passes share the weights)
        with tf.name_scope("output"):
            outputW = tf.get_variable("outputW", shape=[outputSize, 1],
                                      initializer=tf.contrib.layers.xavier_initializer())
            outputB = tf.get_variable("outputB", shape=[1], initializer=tf.constant_initializer(0.1))
            predictions = tf.nn.xw_plus_b(output, outputW, outputB, name="predictions")
        return predictions

    def attention(self, H):
        """Use attention to obtain a sentence representation."""
        config = self.config
        # Number of units in the last LSTM layer
        hiddenSize = config.model.hiddenSizes[-1]
        # Trainable attention weight vector (get_variable so both passes share it)
        W = tf.get_variable("attentionW", shape=[hiddenSize],
                            initializer=tf.random_normal_initializer(stddev=0.1))
        # Non-linear transform of the Bi-LSTM output
        M = tf.tanh(H)
        # M is [batch_size, time_step, hidden_size]; reshape to [batch_size * time_step, hidden_size] for matmul
        # newM is [batch_size * time_step, 1]: each time step's vector becomes a single score
        newM = tf.matmul(tf.reshape(M, [-1, hiddenSize]), tf.reshape(W, [-1, 1]))
        # Reshape newM to [batch_size, time_step]
        restoreM = tf.reshape(newM, [-1, config.sequenceLength])
        # Softmax normalization over time steps: [batch_size, time_step]
        self.alpha = tf.nn.softmax(restoreM)
        # Weighted sum of H with alpha as one matrix product: [batch_size, hidden_size, 1]
        r = tf.matmul(tf.transpose(H, [0, 2, 1]), tf.reshape(self.alpha, [-1, config.sequenceLength, 1]))
        # Squeeze only the last axis to get [batch_size, hidden_size], so a batch of size 1 keeps its batch axis
        sequeezeR = tf.squeeze(r, axis=[2])
        sentenceRepren = tf.tanh(sequeezeR)
        # Optional dropout on the attention output
        output = tf.nn.dropout(sentenceRepren, self.dropoutKeepProb)
        return output

    def _normalize(self, wordEmbedding, weights):
        """Standardize the word embeddings with frequency-weighted mean and variance."""
        mean = tf.matmul(weights, wordEmbedding)
        powWordEmbedding = tf.pow(wordEmbedding - mean, 2.)
        var = tf.matmul(weights, powWordEmbedding)
        stddev = tf.sqrt(1e-6 + var)
        return (wordEmbedding - mean) / stddev

    def _addPerturbation(self, embedded, loss):
        """Add the adversarial perturbation to the word embeddings."""
        grad, = tf.gradients(loss, embedded,
                             aggregation_method=tf.AggregationMethod.EXPERIMENTAL_ACCUMULATE_N)
        # Treat the gradient as a constant: no backprop through the perturbation (the role of θ̂)
        grad = tf.stop_gradient(grad)
        perturb = self._scaleL2(grad, self.config.model.epsilon)
        return embedded + perturb

    def _scaleL2(self, x, norm_length):
        # shape(x) = [batch, num_step, d]
        # Divide x by max(abs(x)) for a numerically stable L2 norm: 2norm(x) = a * 2norm(x/a)
        # Scale over the full sequence, i.e. dims (1, 2)
        alpha = tf.reduce_max(tf.abs(x), (1, 2), keep_dims=True) + 1e-12
        l2_norm = alpha * tf.sqrt(tf.reduce_sum(tf.pow(x / alpha, 2), (1, 2), keep_dims=True) + 1e-6)
        x_unit = x / l2_norm
        return norm_length * x_unit
```

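The code adds adversarial training on top of a Bi-LSTM + attention model and is trained on the IMDB movie review dataset. It did reach its best result quickly. However, training needs a lot of memory (my machine stopped after a few dozen steps), and each step takes somewhat longer. This is because every step runs a second forward and backward pass on the perturbed embeddings.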
