Python Datasets for Data Modeling

There are useful Python packages that allow loading publicly available datasets with just a few lines of code. In this post, we will look at 5 packages that give instant access to a range of datasets. For each package, we will look at how to check out its list of available datasets and how to load an example dataset to a pandas dataframe.

0. Python setup 🔧

I assume the reader (👀 yes, you!) has access to and is familiar with Python including installing packages, defining functions and other basic tasks. If you are new to Python, this is a good place to get started.

I have used and tested the scripts in Python 3.7.1 in Jupyter Notebook. Let’s make sure you have the relevant packages installed before we dive in:

◼️ pydataset: Dataset package,
◼️ seaborn: Data Visualisation package,
◼️ sklearn (scikit-learn): Machine Learning package,
◼️ statsmodels: Statistical Model package and
◼️ nltk: Natural Language Tool Kit package
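A quick way to confirm that the packages are installed is to look each one up without importing it. The sketch below uses the standard library's `importlib.util.find_spec`. The helper name `check_packages` is made up for illustration, and the names listed are import names (scikit-learn, for example, is imported as `sklearn`).

```python
from importlib.util import find_spec

# Import names of the packages used in this post (pandas is needed throughout)
PACKAGES = ["pandas", "pydataset", "seaborn", "sklearn", "statsmodels", "nltk"]


def check_packages(names):
    """Return a dict mapping each import name to True if it can be found."""
    return {name: find_spec(name) is not None for name in names}


for name, found in check_packages(PACKAGES).items():
    print(f"{name}: {'installed' if found else 'missing'}")
```

Anything reported as missing can be installed with pip. Note that the install name can differ from the import name: `sklearn` is installed as `scikit-learn`.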

For each package, we will inspect the shape, head and tail of an example dataset. To avoid repeating ourselves, let’s quickly make a function:

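    # Create a function to glimpse the data
    from IPython.display import display  # available by default in Jupyter; imported so the script also runs elsewhere

    def glimpse(df):
        print(f"{df.shape[0]} rows and {df.shape[1]} columns")
        display(df.head())
        display(df.tail())

Alright, we are ready to dive in! 🐳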

1. PyDataset 📚

The first package we are going to look at is PyDataset. It’s easy to use and gives access to over 700 datasets. The package was inspired by the ease of accessing datasets in R and aims to bring that ease to Python. Let’s check out the list of datasets:

    # Import package
    from pydataset import data

    # Check out datasets
    data()

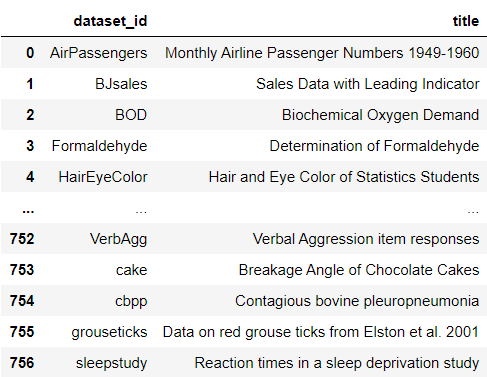

This returns a dataframe containing dataset_id and title for all datasets which you can browse through. Currently, there are 757 datasets. Now, let’s load the famous iris dataset as an example:

    # Load as a dataframe
    df = data('iris')
    glimpse(df)

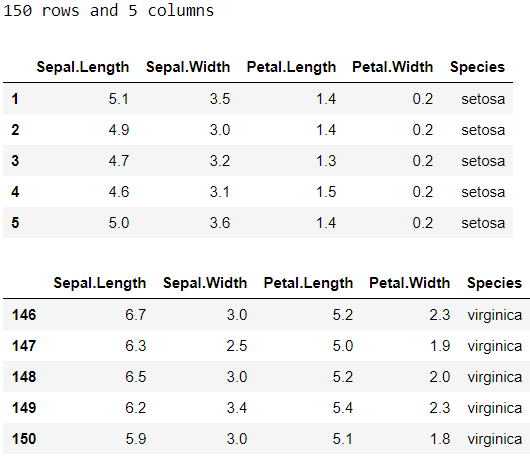

Loading a dataset to a dataframe takes only one line once we import the package. So simple, right? Something to note is that the row index starts from 1, as opposed to 0, in this dataset.
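If the 1-based index gets in the way, for instance when combining the data with dataframes from the other packages, it can be reset. Here is a minimal sketch using a small hand-made dataframe as a stand-in for the PyDataset output (the variable names `sample` and `sample_zero_based` are just for illustration):

```python
import pandas as pd

# Small stand-in for a PyDataset result: note the index starts at 1
sample = pd.DataFrame(
    {"Sepal.Length": [5.1, 4.9, 4.7], "Species": ["setosa"] * 3},
    index=[1, 2, 3],
)

# Drop the old index and renumber the rows from 0
sample_zero_based = sample.reset_index(drop=True)

print(list(sample.index))             # [1, 2, 3]
print(list(sample_zero_based.index))  # [0, 1, 2]
```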

🔗 To learn more, check out PyDataset’s GitHub repository.

2. Seaborn 🌊

Seaborn is another package that provides easy access to example datasets. To find the full list of datasets, you can browse the GitHub repository or you can check it in Python like this:

    # Import seaborn
    import seaborn as sns

    # Check out available datasets
    print(sns.get_dataset_names())

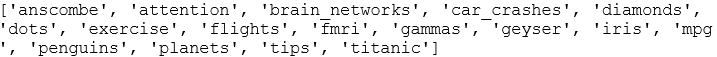

Currently, there are 17 datasets available. Let’s load the iris dataset as an example:

# Load as a dataframe

df = sns.load_dataset('iris')

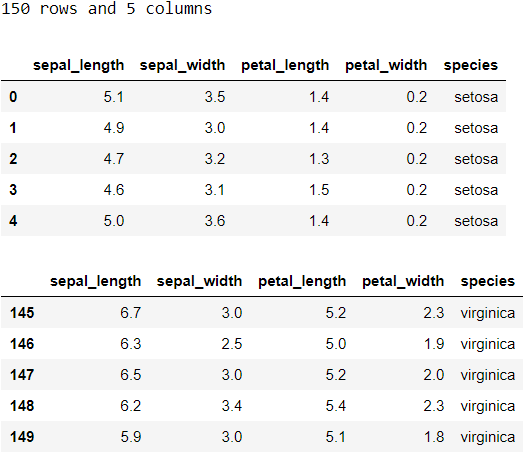

glimpse(df)

It also takes only one line to load a dataset as a dataframe after importing the package.

🔗 To learn more, check out the documentation page for load_dataset.

3. Scikit-learn 📓

Not only is scikit-learn awesome for feature engineering and building models, it also comes with toy datasets and provides easy access to download and load real-world datasets. The list of toy and real datasets, as well as other details, is available here. You can find out more about a dataset by scrolling through the link or referring to the documentation for the individual functions. It’s worth mentioning that the datasets include some toy and real image datasets, such as the digits dataset and the Olivetti faces dataset.

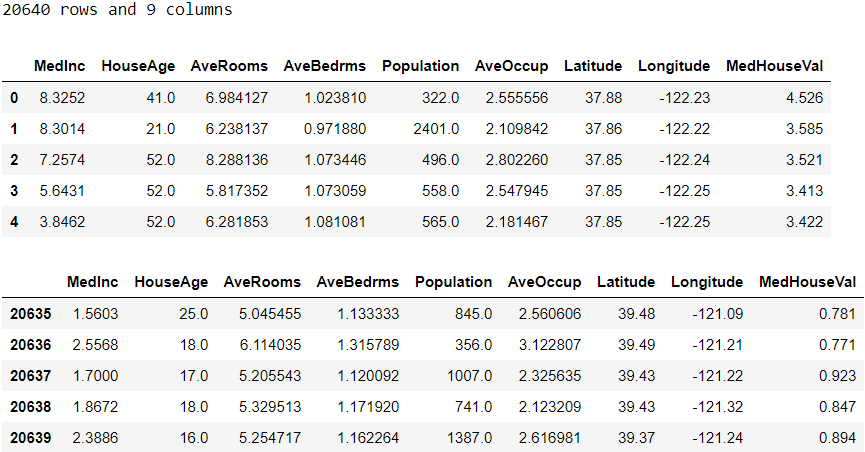

Now, let’s look at how to load a real dataset with an example:

    # Import package
    from sklearn.datasets import fetch_california_housing

    # Load data (will download the data if it's the first time loading)
    housing = fetch_california_housing(as_frame=True)

    # Create a dataframe
    df = housing['data'].join(housing['target'])
    glimpse(df)

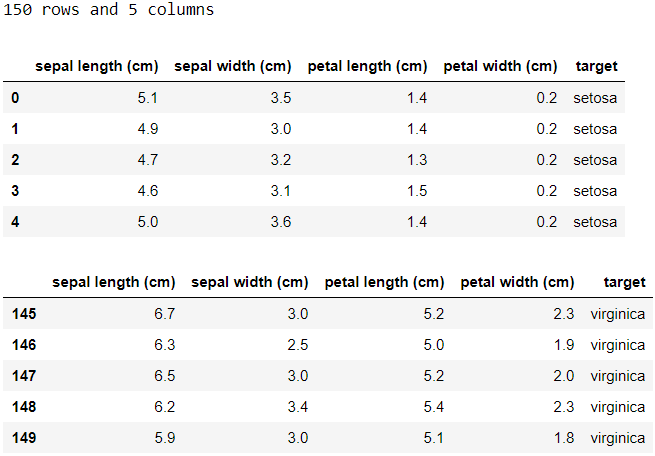

Here’s how to load an example toy dataset, iris:

    # Import package
    from sklearn.datasets import load_iris

    # Load data
    iris = load_iris(as_frame=True)

    # Create a dataframe
    df = iris['data'].join(iris['target'])

    # Map target names (only for categorical target)
    # Assign the result back instead of using inplace=True on a column, which recent pandas versions warn about
    target_map = dict(zip(range(len(iris['target_names'])), iris['target_names']))
    df['target'] = df['target'].replace(target_map)
    glimpse(df)
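The mapping passed to replace in the last step is built with dict(zip(...)), which pairs each integer code with its class name. Spelled out in plain Python, with the three iris class names written by hand here instead of read from iris['target_names'], it looks like this:

```python
# Class names in the order scikit-learn stores them for iris
target_names = ["setosa", "versicolor", "virginica"]

# Pair each integer code (0, 1, 2) with its name
mapping = dict(zip(range(len(target_names)), target_names))
print(mapping)  # {0: 'setosa', 1: 'versicolor', 2: 'virginica'}

# Apply the mapping to a few encoded targets
codes = [0, 2, 1, 0]
labels = [mapping[code] for code in codes]
print(labels)  # ['setosa', 'virginica', 'versicolor', 'setosa']
```

`dict(enumerate(target_names))` builds the same mapping a little more briefly.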

💡 If you get an error regarding the as_frame argument, either update your sklearn version to 0.23 or higher or use the script below:

    # Import packages
    import pandas as pd
    from sklearn.datasets import load_iris

    # Load data
    iris = load_iris()

    # Create a dataframe
    X = pd.DataFrame(iris['data'], columns=iris['feature_names'])
    y = pd.DataFrame(iris['target'], columns=['target'])
    df = X.join(y)

    # Map target names (only for categorical target)
    # Assign the result back instead of using inplace=True on a column, which recent pandas versions warn about
    target_map = dict(zip(range(len(iris['target_names'])), iris['target_names']))
    df['target'] = df['target'].replace(target_map)
    glimpse(df)

🔗 For more information, check out scikit-learn’s documentation page.
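To tell in advance which of the two iris scripts applies, the installed version can be compared against 0.23. The helper below is a hypothetical sketch, not part of scikit-learn. It assumes a plain numeric version string such as "0.22.2" and would need extra handling for suffixes like release candidates.

```python
def supports_as_frame(version):
    """Return True if a scikit-learn version string is 0.23 or higher."""
    # Only the major and minor parts matter for this check
    major, minor = version.split(".")[:2]
    return (int(major), int(minor)) >= (0, 23)


print(supports_as_frame("0.22.2"))  # False
print(supports_as_frame("0.23.0"))  # True
print(supports_as_frame("1.4.2"))   # True
```

In practice, the string would come from `sklearn.__version__`.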

4. Statsmodels 📔

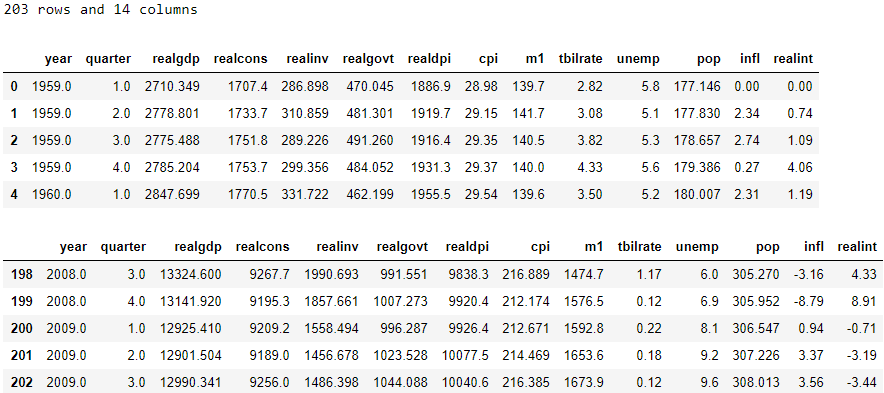

Another package through which we can access data is statsmodels. Available built-in datasets are listed here on their website. Let’s pick ‘United States Macroeconomic data’ as an example and load it:

    # Import package
    import statsmodels.api as sm

    # Load data as a dataframe
    df = sm.datasets.macrodata.load_pandas()['data']
    glimpse(df)

As you may have noticed, the name we used to access ‘United States Macroeconomic data’ is macrodata. To find the equivalent name for another dataset, look at the end of the URL of that dataset’s documentation page. For instance, if you click on ‘United States Macroeconomic data’ in the Available Datasets section and look at the address bar in your browser, you will see ‘macrodata.html’ at the end of the URL.
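The same lookup can be done in code by taking the last part of the URL and dropping its extension. This is only a sketch: the function name `dataset_name_from_url` is made up, and the example address is an assumption about how the documentation URLs are laid out, so it may differ between documentation versions.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse


def dataset_name_from_url(url):
    """Return the last path component of a URL without its file extension."""
    return PurePosixPath(urlparse(url).path).stem


# Example URL (assumed layout; check the address bar for the real one)
url = "https://www.statsmodels.org/stable/datasets/generated/macrodata.html"
print(dataset_name_from_url(url))  # macrodata
```

The resulting name is what follows `sm.datasets.` in the loading script, as in `sm.datasets.macrodata`.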

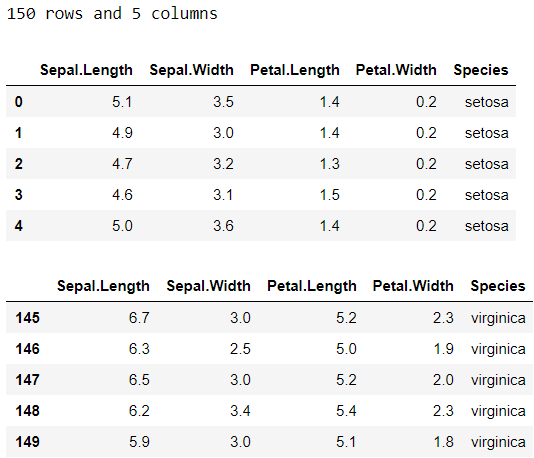

Statsmodels also allows loading datasets from R with the get_rdataset function. The list of available datasets is here. Using the iris dataset as an example, here is how we can load the data:

    # Load data as a dataframe
    df = sm.datasets.get_rdataset(dataname='iris', package='datasets')['data']
    glimpse(df)

🔗 For more information, check out the documentation page for datasets.

5. Natural Language Toolkit | NLTK 📜

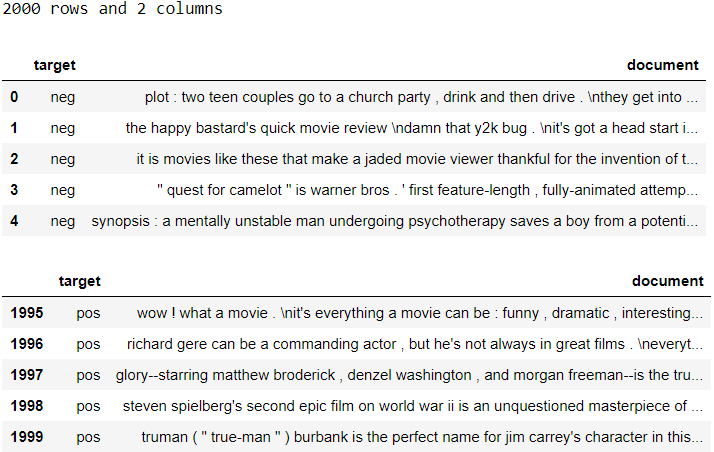

This package is slightly different from the rest because it provides access only to text datasets. Here’s the list of text datasets available (Psst, please note some items in that list are models). Using the id, we can access the relevant text dataset from NLTK. Let’s take Sentiment Polarity Dataset as an example. Its id is movie_reviews. Let’s first download it with the following script:

    # Import package
    import nltk

    # Download the corpus (only need to do once)
    nltk.download('movie_reviews')

If it is already downloaded, running this will notify you that you have done so. Once downloaded, we can load the data to a dataframe like this:

    # Import packages
    import pandas as pd
    from nltk.corpus import movie_reviews

    # Convert to dataframe
    documents = []
    for fileid in movie_reviews.fileids():
        tag, filename = fileid.split('/')
        documents.append((tag, movie_reviews.raw(fileid)))
    df = pd.DataFrame(documents, columns=['target', 'document'])
    glimpse(df)

There is no one-size-fits-all approach when converting text data from NLTK to a dataframe. This means you will need to look up the appropriate way to convert to a dataframe on a case-by-case basis.
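For this particular corpus, the conversion above works because each file id has the form 'label/filename', so splitting on the slash yields the sentiment label. A small sketch of that step on its own follows. The two file ids are written by hand as illustrative examples of the format, not read from NLTK.

```python
# Illustrative file ids in the 'label/filename' form used by movie_reviews
fileids = ["neg/cv000_29416.txt", "pos/cv000_29590.txt"]

rows = []
for fileid in fileids:
    # Everything before the slash is the sentiment label
    tag, filename = fileid.split("/")
    rows.append((tag, filename))

print(rows)
```

Other corpora organise their files differently, which is why the conversion has to be worked out for each one.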

🔗 For more information, check out this resource on accessing text corpora and lexical resources.

There you have it, 5 packages that allow easy access to datasets. Now you know how to load datasets from any of these packages. It’s possible that datasets available in these packages could change in future but you know how to find all the available datasets, anyway! 🙆

Thank you for reading my post. Hope you found something useful ✂️. If you are interested, here are the links to some of my other posts:
◼️ 5 tips for pandas users
◼️ How to transform variables in a pandas DataFrame
◼️ TF-IDF explained
◼️ Supervised text classification model in Python

Bye for now 🏃💨

Translated from: https://towardsdatascience.com/datasets-in-python-425475a20eb1
