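The theory behind BP (backpropagation) neural networks is explained in great detail elsewhere online, so I won't go over it again here. This article designs and implements a BP neural network in a simple way and then runs a quick test on an identity mapping (encoding) task.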

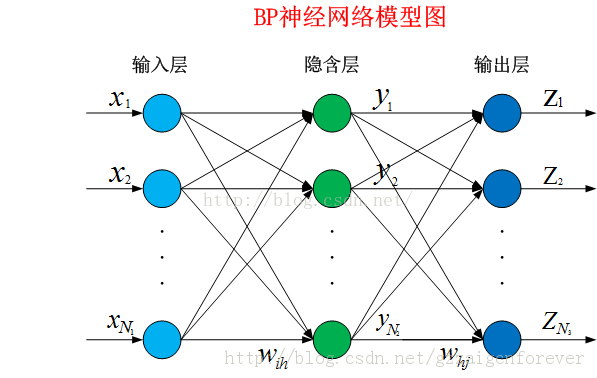

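The figure above shows the BP neural network model.

Basic idea of the BP neural network

Learning in a BP neural network consists of two processes: forward propagation of information and backward propagation of error.

Forward pass: the data x in the model diagram flows from the input layer all the way to the output layer z.

Backpropagation: during training, if the output of the forward pass differs from the expected value, the error is propagated back from the output layer toward the input layer. On the way back, the weight w of every connection in every layer is adjusted so that the error decreases.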
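For reference, the two processes can be written as formulas. This is a minimal summary assuming a squared-error loss and no bias terms (the code below has none); the symbols net, h, t, δ, η (learning rate) and α (momentum coefficient, 0.9 in the code) are notation chosen here, not taken from the original diagram. Since the negative gradient of E with respect to each weight is δ times that weight's input, adding Δw moves the weights downhill.

```latex
\begin{aligned}
h_j &= \sigma(\mathrm{net}_j), \quad \mathrm{net}_j = \sum_i w_{ji}\, x_i \\
z_k &= \sigma(\mathrm{net}_k), \quad \mathrm{net}_k = \sum_j w_{kj}\, h_j \\
E &= \tfrac{1}{2} \sum_k (t_k - z_k)^2 \\
\delta_k &= (t_k - z_k)\, \sigma'(\mathrm{net}_k) = (t_k - z_k)\, z_k (1 - z_k) \\
\delta_j &= \sigma'(\mathrm{net}_j) \sum_k w_{kj}\, \delta_k = h_j (1 - h_j) \sum_k w_{kj}\, \delta_k \\
\Delta w_{kj}(n) &= \eta\, \delta_k\, h_j + \alpha\, \Delta w_{kj}(n-1), \qquad
\Delta w_{ji}(n) = \eta\, \delta_j\, x_i + \alpha\, \Delta w_{ji}(n-1)
\end{aligned}
```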

BP neural network design

The design splits the neural network into three levels: the neuron, the network layer, and the whole network.

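First, define the sigmoid (logistic) function to use as the activation function:

```python
import numpy as np


def logistic(x):
    # Sigmoid activation: 1 / (1 + e^(-x))
    return 1 / (1 + np.exp(-x))


def logistic_derivative(x):
    # Derivative of the sigmoid with respect to its input x (the net input, not the activated output)
    return logistic(x) * (1 - logistic(x))
```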
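A quick numerical check of the derivative, as a sketch: it compares `logistic_derivative` with a central finite difference. It also shows that the derivative has to be evaluated at the net input z, not at the already-activated output σ(z), because the two give different values; written in terms of the output y = σ(z), the same derivative is y(1 − y). The helper `finite_difference` is a hypothetical name introduced only for this check.

```python
import numpy as np


def logistic(x):
    return 1 / (1 + np.exp(-x))


def logistic_derivative(x):
    # Derivative with respect to the net input x
    return logistic(x) * (1 - logistic(x))


def finite_difference(f, x, eps=1e-6):
    # Hypothetical helper: central-difference approximation of f'(x)
    return (f(x + eps) - f(x - eps)) / (2 * eps)


z = np.array([-2.0, 0.0, 1.5])
numeric = finite_difference(logistic, z)
analytic = logistic_derivative(z)
print(np.allclose(numeric, analytic, atol=1e-8))  # True

# Feeding the activated output back into logistic_derivative gives a different (wrong) value
y = logistic(z)
print(np.allclose(logistic_derivative(y), analytic))  # False
# Correct form when only the output y = sigma(z) is available
print(np.allclose(y * (1 - y), analytic))  # True
```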

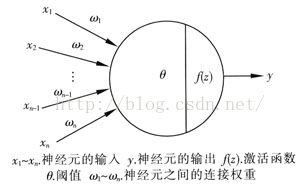

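Neuron design

As the neuron diagram above shows, a BP neural network can be broken down into a collection of neurons.

Main responsibilities of a neuron:

- Compute its output from the input data.

- Update the weight of each of its connections.

- Feed the weighted error back to the previous layer, which implements the backward (feedback) step.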

Note the momentum term in the weight update:

```python
weight_add = self.input * self.deltas_item * learning_rate + 0.9 * self.last_weight_add  # add momentum
```
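To see what the 0.9 momentum term does, here is a minimal sketch on a single weight, assuming for illustration that the plain gradient term g = input × delta stays constant. Each new step is the plain step plus 0.9 times the previous step, so the step size grows toward η·g / (1 − 0.9), ten times the plain step.

```python
learning_rate = 0.1
momentum = 0.9
g = 0.5  # assumed constant value of input * delta, for illustration only

last_weight_add = 0.0
steps = []
for _ in range(200):
    # Same form as the neuron update: plain step + momentum * previous step
    weight_add = g * learning_rate + momentum * last_weight_add
    steps.append(weight_add)
    last_weight_add = weight_add

print(steps[0])             # 0.05, the plain step
print(round(steps[-1], 6))  # 0.5, i.e. 0.05 / (1 - 0.9)
```

The larger effective step speeds up progress along directions where the gradient keeps the same sign, which is why momentum is often added to plain backpropagation.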

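The neuron code is as follows:

```python
class Neuron:
    def __init__(self, len_input):
        # Initial input weights: small random values (< 0.1)
        self.weights = np.random.random(len_input) * 0.1
        # Input of the current sample
        self.input = np.ones(len_input)
        # Weighted sum of the inputs (net input), kept for the derivative
        self.net = 0
        # Output passed to the next layer
        self.output = 1
        # Error term (delta)
        self.deltas_item = 0
        # Previous weight change, used by the momentum term
        self.last_weight_add = 0
        # Feedback for the previous layer, computed with the weights before the update
        self.back_weight = np.zeros(len_input)

    def calc_output(self, x):
        # Compute the output value
        self.input = x
        self.net = np.dot(self.weights, self.input)
        self.output = logistic(self.net)
        return self.output

    def get_back_weight(self):
        # Feedback for the previous layer: weight * delta for each input
        return self.back_weight

    def update_weight(self, target=0, back_weight=0, learning_rate=0.1, layer="OUTPUT"):
        # Update the weights; the sigmoid derivative is taken at the net input, not at the output
        if layer == "OUTPUT":
            self.deltas_item = (target - self.output) * logistic_derivative(self.net)
        elif layer == "HIDDEN":
            self.deltas_item = back_weight * logistic_derivative(self.net)
        # Backpropagation needs the weights as they were before this update
        self.back_weight = self.weights * self.deltas_item
        weight_add = self.input * self.deltas_item * learning_rate + 0.9 * self.last_weight_add  # add momentum
        self.weights += weight_add
        self.last_weight_add = weight_add
```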
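As a minimal sketch of what one output-neuron update does, the rule can be written as a standalone function (`output_neuron_step` is a hypothetical name, and momentum is left out). For a small learning rate, one step moves the output toward the target, so the squared error shrinks.

```python
import numpy as np


def logistic(x):
    return 1 / (1 + np.exp(-x))


def output_neuron_step(weights, x, target, learning_rate=0.1):
    # Hypothetical helper: one gradient step for a single sigmoid output neuron
    net = np.dot(weights, x)
    output = logistic(net)
    # Output-layer delta: (target - output) * sigma'(net), with sigma'(net) = output * (1 - output)
    delta = (target - output) * output * (1 - output)
    # Step on the squared error (no momentum in this sketch)
    return weights + learning_rate * delta * x


def squared_error(weights, x, target):
    return 0.5 * (target - logistic(np.dot(weights, x))) ** 2


rng = np.random.default_rng(0)
w = rng.random(3) * 0.1
x = np.array([1.0, 0.0, 1.0])
before = squared_error(w, x, target=1.0)
after = squared_error(output_neuron_step(w, x, target=1.0), x, target=1.0)
print(after < before)  # True
```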

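Network layer design

This code manages one network layer, which is either a hidden layer or the output layer. (The input layer simply uses the input data directly, so it is not implemented separately.)

A layer mainly manages its own neurons, so its interface mirrors the neuron's, and each method delegates to the neurons to do its work.

To simplify processing, each layer also holds a reference to the next layer.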

```python
class NetLayer:
    """
    Network layer wrapper.
    Manages the list of neurons in the current layer.
    """

    def __init__(self, len_node, in_count):
        """
        :param len_node: number of neurons in this layer
        :param in_count: number of inputs to this layer
        """
        # Neurons of the current layer
        self.neurons = [Neuron(in_count) for _ in range(len_node)]
        # Reference to the next layer, used for the recursive calls
        self.next_layer = None

    def calc_output(self, x):
        output = np.array([node.calc_output(x) for node in self.neurons])
        if self.next_layer is not None:
            return self.next_layer.calc_output(output)
        return output

    def get_back_weight(self):
        # Sum the feedback of all neurons: one error signal per input of this layer
        return sum(node.get_back_weight() for node in self.neurons)

    def update_weight(self, learning_rate, target):
        """
        Update the weights of this layer and of every layer after it.
        This is recursive, so callers must start from the first layer
        (the layer right after the input layer).
        :param learning_rate: learning rate
        :param target: expected output values
        """
        layer = "OUTPUT"
        back_weight = np.zeros(len(self.neurons))
        if self.next_layer is not None:
            back_weight = self.next_layer.update_weight(learning_rate, target)
            layer = "HIDDEN"
        for i, node in enumerate(self.neurons):
            target_item = 0 if len(target) <= i else target[i]
            node.update_weight(target=target_item, back_weight=back_weight[i],
                               learning_rate=learning_rate, layer=layer)
        return self.get_back_weight()
```
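In matrix form, summing each neuron's `weights * delta` over a layer is the product Wᵀδ. A small check of that equivalence, assuming a layer weight matrix `W` with one row per neuron (a representation the original code does not use):

```python
import numpy as np

rng = np.random.default_rng(1)
n_neurons, n_inputs = 8, 3
W = rng.random((n_neurons, n_inputs)) * 0.1  # row j holds the weights of neuron j
delta = rng.standard_normal(n_neurons)       # one delta per neuron

# Per-neuron form, as in the layer class: sum of weights_j * delta_j
per_neuron = sum(W[j] * delta[j] for j in range(n_neurons))
# Matrix form: W^T @ delta, one error signal per input of the layer
matrix_form = W.T @ delta

print(np.allclose(per_neuron, matrix_form))  # True
```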

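BP neural network implementation

This class manages the whole network and exposes a training interface and a prediction interface.

The network is built from a list: the first element is the number of inputs, the last is the number of output neurons, and the elements in between are the numbers of neurons in each hidden layer.

Because the layers are stored as a linked chain, operating on layers[0] operates on the whole network.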
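For the list `[8, 3, 8]` used in the test, `construct_network` skips the first element and creates one layer per remaining element, each taking the previous element as its input count. A quick sketch of the resulting (neurons, inputs) pairs:

```python
layers = [8, 3, 8]
# Same pairing as construct_network: NetLayer(layers[i], layers[i - 1]) for i >= 1
shapes = [(layers[i], layers[i - 1]) for i in range(1, len(layers))]
print(shapes)  # [(3, 8), (8, 3)]
```

So `layers[0]` is the 3-neuron hidden layer, linked to the 8-neuron output layer.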

```python
class NeuralNetWork:
    def __init__(self, layers):
        self.layers = []
        self.construct_network(layers)

    def construct_network(self, layers):
        last_layer = None
        for i, layer in enumerate(layers):
            if i == 0:
                # The first element is the input size; it has no neurons of its own
                continue
            cur_layer = NetLayer(layer, layers[i - 1])
            self.layers.append(cur_layer)
            if last_layer is not None:
                # Link the previous layer to this one
                last_layer.next_layer = cur_layer
            last_layer = cur_layer

    def fit(self, x_train, y_train, learning_rate=0.1, epochs=100000, shuffle=False):
        """
        Train the network; by default the samples are visited in order.
        Option 1: train on the samples in their original order.
        Option 2: train on the samples in random order.
        :param x_train: input data
        :param y_train: expected output data
        :param learning_rate: learning rate
        :param epochs: number of passes over the training data
        :param shuffle: visit the samples in random order
        """
        indices = np.arange(len(x_train))
        for _ in range(epochs):
            if shuffle:
                np.random.shuffle(indices)
            for i in indices:
                self.layers[0].calc_output(x_train[i])
                self.layers[0].update_weight(learning_rate, y_train[i])

    def predict(self, x):
        return self.layers[0].calc_output(x)
```
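For comparison, here is a compact sketch of the same per-sample (online) training in matrix form, assuming one weight matrix per layer instead of lists of `Neuron` objects. It uses the textbook delta rules with the sigmoid derivative taken at the net input, momentum 0.9, and, like the original code, no bias term. The function names are hypothetical; the sketch only checks that the training error goes down and does not reproduce the exact numbers in the output below.

```python
import numpy as np


def logistic(x):
    return 1 / (1 + np.exp(-x))


def train_matrix_form(data, targets, hidden=3, learning_rate=0.1, epochs=2000, seed=0):
    # Hypothetical matrix-form trainer: one weight matrix per layer, no bias
    rng = np.random.default_rng(seed)
    n_in, n_out = data.shape[1], targets.shape[1]
    W1 = rng.random((hidden, n_in)) * 0.1   # hidden layer, one row per neuron
    W2 = rng.random((n_out, hidden)) * 0.1  # output layer
    dW1_prev = np.zeros_like(W1)
    dW2_prev = np.zeros_like(W2)
    for _ in range(epochs):
        for x, t in zip(data, targets):
            # Forward pass
            h = logistic(W1 @ x)
            z = logistic(W2 @ h)
            # Backward pass, using the weights before this update
            delta_out = (t - z) * z * (1 - z)
            delta_hidden = (W2.T @ delta_out) * h * (1 - h)
            # Weight changes with momentum 0.9
            dW2 = learning_rate * np.outer(delta_out, h) + 0.9 * dW2_prev
            dW1 = learning_rate * np.outer(delta_hidden, x) + 0.9 * dW1_prev
            W2 += dW2
            W1 += dW1
            dW2_prev, dW1_prev = dW2, dW1
    return W1, W2


def mean_squared_error(W1, W2, data, targets):
    z = logistic(logistic(data @ W1.T) @ W2.T)
    return np.mean((targets - z) ** 2)


data = np.eye(8)
# epochs=0 returns the untrained initial weights for the same seed
before = mean_squared_error(*train_matrix_form(data, data, epochs=0), data, data)
after = mean_squared_error(*train_matrix_form(data, data, epochs=2000), data, data)
print(after < before)  # True
```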

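Test code

In the test data the expected output is identical to the input, so this tests the network as an autoencoder. (If you are not familiar with autoencoders, they are easy to look up online.)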

```python
if __name__ == '__main__':
    print("test neural network")
    # Eight one-hot input patterns
    data = np.array([[1, 0, 0, 0, 0, 0, 0, 0],
                     [0, 1, 0, 0, 0, 0, 0, 0],
                     [0, 0, 1, 0, 0, 0, 0, 0],
                     [0, 0, 0, 1, 0, 0, 0, 0],
                     [0, 0, 0, 0, 1, 0, 0, 0],
                     [0, 0, 0, 0, 0, 1, 0, 0],
                     [0, 0, 0, 0, 0, 0, 1, 0],
                     [0, 0, 0, 0, 0, 0, 0, 1]])
    np.set_printoptions(precision=3, suppress=True)
    for run in range(10):
        network = NeuralNetWork([8, 3, 8])
        # Make the expected output equal to the input
        network.fit(data, data, learning_rate=0.1, epochs=10000)
        print("\n\n", run, "result")
        for sample in data:
            print(sample, network.predict(sample))
```
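The test data is simply the 8×8 identity matrix, so as a small sketch it can be generated with `np.eye` instead of being typed out:

```python
import numpy as np

# Row i is the one-hot pattern with a 1 in position i
data = np.eye(8, dtype=int)
print(data[5])           # [0 0 0 0 0 1 0 0]
print(data.sum(axis=1))  # [1 1 1 1 1 1 1 1]
```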

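Output

The results are quite good and match what we expected.

Problem: four of the ten runs (0, 1, 3 and 6) produce an output of only 0.317 where a value close to 1 is expected. This is because compressing 8 patterns into 3 values is a bit of a stretch. Changing the network to [8, 4, 8] avoids this result; feel free to try it.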

```text
/Library/Frameworks/Python.framework/Versions/3.4/bin/python3.4 /XXXX/机器学习/number/NeuralNetwork.py
test neural network

0 result
[1 0 0 0 0 0 0 0] [ 0.987 0. 0.005 0. 0. 0.01 0.004 0. ]
[0 1 0 0 0 0 0 0] [ 0. 0.985 0. 0.006 0. 0.025 0. 0.008]
[0 0 1 0 0 0 0 0] [ 0.007 0. 0.983 0. 0.007 0.027 0. 0. ]
[0 0 0 1 0 0 0 0] [ 0. 0.005 0. 0.985 0.007 0.02 0. 0. ]
[0 0 0 0 1 0 0 0] [ 0. 0. 0.005 0.005 0.983 0.013 0. 0. ]
[0 0 0 0 0 1 0 0] [ 0.016 0.017 0.02 0.018 0.018 0.317 0.023 0.017]
[0 0 0 0 0 0 1 0] [ 0.006 0. 0. 0. 0. 0.026 0.984 0.006]
[0 0 0 0 0 0 0 1] [ 0. 0.005 0. 0. 0. 0.01 0.004 0.985]

1 result
[1 0 0 0 0 0 0 0] [ 0.983 0. 0. 0.007 0.007 0. 0. 0.027]
[0 1 0 0 0 0 0 0] [ 0. 0.986 0.004 0. 0. 0. 0.005 0.01 ]
[0 0 1 0 0 0 0 0] [ 0. 0.005 0.985 0. 0.005 0. 0. 0.026]
[0 0 0 1 0 0 0 0] [ 0.005 0. 0. 0.983 0. 0.006 0. 0.015]
[0 0 0 0 1 0 0 0] [ 0.005 0. 0.004 0. 0.987 0. 0. 0.01 ]
[0 0 0 0 0 1 0 0] [ 0. 0. 0. 0.006 0. 0.984 0.005 0.018]
[0 0 0 0 0 0 1 0] [ 0. 0.008 0. 0. 0. 0.006 0.984 0.027]
[0 0 0 0 0 0 0 1] [ 0.018 0.017 0.025 0.018 0.016 0.018 0.017 0.317]

2 result
[1 0 0 0 0 0 0 0] [ 0.966 0. 0.016 0.014 0. 0. 0. 0. ]
[0 1 0 0 0 0 0 0] [ 0. 0.969 0. 0.016 0. 0. 0. 0.014]
[0 0 1 0 0 0 0 0] [ 0.012 0. 0.969 0. 0. 0.013 0. 0. ]
[0 0 0 1 0 0 0 0] [ 0.014 0.014 0. 0.969 0. 0. 0. 0. ]
[0 0 0 0 1 0 0 0] [ 0. 0. 0. 0. 0.962 0.016 0.02 0. ]
[0 0 0 0 0 1 0 0] [ 0. 0. 0.02 0. 0.016 0.963 0. 0. ]
[0 0 0 0 0 0 1 0] [ 0. 0. 0. 0. 0.012 0. 0.969 0.011]
[0 0 0 0 0 0 0 1] [ 0. 0.014 0. 0. 0. 0. 0.016 0.966]

3 result
[1 0 0 0 0 0 0 0] [ 0.983 0. 0. 0.007 0.027 0. 0. 0.007]
[0 1 0 0 0 0 0 0] [ 0. 0.986 0.004 0. 0.01 0.005 0. 0. ]
[0 0 1 0 0 0 0 0] [ 0. 0.006 0.984 0.006 0.026 0. 0. 0. ]
[0 0 0 1 0 0 0 0] [ 0.005 0. 0.004 0.987 0.01 0. 0. 0. ]
[0 0 0 0 1 0 0 0] [ 0.019 0.017 0.024 0.016 0.317 0.017 0.018 0.018]
[0 0 0 0 0 1 0 0] [ 0. 0.008 0. 0. 0.026 0.984 0.006 0. ]
[0 0 0 0 0 0 1 0] [ 0. 0. 0. 0. 0.019 0.005 0.984 0.007]
[0 0 0 0 0 0 0 1] [ 0.005 0. 0. 0. 0.014 0. 0.005 0.983]

4 result
[1 0 0 0 0 0 0 0] [ 0.969 0.014 0. 0. 0. 0. 0.014 0. ]
[0 1 0 0 0 0 0 0] [ 0.014 0.966 0.016 0. 0. 0. 0. 0. ]
[0 0 1 0 0 0 0 0] [ 0. 0.011 0.969 0. 0. 0.012 0. 0. ]
[0 0 0 1 0 0 0 0] [ 0. 0. 0. 0.966 0. 0. 0.013 0.016]
[0 0 0 0 1 0 0 0] [ 0. 0. 0. 0. 0.963 0.016 0. 0.02 ]
[0 0 0 0 0 1 0 0] [ 0. 0. 0.02 0. 0.016 0.963 0. 0. ]
[0 0 0 0 0 0 1 0] [ 0.016 0. 0. 0.014 0. 0. 0.969 0. ]
[0 0 0 0 0 0 0 1] [ 0. 0. 0. 0.011 0.012 0. 0. 0.969]

5 result
[1 0 0 0 0 0 0 0] [ 0.966 0. 0.016 0. 0. 0.018 0. 0. ]
[0 1 0 0 0 0 0 0] [ 0. 0.969 0.012 0. 0. 0. 0.011 0. ]
[0 0 1 0 0 0 0 0] [ 0.015 0.018 0.964 0. 0. 0. 0. 0. ]
[0 0 0 1 0 0 0 0] [ 0. 0. 0. 0.968 0.013 0. 0. 0.013]
[0 0 0 0 1 0 0 0] [ 0. 0. 0. 0.015 0.965 0.015 0. 0. ]
[0 0 0 0 0 1 0 0] [ 0.013 0. 0. 0. 0.013 0.968 0. 0. ]
[0 0 0 0 0 0 1 0] [ 0. 0.018 0. 0. 0. 0. 0.965 0.014]
[0 0 0 0 0 0 0 1] [ 0. 0. 0. 0.018 0. 0. 0.015 0.967]

6 result
[1 0 0 0 0 0 0 0] [ 0.983 0.006 0. 0.005 0. 0. 0. 0.016]
[0 1 0 0 0 0 0 0] [ 0.006 0.983 0. 0. 0. 0.005 0. 0.017]
[0 0 1 0 0 0 0 0] [ 0. 0. 0.987 0.005 0. 0. 0.004 0.01 ]
[0 0 0 1 0 0 0 0] [ 0.007 0. 0.007 0.983 0. 0. 0. 0.027]
[0 0 0 0 1 0 0 0] [ 0. 0. 0. 0. 0.987 0.005 0.004 0.01 ]
[0 0 0 0 0 1 0 0] [ 0. 0.007 0. 0. 0.008 0.983 0. 0.027]
[0 0 0 0 0 0 1 0] [ 0. 0. 0.005 0. 0.005 0. 0.985 0.026]
[0 0 0 0 0 0 0 1] [ 0.018 0.018 0.017 0.017 0.017 0.017 0.025 0.317]

7 result
[1 0 0 0 0 0 0 0] [ 0.969 0. 0. 0. 0.014 0. 0. 0.015]
[0 1 0 0 0 0 0 0] [ 0. 0.963 0.02 0. 0. 0.017 0. 0. ]
[0 0 1 0 0 0 0 0] [ 0. 0.012 0.969 0. 0. 0. 0.011 0. ]
[0 0 0 1 0 0 0 0] [ 0. 0. 0. 0.969 0.011 0.013 0. 0. ]
[0 0 0 0 1 0 0 0] [ 0.014 0. 0. 0.016 0.966 0. 0. 0. ]
[0 0 0 0 0 1 0 0] [ 0. 0.016 0. 0.02 0. 0.962 0. 0. ]
[0 0 0 0 0 0 1 0] [ 0. 0. 0.016 0. 0. 0. 0.966 0.014]
[0 0 0 0 0 0 0 1] [ 0.015 0. 0. 0. 0. 0. 0.014 0.969]

8 result
[1 0 0 0 0 0 0 0] [ 0.966 0.016 0.013 0. 0. 0. 0. 0. ]
[0 1 0 0 0 0 0 0] [ 0.011 0.969 0. 0. 0. 0. 0.012 0. ]
[0 0 1 0 0 0 0 0] [ 0.014 0. 0.969 0.015 0. 0. 0. 0. ]
[0 0 0 1 0 0 0 0] [ 0. 0. 0.015 0.969 0. 0.014 0. 0. ]
[0 0 0 0 1 0 0 0] [ 0. 0. 0. 0. 0.963 0. 0.016 0.02 ]
[0 0 0 0 0 1 0 0] [ 0. 0. 0. 0.013 0. 0.966 0. 0.016]
[0 0 0 0 0 0 1 0] [ 0. 0.02 0. 0. 0.016 0. 0.963 0. ]
[0 0 0 0 0 0 0 1] [ 0. 0. 0. 0. 0.012 0.011 0. 0.969]

9 result
[1 0 0 0 0 0 0 0] [ 0.969 0. 0. 0. 0. 0.011 0. 0.011]
[0 1 0 0 0 0 0 0] [ 0. 0.968 0. 0. 0. 0. 0.018 0.015]
[0 0 1 0 0 0 0 0] [ 0. 0. 0.965 0. 0.015 0. 0.015 0. ]
[0 0 0 1 0 0 0 0] [ 0. 0. 0. 0.966 0.018 0.016 0. 0. ]
[0 0 0 0 1 0 0 0] [ 0. 0. 0.013 0.013 0.968 0. 0. 0. ]
[0 0 0 0 0 1 0 0] [ 0.018 0. 0. 0.014 0. 0.964 0. 0. ]
[0 0 0 0 0 0 1 0] [ 0. 0.013 0.013 0. 0. 0. 0.968 0. ]
[0 0 0 0 0 0 0 1] [ 0.018 0.014 0. 0. 0. 0. 0. 0.965]

Process finished with exit code 0
```
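Regarding the observation that encoding 8 patterns into 3 values is a stretch, here is a back-of-the-envelope count. It rests on an idealizing assumption (real sigmoid outputs are continuous): if each hidden unit settles near 0 or 1, three units can form only 2³ = 8 distinct codes, exactly as many as there are input patterns, leaving no slack, while four units offer 16.

```python
from itertools import product

# Idealization: each hidden unit outputs roughly 0 or 1, so a code is a tuple of bits
codes_3 = list(product([0, 1], repeat=3))
codes_4 = list(product([0, 1], repeat=4))
n_patterns = 8

print(len(codes_3), len(codes_4))  # 8 16
# Codes left over after assigning one code per pattern
print(len(codes_3) - n_patterns, len(codes_4) - n_patterns)  # 0 8
```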
