0x01 Basics

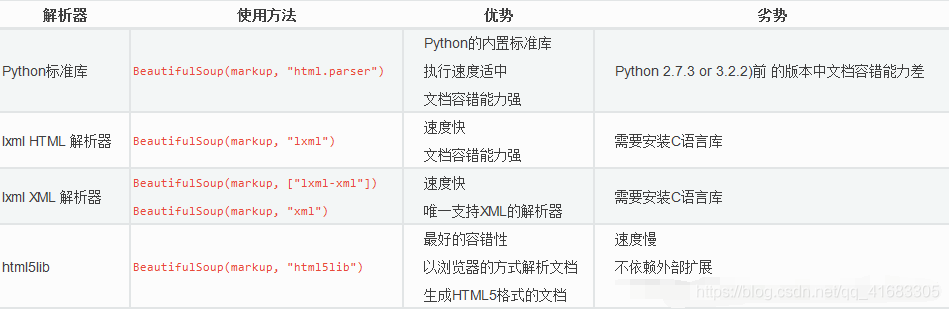

To use bs4 you first need to install it (pip install beautifulsoup4). Then import it:

import bs4

The two most commonly used search methods on a BeautifulSoup object are find and find_all:

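- find(tag name, attributes): returns only the first matching tag, as a Tag object (or None if nothing matches), so you can keep calling find and find_all on the result: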

find(name='table',attrs={'class':'hq_table'})

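- find_all(tag name, attributes): returns all matching tags as a ResultSet, which behaves like a list. You cannot call find or find_all on the list itself, but each element is a Tag, so you can call them on each element: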

find_all(name='tr')
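To see the difference between the two methods without touching a live site, here is a minimal sketch run against a small, made-up HTML string (the table contents are invented for illustration). It shows that find returns a single Tag or None, while find_all returns a list-like ResultSet whose elements you search one by one.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML standing in for a real page
html = """
<table class="hq_table">
  <tr><td>cabbage</td><td>0.50</td></tr>
  <tr><td>potato</td><td>1.20</td></tr>
</table>
<table class="other"><tr><td>ignored</td></tr></table>
"""

soup = BeautifulSoup(html, 'html.parser')

# find: the first matching Tag, or None when nothing matches
table = soup.find(name='table', attrs={'class': 'hq_table'})
missing = soup.find(name='div')

# find_all: a ResultSet (a list subclass) of Tags
rows = table.find_all(name='tr')

# Search inside each element of the ResultSet, not on the ResultSet itself
first_cells = [row.find(name='td').text for row in rows]

# Calling find on the ResultSet raises AttributeError
try:
    rows.find(name='td')
    resultset_has_find = True
except AttributeError:
    resultset_has_find = False

print(type(table).__name__)
print(missing)
print(len(rows))
print(first_cells)
print(resultset_has_find)
```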

0x02 Example

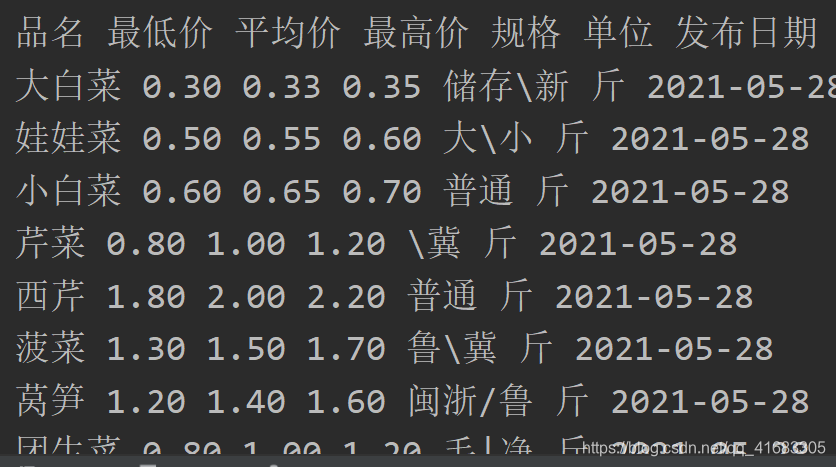

Scrape the vegetable-price information from the page http://www.xinfadi.com.cn/marketanalysis/0/list/1.shtml.

Code:

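import requests
import bs4

url = 'http://www.xinfadi.com.cn/marketanalysis/0/list/1.shtml'
response = requests.get(url)
page_content = response.text
# print(page_content)

# Parse the HTML source with Python's built-in parser
bs_page = bs4.BeautifulSoup(page_content, 'html.parser')
# The price table is the <table> with class "hq_table"
table = bs_page.find(name='table', attrs={'class': 'hq_table'})
# Each <tr> is one row of the table
trs = table.find_all(name='tr')

for tr in trs:
    tds = tr.find_all(name='td')
    for td in tds:
        print(td.text, end=' ')
    print()

page_content is the HTML source of the page we fetched. In bs4.BeautifulSoup(page_content, 'html.parser'), the second argument tells BeautifulSoup which parser to use. 'html.parser' is the HTML parser built into Python's standard library.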

Output:

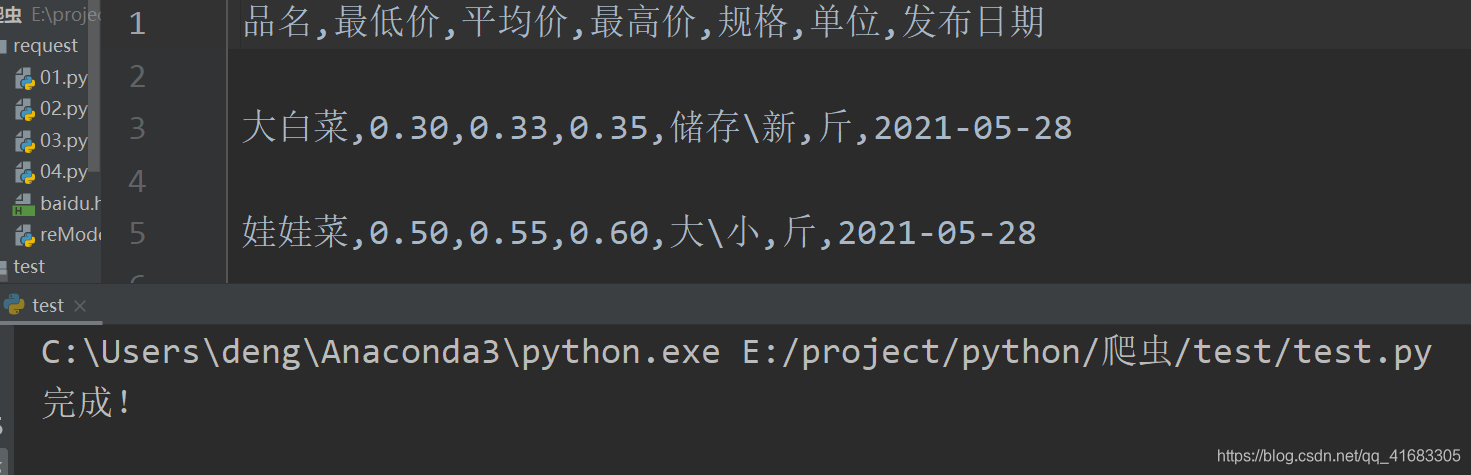
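The live page's layout can change, so it can help to keep the parsing logic in a function that you can test on saved or hand-written HTML. The sketch below shows one way to do that. It is not the original author's code. The function name table_rows and the sample HTML are hypothetical. The sketch also adds three things the script above lacks:

- a None check for a missing table
- skipping rows that contain no <td> cells
- get_text(strip=True) to trim whitespace around cell text

```python
from bs4 import BeautifulSoup

def table_rows(html, table_class='hq_table'):
    """Return the text of every <td> cell, row by row, from the <table> with the given class."""
    soup = BeautifulSoup(html, 'html.parser')
    table = soup.find(name='table', attrs={'class': table_class})
    if table is None:  # no such table, e.g. the page layout changed
        return []
    rows = []
    for tr in table.find_all(name='tr'):
        # get_text(strip=True) trims whitespace around each cell's text
        cells = [td.get_text(strip=True) for td in tr.find_all(name='td')]
        if cells:  # skip rows with no <td>, e.g. a header row built from <th>
            rows.append(cells)
    return rows

# Hypothetical sample HTML, not copied from the real site
sample = """
<table class="hq_table">
  <tr><th>name</th><th>low</th></tr>
  <tr><td> cabbage </td><td>0.50</td></tr>
  <tr><td>potato</td><td>1.20</td></tr>
</table>
"""

for row in table_rows(sample):
    print(' '.join(row))
```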

Next, we store this data in a CSV file.

Code:

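import requests
import bs4
import csv

url = 'http://www.xinfadi.com.cn/marketanalysis/0/list/1.shtml'
response = requests.get(url)
page_content = response.text
# print(page_content)

bs_page = bs4.BeautifulSoup(page_content, 'html.parser')
table = bs_page.find(name='table', attrs={'class': 'hq_table'})
trs = table.find_all(name='tr')

# 菜价.csv = "vegetable prices"; newline='' is what the csv module expects
# (without it, extra blank lines appear between rows on Windows)
with open('菜价.csv', 'w', encoding='utf-8', newline='') as fl:
    csvwrite = csv.writer(fl)
    for tr in trs:
        tds = tr.find_all(name='td')
        if len(tds) < 7:  # skip rows that lack the 7 expected cells
            continue
        name = tds[0].text        # product name
        price_low = tds[1].text   # lowest price
        price_ave = tds[2].text   # average price
        price_high = tds[3].text  # highest price
        norm = tds[4].text        # specification
        unit = tds[5].text        # unit
        date = tds[6].text        # release date
        csvwrite.writerow([name, price_low, price_ave, price_high, norm, unit, date])

print('完成!')  # "Done!"

Result: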

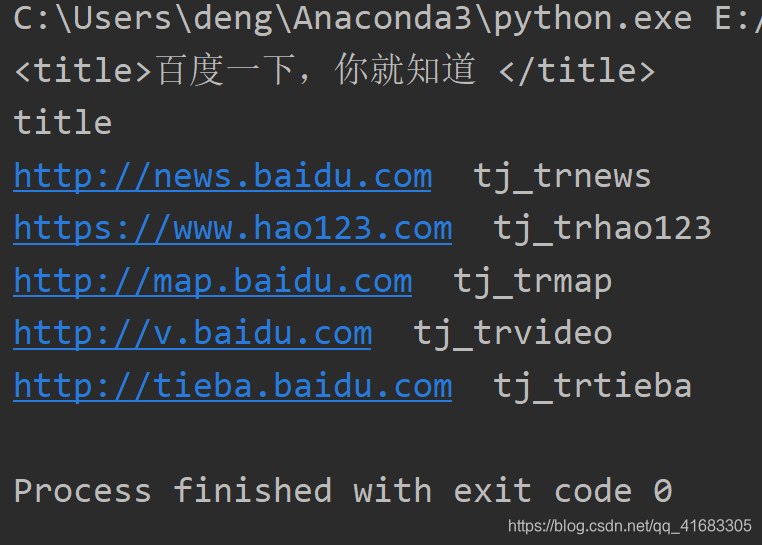
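The csv module also quotes fields for you. That is the main reason to use csv.writer rather than joining fields with commas yourself. The sketch below uses hypothetical rows in the scraper's column order, and one field contains a comma. It differs from the script above in two ways:

- It writes to an in-memory io.StringIO instead of a real file, so it can run anywhere.
- It adds a header row that the original script does not write.

It then reads the result back with csv.reader.

```python
import csv
import io

# Hypothetical rows in the same 7-column order as the scraper
header = ['name', 'price_low', 'price_ave', 'price_high', 'norm', 'unit', 'date']
rows = [
    ['cabbage', '0.40', '0.50', '0.60', 'regular', 'kg', '2021-06-01'],
    ['potato', '1.00', '1.20', '1.40', 'large, washed', 'kg', '2021-06-01'],
]

# In-memory text buffer standing in for open(..., 'w', encoding='utf-8', newline='')
buffer = io.StringIO(newline='')
writer = csv.writer(buffer)
writer.writerow(header)  # optional header row
writer.writerows(rows)   # each inner list becomes one CSV line

# The field containing a comma is wrapped in quotes automatically
print(buffer.getvalue())

# Read the CSV text back to check the round trip
buffer.seek(0)
read_back = list(csv.reader(buffer))
print(read_back[2][4])
```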

0x03 Getting a Tag's Attribute Values

Page: we want the href and name values of the a tags in the following HTML (the code below reads it from 1.html):

<!DOCTYPE html>

<html>

<head><meta content="text/html;charset=utf-8" http-equiv="content-type" /><meta content="IE=Edge" http-equiv="X-UA-Compatible" /><meta content="always" name="referrer" /><link href="https://ss1.bdstatic.com/5eN1bjq8AAUYm2zgoY3K/r/www/cache/bdorz/baidu.min.css" rel="stylesheet" type="text/css" /><title>百度一下,你就知道 </title>

</head>

<body link="#0000cc"><div id="wrapper"><div id="head"><div class="head_wrapper"><div id="u1"><a class="mnav" href="http://news.baidu.com" name="tj_trnews">新闻 </a><a class="mnav" href="https://www.hao123.com" name="tj_trhao123">hao123 </a><a class="mnav" href="http://map.baidu.com" name="tj_trmap">地图 </a><a class="mnav" href="http://v.baidu.com" name="tj_trvideo">视频 </a><a class="mnav" href="http://tieba.baidu.com" name="tj_trtieba">贴吧 </a><a class="bri" href="//www.baidu.com/more/" name="tj_briicon" style="display: block;">更多产品 </a></div></div></div></div>

</body>

</html>

- To get a tag's attribute, subscript the tag with the attribute name. For example, if a is an a tag we have found, a['href'] is its link target.

Code:

from bs4 import BeautifulSoup

with open('1.html', 'r', encoding='utf-8') as f:
    html = f.read()

soup = BeautifulSoup(html, 'html.parser')
print(soup.title)       # the whole <title> tag, including its text
print(soup.title.name)  # the tag's name: title

# All <a> tags whose class is "mnav"
a = soup.find_all(name='a', attrs={'class': 'mnav'})
for i in a:
    print(i['href'], i['name'], sep=' ')
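Subscripting is not the only way to read attributes. The sketch below uses two a tags trimmed from the page above. It compares three approaches:

- tag['attr'] raises KeyError when the attribute is missing.
- tag.get('attr') returns None (or a default you pass) when the attribute is missing.
- tag.attrs holds all of a tag's attributes as a dict.

Note that class is a multi-valued attribute, so bs4 returns it as a list.

```python
from bs4 import BeautifulSoup

# Two <a> tags trimmed from the page above
html = ('<a class="mnav" href="http://news.baidu.com" name="tj_trnews">新闻 </a>'
        '<a class="bri" href="//www.baidu.com/more/">更多产品 </a>')
soup = BeautifulSoup(html, 'html.parser')
news, more = soup.find_all(name='a')

print(news['href'])   # subscript returns the attribute's value
print(news['class'])  # class is multi-valued, so it comes back as a list
print(news.attrs)     # every attribute of the tag, as a dict

# This trimmed tag has no name attribute
print(more.get('name'))          # .get() returns None for a missing attribute
print(more.get('name', 'n/a'))   # ...or the default you pass
try:
    more['name']                 # subscript raises KeyError instead
except KeyError:
    print('KeyError: no name attribute')
```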
