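1. Introduction

Apache Hudi supports datasets with several kinds of partitioning: multi-level partitions, single-field partitions, date-based partitions, and non-partitioned datasets. You can pick whichever layout fits your needs. The sections below explain how to configure Hudi for each type of partitioning.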

2. Partition Handling

To show how Hudi handles each partition type, assume the schema written to Hudi is as follows:

```json
{
  "type": "record",
  "name": "HudiSchemaDemo",
  "namespace": "hoodie.HudiSchemaDemo",
  "fields": [
    { "name": "age",      "type": ["long", "null"] },
    { "name": "location", "type": ["string", "null"] },
    { "name": "name",     "type": ["string", "null"] },
    { "name": "sex",      "type": ["string", "null"] },
    { "name": "ts",       "type": ["long", "null"] },
    { "name": "date",     "type": ["string", "null"] }
  ]
}
```

A sample record looks like this:

```json
{
  "name": "zhangsan",
  "ts": 1574297893837,
  "age": 16,
  "location": "beijing",
  "sex": "male",
  "date": "2020/08/16"
}
```

2.1 Single-Field Partitioning

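Single-field partitioning uses exactly one field as the partition field. There are two cases: a non-date field (such as location) and a date-formatted field (such as date).

2.1.1 Partitioning by a Non-Date Field

To use the location field above as the partition field, configure the write to Hudi and the sync to Hive as follows: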

```java
import static org.apache.hudi.QuickstartUtils.getQuickstartWriteConfigs;
import static org.apache.hudi.config.HoodieWriteConfig.TABLE_NAME;

import org.apache.hudi.DataSourceWriteOptions;

// partitionFields, keyGenerator, hivePartitionFields and hivePartitionExtractorClass
// change with each partitioning scenario; tableName, basePath and saveMode are job parameters.
df.write().format("org.apache.hudi")
    .options(getQuickstartWriteConfigs())
    .option(DataSourceWriteOptions.TABLE_TYPE_OPT_KEY(), "COPY_ON_WRITE")
    .option(DataSourceWriteOptions.PRECOMBINE_FIELD_OPT_KEY(), "ts")
    .option(DataSourceWriteOptions.RECORDKEY_FIELD_OPT_KEY(), "name")
    // How Hudi lays out partition directories
    .option(DataSourceWriteOptions.PARTITIONPATH_FIELD_OPT_KEY(), partitionFields)
    .option(DataSourceWriteOptions.KEYGENERATOR_CLASS_OPT_KEY(), keyGenerator)
    .option(TABLE_NAME, tableName)
    // Hive sync settings
    .option("hoodie.datasource.hive_sync.enable", true)
    .option("hoodie.datasource.hive_sync.table", tableName)
    .option("hoodie.datasource.hive_sync.username", "root")
    .option("hoodie.datasource.hive_sync.password", "123456")
    .option("hoodie.datasource.hive_sync.jdbcurl", "jdbc:hive2://localhost:10000")
    .option("hoodie.datasource.hive_sync.partition_fields", hivePartitionFields)
    // Same key as TABLE_TYPE_OPT_KEY above; redundant but harmless
    .option("hoodie.datasource.write.table.type", "COPY_ON_WRITE")
    .option("hoodie.embed.timeline.server", false)
    .option("hoodie.datasource.hive_sync.partition_extractor_class", hivePartitionExtractorClass)
    .mode(saveMode)
    .save(basePath);
```

Pay attention to the following options:

- `DataSourceWriteOptions.PARTITIONPATH_FIELD_OPT_KEY()` is set to `location`;
- `hoodie.datasource.hive_sync.partition_fields` is set to `location`, the same as the partition field written to Hudi;
- `DataSourceWriteOptions.KEYGENERATOR_CLASS_OPT_KEY()` is set to `org.apache.hudi.keygen.SimpleKeyGenerator`, or left unset, since it defaults to `org.apache.hudi.keygen.SimpleKeyGenerator`;
- `hoodie.datasource.hive_sync.partition_extractor_class` is set to `org.apache.hudi.hive.MultiPartKeysValueExtractor`.
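To make the effect of `SimpleKeyGenerator` concrete, here is a hypothetical plain-Java sketch. It is not Hudi's source, and `SingleFieldPartitionSketch`, `partitionPath` and `partitionDir` are invented names. The sketch assumes the simplest behavior: the partition path is the string value of the one configured field, and data files go under `basePath/<partitionPath>`.

```java
import java.util.Map;

// Hypothetical sketch, not Hudi source: a SimpleKeyGenerator-style rule where the
// partition path is just the string value of a single configured field.
class SingleFieldPartitionSketch {

    // Partition path = the field's value rendered as a string.
    static String partitionPath(Map<String, Object> record, String partitionField) {
        return String.valueOf(record.get(partitionField));
    }

    // Directory under the table's base path where the record's files would land.
    static String partitionDir(String basePath, String partitionPath) {
        return basePath + "/" + partitionPath;
    }

    public static void main(String[] args) {
        Map<String, Object> record = Map.of(
                "name", "zhangsan", "ts", 1574297893837L, "age", 16L,
                "location", "beijing", "sex", "male", "date", "2020/08/16");
        String path = partitionPath(record, "location");
        System.out.println(partitionDir("/tmp/hudi-partitions/notDateFormatSinglePartitionDemo", path));
        // Using "date" instead yields "2020/08/16", i.e. three nested directories.
        System.out.println(partitionPath(record, "date"));
    }
}
```

With `location` there is one directory level per distinct value, so the Hive table gets a single `location` partition column.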

The table that the Hive sync creates is:

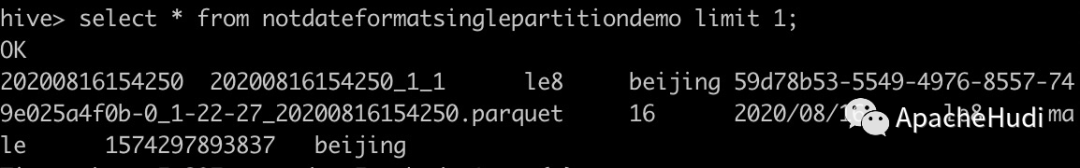

```sql
CREATE EXTERNAL TABLE `notdateformatsinglepartitiondemo`(
  `_hoodie_commit_time` string,
  `_hoodie_commit_seqno` string,
  `_hoodie_record_key` string,
  `_hoodie_partition_path` string,
  `_hoodie_file_name` string,
  `age` bigint,
  `date` string,
  `name` string,
  `sex` string,
  `ts` bigint)
PARTITIONED BY (
  `location` string)
ROW FORMAT SERDE
  'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'
STORED AS INPUTFORMAT
  'org.apache.hudi.hadoop.HoodieParquetInputFormat'
OUTPUTFORMAT
  'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'
LOCATION
  'file:/tmp/hudi-partitions/notDateFormatSinglePartitionDemo'
TBLPROPERTIES (
  'last_commit_time_sync'='20200816154250',
  'transient_lastDdlTime'='1597563780')
```

Query the table notdateformatsinglepartitiondemo:

Tip: before querying, put hudi-hive-sync-bundle-xxx.jar under $HIVE_HOME/lib.

2.1.2 Partitioning by a Date-Formatted Field

To use the date field above as the partition field, the key options are:

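- `DataSourceWriteOptions.PARTITIONPATH_FIELD_OPT_KEY()` is set to `date`;
- `hoodie.datasource.hive_sync.partition_fields` is set to `date`, the same as the partition field written to Hudi;
- `DataSourceWriteOptions.KEYGENERATOR_CLASS_OPT_KEY()` is set to `org.apache.hudi.keygen.SimpleKeyGenerator`, or left unset, since it defaults to `org.apache.hudi.keygen.SimpleKeyGenerator`;
- `hoodie.datasource.hive_sync.partition_extractor_class` is set to `org.apache.hudi.hive.SlashEncodedDayPartitionValueExtractor`. Because the value `2020/08/16` is used verbatim as the partition path, the data lands in nested `2020/08/16` directories, and this extractor turns that path back into the single Hive partition value `2020-08-16`.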
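The date-to-Hive-value conversion can be sketched in plain Java. This is a hypothetical illustration of what a `SlashEncodedDayPartitionValueExtractor`-style extractor does, not Hudi's actual implementation. The class name `SlashDateExtractorSketch` is invented; only the method name `extractPartitionValuesInPath` matches the method Hudi's extractors implement. The sketch assumes the path must have exactly three numeric `yyyy/mm/dd` segments and rejects anything else.

```java
import java.time.LocalDate;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch, not Hudi source: turn a "yyyy/mm/dd" partition path into
// a single Hive partition value formatted as "yyyy-MM-dd".
class SlashDateExtractorSketch {

    static List<String> extractPartitionValuesInPath(String partitionPath) {
        String[] parts = partitionPath.split("/");
        if (parts.length != 3) {
            throw new IllegalArgumentException(
                    "Partition path " + partitionPath + " is not in the form yyyy/mm/dd");
        }
        // Integer.parseInt throws NumberFormatException (an IllegalArgumentException)
        // for non-numeric segments; LocalDate.of rejects impossible dates.
        LocalDate day = LocalDate.of(
                Integer.parseInt(parts[0]), Integer.parseInt(parts[1]), Integer.parseInt(parts[2]));
        // LocalDate.toString() prints ISO-8601, i.e. yyyy-MM-dd.
        return Collections.singletonList(day.toString());
    }

    public static void main(String[] args) {
        System.out.println(extractPartitionValuesInPath("2020/08/16")); // [2020-08-16]
    }
}
```

The three directory levels collapse into one value. That is why the Hive table in this scenario has a single `date` partition column rather than three.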

The table that the Hive sync creates is:

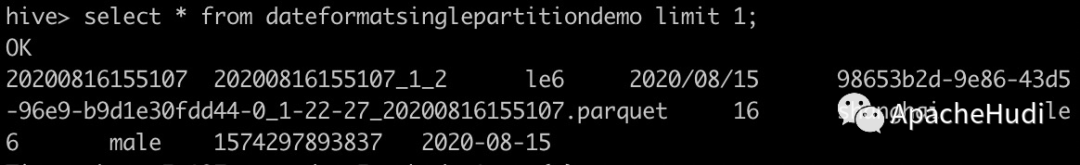

```sql
CREATE EXTERNAL TABLE `dateformatsinglepartitiondemo`(
  `_hoodie_commit_time` string,
  `_hoodie_commit_seqno` string,
  `_hoodie_record_key` string,
  `_hoodie_partition_path` string,
  `_hoodie_file_name` string,
  `age` bigint,
  `location` string,
  `name` string,
  `sex` string,
  `ts` bigint)
PARTITIONED BY (
  `date` string)
ROW FORMAT SERDE
  'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'
STORED AS INPUTFORMAT
  'org.apache.hudi.hadoop.HoodieParquetInputFormat'
OUTPUTFORMAT
  'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'
LOCATION
  'file:/tmp/hudi-partitions/dateFormatSinglePartitionDemo'
TBLPROPERTIES (
  'last_commit_time_sync'='20200816155107',
  'transient_lastDdlTime'='1597564276')
```

Query the table dateformatsinglepartitiondemo:

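2.2 Multi-Field Partitioning

Multi-field partitioning uses several fields as partition fields. For example, to partition by the location and sex fields above, the key options are: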

- `DataSourceWriteOptions.PARTITIONPATH_FIELD_OPT_KEY()` is set to `location,sex`;
- `hoodie.datasource.hive_sync.partition_fields` is set to `location,sex`, the same as the partition fields written to Hudi;
- `DataSourceWriteOptions.KEYGENERATOR_CLASS_OPT_KEY()` is set to `org.apache.hudi.keygen.ComplexKeyGenerator`;
- `hoodie.datasource.hive_sync.partition_extractor_class` is set to `org.apache.hudi.hive.MultiPartKeysValueExtractor`.
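The round trip between the two classes can be sketched as follows. This is a hypothetical plain-Java model, not Hudi's source, and `MultiFieldPartitionSketch` and its methods are invented names. It assumes that a `ComplexKeyGenerator`-style generator joins the field values with `/` in the configured order. It also assumes that a `MultiPartKeysValueExtractor`-style extractor splits the path back into one value per segment. Because Hive sync pairs those values with `partition_fields` by position, the order `location,sex` should match on both sides.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

// Hypothetical sketch, not Hudi source: multi-field partition path and its
// positional split back into Hive partition values.
class MultiFieldPartitionSketch {

    // Join the values of the partition fields, in order, with '/'.
    static String partitionPath(Map<String, Object> record, List<String> partitionFields) {
        List<String> values = new ArrayList<>();
        for (String field : partitionFields) {
            values.add(String.valueOf(record.get(field)));
        }
        return String.join("/", values);
    }

    // One Hive partition value per '/'-separated segment, in the same order.
    static List<String> hivePartitionValues(String partitionPath) {
        return Arrays.asList(partitionPath.split("/"));
    }

    public static void main(String[] args) {
        Map<String, Object> record = Map.of("location", "beijing", "sex", "male");
        String path = partitionPath(record, List.of("location", "sex"));
        System.out.println(path);                      // beijing/male
        System.out.println(hivePartitionValues(path)); // [beijing, male]
    }
}
```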

The table that the Hive sync creates is:

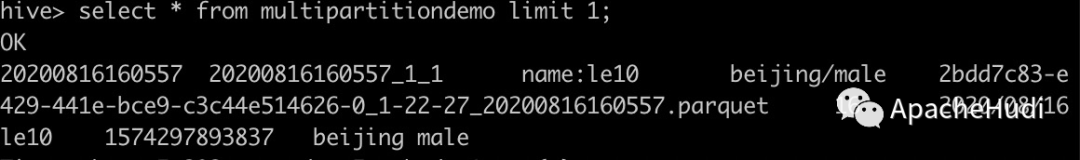

```sql
CREATE EXTERNAL TABLE `multipartitiondemo`(
  `_hoodie_commit_time` string,
  `_hoodie_commit_seqno` string,
  `_hoodie_record_key` string,
  `_hoodie_partition_path` string,
  `_hoodie_file_name` string,
  `age` bigint,
  `date` string,
  `name` string,
  `ts` bigint)
PARTITIONED BY (
  `location` string,
  `sex` string)
ROW FORMAT SERDE
  'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'
STORED AS INPUTFORMAT
  'org.apache.hudi.hadoop.HoodieParquetInputFormat'
OUTPUTFORMAT
  'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'
LOCATION
  'file:/tmp/hudi-partitions/multiPartitionDemo'
TBLPROPERTIES (
  'last_commit_time_sync'='20200816160557',
  'transient_lastDdlTime'='1597565166')
```

Query the table multipartitiondemo:

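2.3 Non-Partitioned

In the non-partitioned case there is no partition field, so the dataset written to Hudi has no partitions. The key options are: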

- `DataSourceWriteOptions.PARTITIONPATH_FIELD_OPT_KEY()` is set to an empty string;
- `hoodie.datasource.hive_sync.partition_fields` is set to an empty string, matching the (empty) partition field written to Hudi;
- `DataSourceWriteOptions.KEYGENERATOR_CLASS_OPT_KEY()` is set to `org.apache.hudi.keygen.NonpartitionedKeyGenerator`;
- `hoodie.datasource.hive_sync.partition_extractor_class` is set to `org.apache.hudi.hive.NonPartitionedExtractor`.
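The scenario-specific settings can also be kept in one map and passed to Spark's `DataFrameWriter.options(java.util.Map<String, String>)`, so they don't have to be spread across individual `.option(...)` calls. The sketch below assumes Hudi 0.6.x. In that version, `PARTITIONPATH_FIELD_OPT_KEY()` resolves to `hoodie.datasource.write.partitionpath.field` and `KEYGENERATOR_CLASS_OPT_KEY()` resolves to `hoodie.datasource.write.keygenerator.class`. Verify these keys against your Hudi version. `NonPartitionedOptionsSketch` is a hypothetical helper name.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: the non-partitioned settings expressed as raw option keys
// (assumed Hudi 0.6.x key names behind the DataSourceWriteOptions constants).
class NonPartitionedOptionsSketch {

    static Map<String, String> nonPartitionedOptions() {
        Map<String, String> opts = new LinkedHashMap<>();
        // PARTITIONPATH_FIELD_OPT_KEY(): no partition field
        opts.put("hoodie.datasource.write.partitionpath.field", "");
        // KEYGENERATOR_CLASS_OPT_KEY(): key generator for non-partitioned tables
        opts.put("hoodie.datasource.write.keygenerator.class",
                "org.apache.hudi.keygen.NonpartitionedKeyGenerator");
        // Hive sync: no partition columns, matching extractor
        opts.put("hoodie.datasource.hive_sync.partition_fields", "");
        opts.put("hoodie.datasource.hive_sync.partition_extractor_class",
                "org.apache.hudi.hive.NonPartitionedExtractor");
        return opts;
    }

    public static void main(String[] args) {
        nonPartitionedOptions().forEach((k, v) -> System.out.println(k + " = '" + v + "'"));
    }
}
```

In a Spark job the map would be applied with something like `df.write().format("org.apache.hudi").options(NonPartitionedOptionsSketch.nonPartitionedOptions())`, followed by the remaining options from the write example.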

The table that the Hive sync creates is:

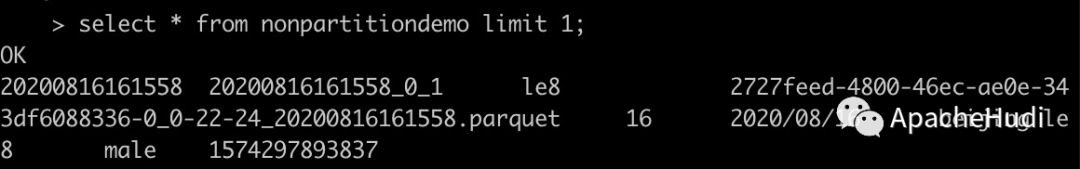

```sql
CREATE EXTERNAL TABLE `nonpartitiondemo`(
  `_hoodie_commit_time` string,
  `_hoodie_commit_seqno` string,
  `_hoodie_record_key` string,
  `_hoodie_partition_path` string,
  `_hoodie_file_name` string,
  `age` bigint,
  `date` string,
  `location` string,
  `name` string,
  `sex` string,
  `ts` bigint)
ROW FORMAT SERDE
  'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'
STORED AS INPUTFORMAT
  'org.apache.hudi.hadoop.HoodieParquetInputFormat'
OUTPUTFORMAT
  'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'
LOCATION
  'file:/tmp/hudi-partitions/nonPartitionDemo'
TBLPROPERTIES (
  'last_commit_time_sync'='20200816161558',
  'transient_lastDdlTime'='1597565767')
```

Query the table nonpartitiondemo:

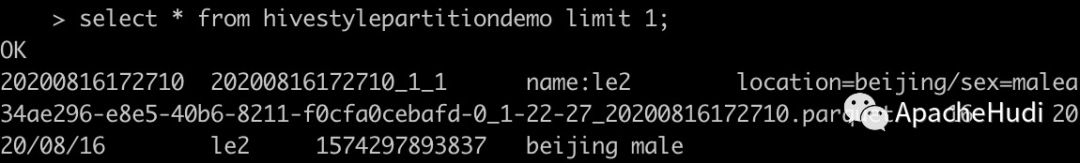

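2.4 Hive-Style Partitioning

Besides the common layouts above, Hudi also supports Hive-style partition paths of the form location=beijing/sex=male. With location and sex as the partition fields, the key options are: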

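- `DataSourceWriteOptions.PARTITIONPATH_FIELD_OPT_KEY()` is set to `location,sex`;
- `hoodie.datasource.hive_sync.partition_fields` is set to `location,sex`, the same as the partition fields written to Hudi;
- `DataSourceWriteOptions.KEYGENERATOR_CLASS_OPT_KEY()` is set to `org.apache.hudi.keygen.ComplexKeyGenerator`;
- `hoodie.datasource.hive_sync.partition_extractor_class` is set to `org.apache.hudi.hive.MultiPartKeysValueExtractor`, which strips the `field=` prefix from each path segment. `SlashEncodedDayPartitionValueExtractor` only accepts `yyyy/mm/dd` paths and would reject `location=beijing/sex=male`;
- `DataSourceWriteOptions.HIVE_STYLE_PARTITIONING_OPT_KEY()` is set to `true`.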
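The hive-style switch changes only how each path segment is written, as `field=value` instead of `value`. The sketch below is a hypothetical plain-Java model of that layout and of a `MultiPartKeysValueExtractor`-style extractor that drops everything up to the first `=` in each segment. It is not Hudi's source, and `HiveStylePartitionSketch` and its methods are invented names. Segments without `=` are kept as-is, so the same extractor also handles the plain `beijing/male` layout.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical sketch, not Hudi source: hive-style partition paths and the
// extraction of Hive partition values from them.
class HiveStylePartitionSketch {

    // Each segment is "field=value"; segments are joined with '/'.
    static String hiveStylePath(Map<String, Object> record, List<String> partitionFields) {
        List<String> segments = new ArrayList<>();
        for (String field : partitionFields) {
            segments.add(field + "=" + record.get(field));
        }
        return String.join("/", segments);
    }

    // Keep only the part after '=' in each segment; plain segments pass through.
    static List<String> hivePartitionValues(String partitionPath) {
        List<String> values = new ArrayList<>();
        for (String segment : partitionPath.split("/")) {
            int eq = segment.indexOf('=');
            values.add(eq >= 0 ? segment.substring(eq + 1) : segment);
        }
        return values;
    }

    public static void main(String[] args) {
        Map<String, Object> record = Map.of("location", "beijing", "sex", "male");
        String path = hiveStylePath(record, List.of("location", "sex"));
        System.out.println(path);                      // location=beijing/sex=male
        System.out.println(hivePartitionValues(path)); // [beijing, male]
    }
}
```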

The resulting Hudi dataset has a directory layout like this:

```
/location=beijing/sex=male
```

The table that the Hive sync creates is:

```sql
CREATE EXTERNAL TABLE `hivestylepartitiondemo`(
  `_hoodie_commit_time` string,
  `_hoodie_commit_seqno` string,
  `_hoodie_record_key` string,
  `_hoodie_partition_path` string,
  `_hoodie_file_name` string,
  `age` bigint,
  `date` string,
  `name` string,
  `ts` bigint)
PARTITIONED BY (
  `location` string,
  `sex` string)
ROW FORMAT SERDE
  'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'
STORED AS INPUTFORMAT
  'org.apache.hudi.hadoop.HoodieParquetInputFormat'
OUTPUTFORMAT
  'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'
LOCATION
  'file:/tmp/hudi-partitions/hiveStylePartitionDemo'
TBLPROPERTIES (
  'last_commit_time_sync'='20200816172710',
  'transient_lastDdlTime'='1597570039')
```

Query the table hivestylepartitiondemo:

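3. Summary

This article showed how Hudi handles different partitioning scenarios. The key generator and partition extractor classes configured above cover the vast majority of use cases. Hudi is also flexible enough to accept custom implementations; see the KeyGenerator and PartitionValueExtractor classes. Every generator that produces partition paths for data written to Hudi is a subclass of KeyGenerator, and every extractor that parses partition values for the Hive sync implements PartitionValueExtractor. All of the example code above is available at https://github.com/leesf/hudi-demos. The repository will keep adding Hudi demos to help developers get up to speed quickly and build enterprise-grade data lakes. Stars and forks are welcome.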
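A custom extractor only needs to map a partition path to an ordered list of Hive partition values. The sketch below is hypothetical. `PartitionValueExtractor` here is a local stand-in that mirrors the single method of `org.apache.hudi.hive.PartitionValueExtractor`, `List<String> extractPartitionValuesInPath(String partitionPath)`, so the code compiles without Hudi. `LocationDayPartitionValueExtractor` is an invented example class. It assumes paths such as `beijing/2020/08/16`, which `ComplexKeyGenerator` would produce for the partition fields `location,date` with the sample record. It returns two values, a location and a `yyyy-MM-dd` day, for Hive partition fields `location,date`.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical custom extractor for paths like "beijing/2020/08/16":
// the first segment is a plain value, the remaining three form a date.
class LocationDayPartitionValueExtractor implements PartitionValueExtractor {

    @Override
    public List<String> extractPartitionValuesInPath(String partitionPath) {
        String[] parts = partitionPath.split("/");
        if (parts.length != 4) {
            throw new IllegalArgumentException(
                    "Partition path " + partitionPath + " is not in the form location/yyyy/mm/dd");
        }
        String day = String.format("%s-%s-%s", parts[1], parts[2], parts[3]);
        return Arrays.asList(parts[0], day);
    }

    public static void main(String[] args) {
        PartitionValueExtractor extractor = new LocationDayPartitionValueExtractor();
        System.out.println(extractor.extractPartitionValuesInPath("beijing/2020/08/16"));
    }
}

// Local stand-in for org.apache.hudi.hive.PartitionValueExtractor (same single method).
interface PartitionValueExtractor {
    List<String> extractPartitionValuesInPath(String partitionPath);
}
```

To use a real extractor, implement Hudi's interface instead of the stand-in, put the class on the sync classpath, and set `hoodie.datasource.hive_sync.partition_extractor_class` to its fully qualified name.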

