1. Here is the official example code, with the adjustments it needed before it would run.

    package com.test

    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.mllib.classification.{LogisticRegressionModel, LogisticRegressionWithLBFGS}
    import org.apache.spark.mllib.evaluation.MulticlassMetrics
    import org.apache.spark.mllib.regression.LabeledPoint
    import org.apache.spark.mllib.util.MLUtils

    object logsitiRcongin {
      def main(args: Array[String]): Unit = {
        // Run Spark locally in a single JVM.
        val conf = new SparkConf().setMaster("local").setAppName("df")
        val sc = new SparkContext(conf)

        // Load training data in LIBSVM format.
        val data = MLUtils.loadLibSVMFile(sc, "E:\\spackLearn\\spark-2.3.3-bin-hadoop2.7\\data\\mllib\\sample_libsvm_data.txt")

        // Split data into training (60%) and test (40%).
        val splits = data.randomSplit(Array(0.6, 0.4), seed = 11L)
        val training = splits(0).cache()
        val test = splits(1)

        // Run training algorithm to build the model.
        val model = new LogisticRegressionWithLBFGS()
          .setNumClasses(10)
          .run(training)

        // Compute (prediction, label) pairs on the test set.
        val predictionAndLabels = test.map { case LabeledPoint(label, features) =>
          val prediction = model.predict(features)
          (prediction, label)
        }

        // Get evaluation metrics.
        val metrics = new MulticlassMetrics(predictionAndLabels)
        val accuracy = metrics.accuracy
        println(s"Final score: Accuracy = $accuracy")

        // Save and load model.
        model.save(sc, "data/model/scalaLogisticRegressionWithLBFGSModel")
        val sameModel = LogisticRegressionModel.load(sc, "data/model/scalaLogisticRegressionWithLBFGSModel")

        // Keep the driver alive so the Spark UI can still be inspected.
        while (true) {}
      }
    }
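
To make the `Accuracy` figure less opaque, here is a minimal sketch in plain Scala (no Spark) of what that number represents: the fraction of `(prediction, label)` pairs whose two values are equal. The object name `AccuracySketch`, the helper `accuracy`, and the sample pairs are made up for illustration. They are not part of the official example, and this is not how `MulticlassMetrics` is implemented internally.

```scala
object AccuracySketch {
  // Fraction of (prediction, label) pairs in which the prediction equals the label.
  // Returns 0.0 for an empty input to avoid dividing by zero.
  def accuracy(predictionAndLabels: Seq[(Double, Double)]): Double =
    if (predictionAndLabels.isEmpty) 0.0
    else predictionAndLabels.count { case (p, l) => p == l }.toDouble / predictionAndLabels.size

  def main(args: Array[String]): Unit = {
    // Hypothetical pairs, invented for this sketch (not output of the model above).
    val pairs = Seq((1.0, 1.0), (0.0, 0.0), (1.0, 0.0), (0.0, 0.0))
    // Three of the four predictions match their labels.
    println(s"Accuracy = ${accuracy(pairs)}") // prints "Accuracy = 0.75"
  }
}
```

In the Spark program, the same pairs live in an RDD, and `metrics.accuracy` performs the equivalent count in a distributed way.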

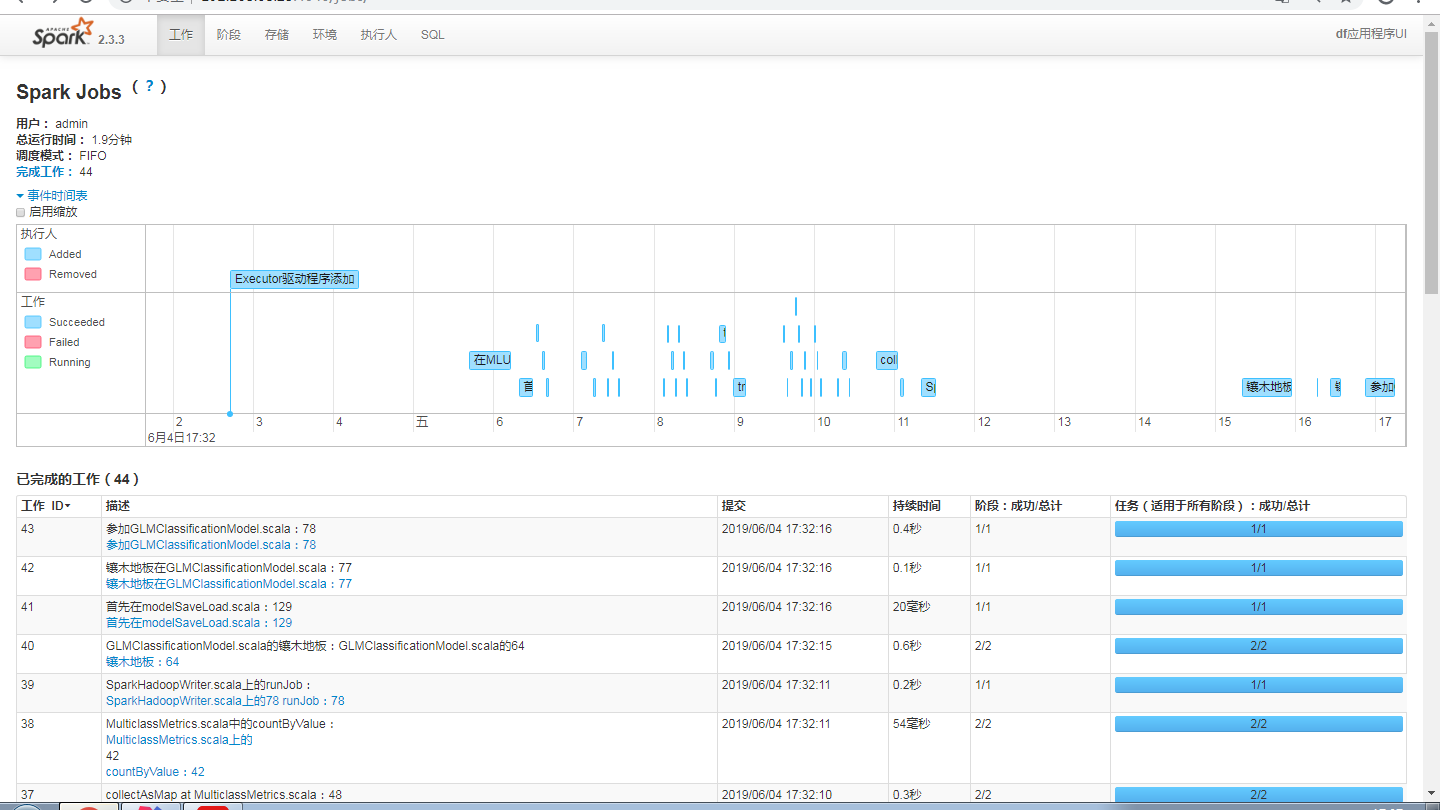

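Finally, view the task scheduling in the Spark web UI (by default at http://localhost:4040 while the application is running).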
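
The `while (true){}` at the end of the program is there only to keep the driver, and with it the web UI, alive, but a busy loop keeps one CPU core spinning. One possible alternative, sketched here in plain Scala under the assumption that the program is started from a console, is to block on a line of input instead. `KeepAliveSketch` and `waitForEnter` are hypothetical names introduced for this sketch.

```scala
import java.io.{BufferedReader, InputStreamReader}

object KeepAliveSketch {
  // Blocks until one line (or end of input) is read from `in`.
  // Returns Some(line), or None if the input was already at its end.
  // Unlike `while (true) {}`, a blocking read does not spin a CPU core.
  def waitForEnter(in: BufferedReader): Option[String] = Option(in.readLine())

  def main(args: Array[String]): Unit = {
    println("Job finished; inspect the web UI, then press Enter to exit.")
    waitForEnter(new BufferedReader(new InputStreamReader(System.in)))
    // In the Spark program, this is where sc.stop() would be called.
  }
}
```

If the program runs without an attached console, the read returns immediately at end of input, so this approach only suits interactive runs.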
