
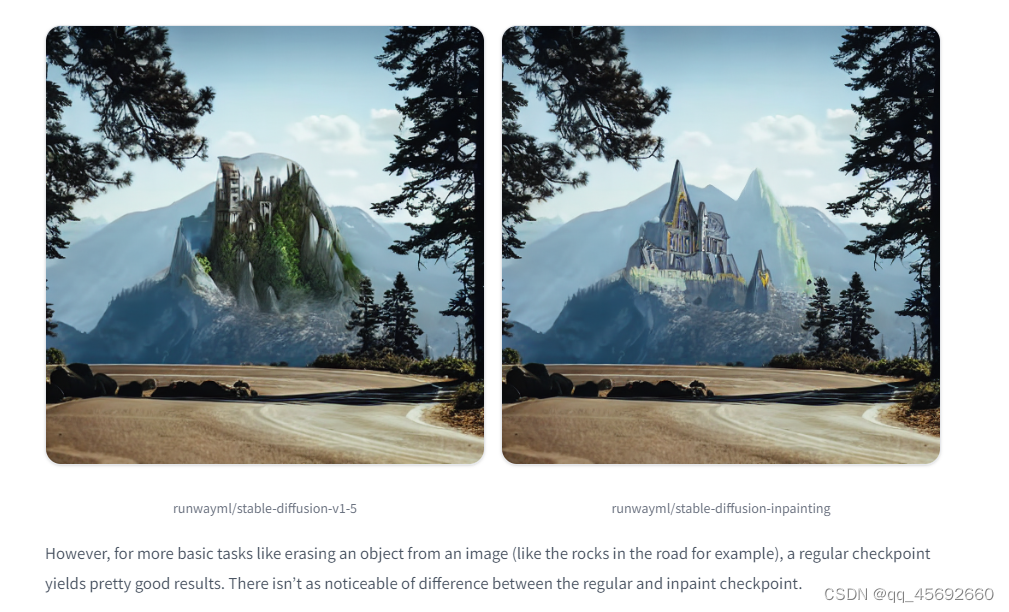

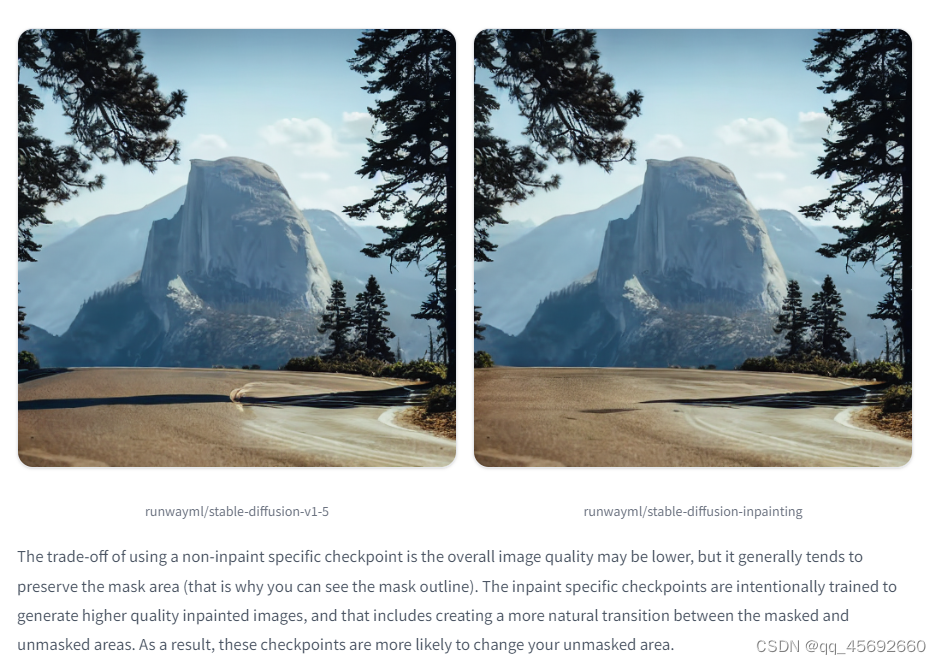

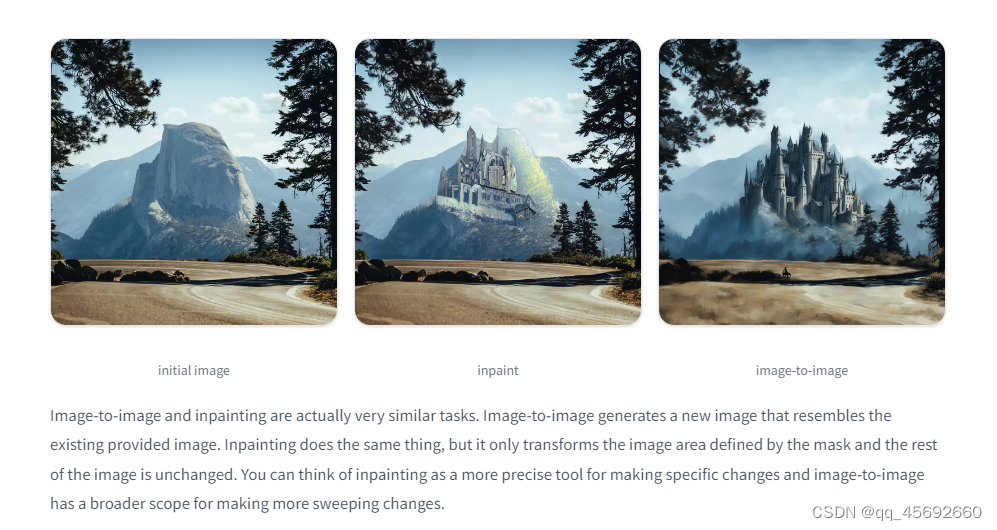

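In the white region of the mask, only the generated pixels are used; everywhere else, the original image is kept. The resulting composite is not entirely smooth, however.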

```python
import PIL.Image
import numpy as np
import torch

from diffusers import AutoPipelineForInpainting
from diffusers.utils import load_image, make_image_grid

device = "cuda"
pipeline = AutoPipelineForInpainting.from_pretrained(
    "runwayml/stable-diffusion-inpainting",
    torch_dtype=torch.float16,
)
pipeline = pipeline.to(device)

img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"
mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

init_image = load_image(img_url).resize((512, 512))
mask_image = load_image(mask_url).resize((512, 512))

prompt = "Face of a yellow cat, high resolution, sitting on a park bench"
repainted_image = pipeline(prompt=prompt, image=init_image, mask_image=mask_image).images[0]
repainted_image.save("repainted_image.png")

# Convert mask to grayscale NumPy array
mask_image_arr = np.array(mask_image.convert("L"))
# Add a channel dimension to the end of the grayscale mask
mask_image_arr = mask_image_arr[:, :, None]
# Binarize the mask: 1s correspond to the pixels which are repainted
mask_image_arr = mask_image_arr.astype(np.float32) / 255.0
mask_image_arr[mask_image_arr < 0.5] = 0
mask_image_arr[mask_image_arr >= 0.5] = 1

# Take the masked pixels from the repainted image and the unmasked pixels from the initial image
unmasked_unchanged_image_arr = (1 - mask_image_arr) * init_image + mask_image_arr * repainted_image
unmasked_unchanged_image = PIL.Image.fromarray(unmasked_unchanged_image_arr.round().astype("uint8"))
unmasked_unchanged_image.save("force_unmasked_unchanged.png")

make_image_grid([init_image, mask_image, repainted_image, unmasked_unchanged_image], rows=2, cols=2)
```
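One possible way to reduce the unsmooth look is to soften (feather) the mask before compositing, so the blend ramps gradually instead of switching at a hard edge. The sketch below is a NumPy-only illustration on synthetic arrays, not part of the original code: `composite` and `feather` are hypothetical helper names, and the box blur is just one simple choice of smoothing.

```python
import numpy as np

def composite(original, generated, mask):
    """Blend two HxWx3 uint8 images with an HxW mask in [0, 1] (1 = repainted)."""
    m = mask.astype(np.float32)[:, :, None]  # add a channel axis for broadcasting
    blended = (1.0 - m) * original.astype(np.float32) + m * generated.astype(np.float32)
    return blended.round().astype(np.uint8)

def feather(mask, radius):
    """Soften a binary mask with a separable box blur (hypothetical helper)."""
    kernel = np.full(2 * radius + 1, 1.0 / (2 * radius + 1), dtype=np.float32)
    # Pad with edge values so the output keeps the input shape
    padded = np.pad(mask.astype(np.float32), radius, mode="edge")
    # Blur along rows, then along columns
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    both = np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")
    return np.clip(both, 0.0, 1.0)

# Synthetic stand-ins: a black "original" and a uniform gray "generated" image
original = np.zeros((8, 8, 3), dtype=np.uint8)
generated = np.full((8, 8, 3), 200, dtype=np.uint8)

# Binary mask: the right half is repainted
mask = np.zeros((8, 8), dtype=np.float32)
mask[:, 4:] = 1.0

hard = composite(original, generated, mask)                     # hard seam at column 4
soft = composite(original, generated, feather(mask, radius=1))  # gradual transition

print(hard[0, :, 0])
print(soft[0, :, 0])
```

The trade-off is that a feathered mask lets a little generated content bleed into pixels just outside the mask, so the unmasked area is no longer preserved exactly near the boundary.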

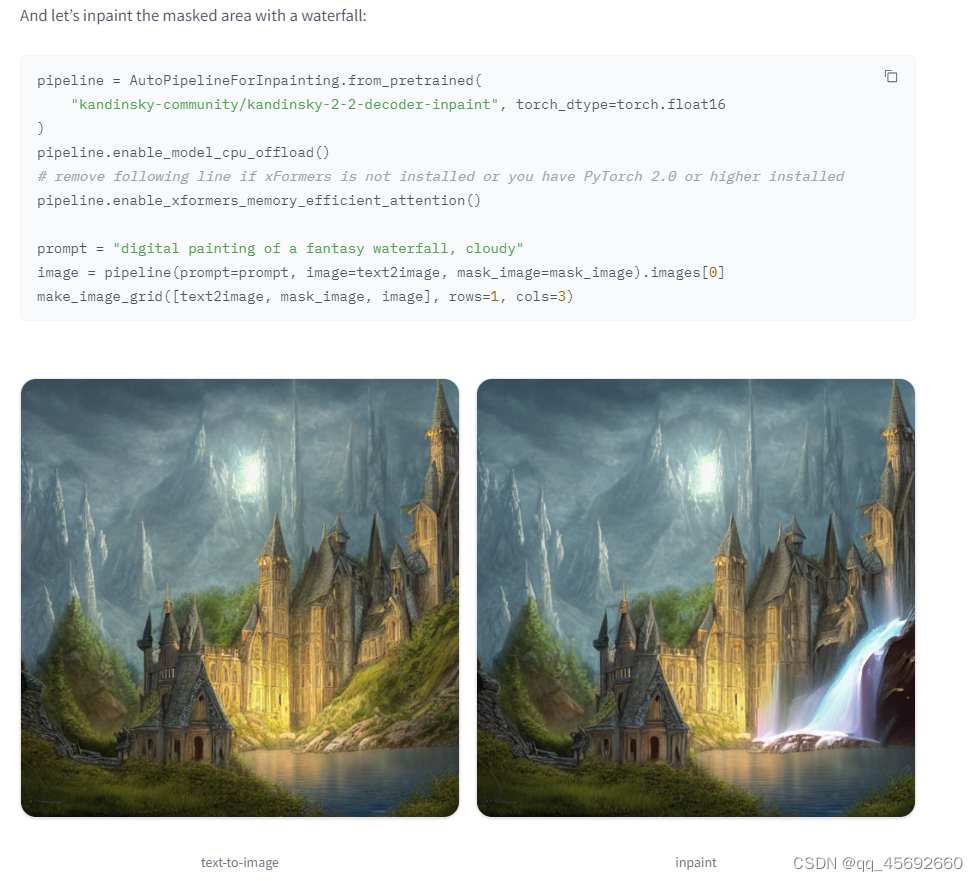

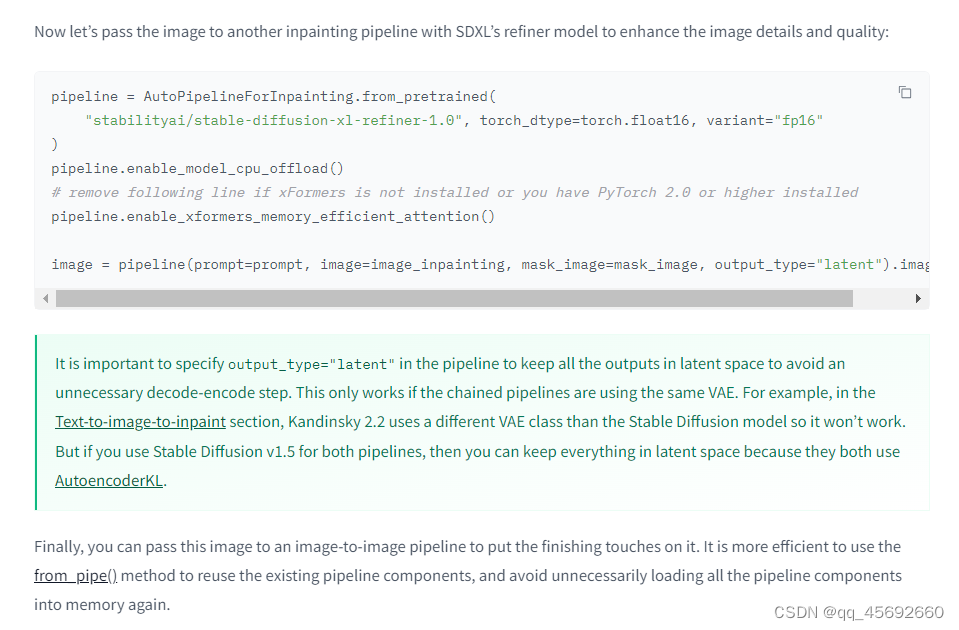

The two pipelines do not use the same VAE; if they did, the first pipeline could output latents directly instead of a decoded image.
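To illustrate why latents can only be handed over when both stages share a VAE, here is a toy sketch. `ToyVAE` is a hypothetical stand-in (an orthogonal linear map), not the diffusers VAE; it only shows that a latent is meaningful solely to the decoder it was paired with.

```python
import numpy as np

class ToyVAE:
    """Hypothetical stand-in for a VAE: an invertible (orthogonal) linear map."""

    def __init__(self, seed, dim=4):
        rng = np.random.default_rng(seed)
        # Q from a QR decomposition is orthogonal, so its transpose is its inverse
        q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))
        self.weights = q

    def encode(self, x):
        return x @ self.weights

    def decode(self, z):
        return z @ self.weights.T

image = np.random.default_rng(0).normal(size=(1, 4))
vae_a, vae_b = ToyVAE(seed=1), ToyVAE(seed=2)

latent = vae_a.encode(image)

same = vae_a.decode(latent)    # same VAE: the image is recovered
cross = vae_b.decode(latent)   # different VAE: the latent is misinterpreted
# With different VAEs, go through pixel space: decode with A, then re-encode with B
via_pixels = vae_b.decode(vae_b.encode(vae_a.decode(latent)))

print(np.allclose(same, image), np.allclose(cross, image), np.allclose(via_pixels, image))
```

In diffusers, when the VAEs do match, the first pipeline would typically be called with `output_type="latent"` to skip the extra decode and encode step between stages.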
