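Elasticsearch is a distributed, highly scalable, near-real-time search and data analytics engine. It makes large volumes of data easy to search, analyze, and explore.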


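1. Create an index. Send a PUT request to `http://localhost:9200/<index name>` with the following body:

~~~json
{
  "settings": {
    "index": {
      "number_of_shards": 1,
      "number_of_replicas": 0
    }
  }
}
~~~

`number_of_shards` is the number of primary shards. Shards can be stored on different nodes, which improves throughput and availability; one shard is enough to start with because this setup is not a cluster. `number_of_replicas` is the number of replica copies of each shard, which improves availability. To view the information of the index you created, send GET `http://localhost:9200/<index name>`.

The `/<index>/<type>` URL form used throughout these notes is the Elasticsearch 6.x API; mapping types were deprecated in 7.x and removed in 8.x.

2. Create a mapping. Send a POST request to `http://localhost:9200/<index name>/<type name>/_mapping`, for example `http://localhost:9200/xc_course/doc/_mapping`. A `text` field is roughly comparable to a `varchar` column:

~~~json
{
  "properties": {
    "name": {"type": "text"},
    "description": {"type": "text"},
    "studymodel": {"type": "keyword"}
  }
}
~~~

3. Index a document. Send PUT or POST to `http://localhost:9200/xc_course/doc/<id>`, for example `http://localhost:9200/xc_course/doc/1`. If the id is omitted (POST only), Elasticsearch generates one.

~~~json
{
  "name": "Bootstrap开发框架",
  "description": "Bootstrap是由Twitter推出的一个前台页面开发框架,在行业之中使用较为广泛。此开发框架包含了大量的CSS、JS程序代码,可以帮助开发者(尤其是不擅长页面开发的程序人员)轻松的实现一个不受浏览器限制的精美界面效果。",
  "studymodel": "201001"
}
~~~

4. Get a document by course id. Send GET `http://localhost:9200/xc_course/doc/1`.

5. Get all documents. Send GET `http://localhost:9200/xc_course/doc/_search`.

6. Find the records whose name contains the keyword `bootstrap`. Send GET `http://localhost:9200/xc_course/doc/_search?q=name:bootstrap`.

7. Test an analyzer. Send POST `localhost:9200/_analyze`. A word that has not been added to `main.dic` (such as the name below) is split into single characters:

~~~json
{
  "text": "郭树翔",
  "analyzer": "ik_max_word"
}
~~~

`ik_max_word` splits the text at the finest granularity; `ik_smart` splits it coarsely. To compare them, send the body below once with each analyzer:

~~~json
{
  "text": "测试分词器,后边是测试内容:spring cloud实战",
  "analyzer": "ik_max_word"
}
~~~

8. View the mapping. Send GET `http://localhost:9200/<index name>/_mapping` (if there is more than one index, the name must be given). The response lists all fields of the index and their attributes:

~~~json
{
  "gsx_frank": {
    "mappings": {
      "doc": {
        "properties": {
          "description": {
            "type": "text",
            "fields": {"keyword": {"type": "keyword", "ignore_above": 256}}
          },
          "name": {
            "type": "text",
            "fields": {"keyword": {"type": "keyword", "ignore_above": 256}}
          },
          "studymodel": {
            "type": "text",
            "fields": {"keyword": {"type": "keyword", "ignore_above": 256}}
          }
        }
      }
    }
  }
}
~~~

Request bodies are sent as `application/json`.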
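As a rough sketch of step 1 from Java, the create-index request can be assembled with the JDK's `java.net.http` API (Java 11+). The class name `CreateIndexRequestSketch` and the index name passed in are assumptions made for illustration, and the request is only built, not sent, because sending it needs a node listening on `localhost:9200`.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Illustrative only: builds the PUT request that creates an index. */
final class CreateIndexRequestSketch {

    /** Settings body from step 1: one shard, no replicas. */
    static final String SETTINGS_JSON =
            "{\"settings\":{\"index\":{\"number_of_shards\":1,\"number_of_replicas\":0}}}";

    /** Builds (but does not send) PUT http://localhost:9200/<indexName>. */
    static HttpRequest build(String indexName) {
        return HttpRequest.newBuilder(URI.create("http://localhost:9200/" + indexName))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString(SETTINGS_JSON))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build("xc_course");
        // Print the method and target so the request can be checked by eye.
        System.out.println(request.method() + " " + request.uri());
    }
}
```

To actually send it, the request could be passed to `HttpClient.newHttpClient().send(...)` once a node is running.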

9. Delete an index. Send a DELETE request to `http://localhost:9200/<index name>`.

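The difference between `analyzer` and `search_analyzer`

An analyzer is used in two situations:

When a document is inserted, its `text` fields are tokenized and the tokens are written to the inverted index.

When a query runs, the `text` input being searched for is tokenized first, and the resulting tokens are looked up in the inverted index.

At index time (that is, when a document is inserted), Elasticsearch only checks whether the field defines an `analyzer`: if it does, that analyzer is used; otherwise the Elasticsearch default is used.

At query time, Elasticsearch first checks whether the field defines a `search_analyzer`. If it does not, the field's `analyzer` is used, and only when neither is defined does Elasticsearch fall back to its default.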
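The fallback order can be written down as two small functions. This is only a sketch of the rule as stated in these notes, not Elasticsearch's own code; the class name `AnalyzerResolution` is made up, and `standard` is used as the default analyzer.

```java
/** Sketch of the analyzer fallback order; not Elasticsearch's implementation. */
final class AnalyzerResolution {

    /** Stand-in for the analyzer used when the mapping defines none. */
    static final String DEFAULT_ANALYZER = "standard";

    /** Index time: the field's analyzer if defined, otherwise the default. */
    static String forIndexing(String analyzer) {
        return analyzer != null ? analyzer : DEFAULT_ANALYZER;
    }

    /** Query time: search_analyzer first, then analyzer, then the default. */
    static String forSearching(String searchAnalyzer, String analyzer) {
        if (searchAnalyzer != null) {
            return searchAnalyzer;
        }
        return forIndexing(analyzer);
    }

    public static void main(String[] args) {
        System.out.println(forIndexing("ik_max_word"));
        System.out.println(forSearching("ik_smart", "ik_max_word"));
        System.out.println(forSearching(null, "ik_max_word"));
        System.out.println(forSearching(null, null));
    }
}
```

A common combination is `ik_max_word` for indexing (more tokens in the index) and `ik_smart` for searching (fewer, coarser query tokens).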

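10. index

The `index` attribute controls whether a field is indexed.

The default is `index=true`: the field is indexed, and only indexed fields can be searched.

Some content does not need to be indexed. For example, a product image URL is only used to display the image and is never searched on, so its `index` can be set to `false`.

To try it, delete the index, recreate the mapping with `index` set to `false` for `pic`, and then search by `pic`: the search no longer finds the data.

    "pic": {
      "type": "text",
      "index": false
    }

With this mapping the `pic` field can no longer be searched on.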

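11. keyword fields

The `text` fields described above need an analyzer in their mapping. A `keyword` field is searched as a whole value, so it is not tokenized when it is indexed. Typical examples are postal codes, mobile phone numbers, and ID numbers. `keyword` fields are usually used for filtering, sorting, and aggregations.

A price can be mapped as `scaled_float`. With a `scaling_factor` of 100, the value is stored as `price * 100`, rounded to the nearest integer:

    "price": {
      "type": "scaled_float",
      "scaling_factor": 100
    },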
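The arithmetic behind `scaled_float` can be sketched in a few lines. This illustrates the rounding rule only and is not Elasticsearch's implementation; the class and method names are hypothetical.

```java
/** Sketch of the scaled_float arithmetic; not Elasticsearch's implementation. */
final class ScaledFloatSketch {

    /** Value kept internally: round(value * scalingFactor). */
    static long encode(double value, double scalingFactor) {
        return Math.round(value * scalingFactor);
    }

    /** Value as seen when searching: stored / scalingFactor. */
    static double decode(long stored, double scalingFactor) {
        return stored / scalingFactor;
    }

    public static void main(String[] args) {
        long stored = encode(38.6, 100);
        System.out.println(stored);
        System.out.println(decode(stored, 100));
        // A third decimal place is lost: 5.678 is stored as 568.
        System.out.println(encode(5.678, 100));
    }
}
```

With a factor of 100 the field keeps two decimal places, which is usually enough for prices.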

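Fields in a search response:

`took`: time the operation took, in milliseconds.

`timed_out`: whether the request timed out.

`_shards`: which shards were searched by this operation.

`hits`: the matching records.

`hits.total`: total number of documents that match.

`hits.hits`: the top N documents with the highest relevance.

`hits.max_score`: the highest relevance score among the matches.

`_score`: the relevance score of each document; hits are sorted by it in descending order.

`_source`: the original content of the document.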

    package com.guoshuxiang.service;

    import org.elasticsearch.action.admin.indices.create.CreateIndexRequest;
    import org.elasticsearch.action.admin.indices.create.CreateIndexResponse;
    import org.elasticsearch.action.admin.indices.delete.DeleteIndexRequest;
    import org.elasticsearch.action.delete.DeleteRequest;
    import org.elasticsearch.action.delete.DeleteResponse;
    import org.elasticsearch.action.get.GetRequest;
    import org.elasticsearch.action.get.GetResponse;
    import org.elasticsearch.action.index.IndexRequest;
    import org.elasticsearch.action.index.IndexResponse;
    import org.elasticsearch.action.support.master.AcknowledgedResponse;
    import org.elasticsearch.action.update.UpdateRequest;
    import org.elasticsearch.action.update.UpdateResponse;
    import org.elasticsearch.client.RequestOptions;
    import org.elasticsearch.client.RestHighLevelClient;
    import org.elasticsearch.common.settings.Settings;
    import org.elasticsearch.common.xcontent.XContentType;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Service;

    import java.text.SimpleDateFormat;
    import java.util.Date;
    import java.util.HashMap;
    import java.util.Map;

    @Service
    public class EsService {

        @Autowired
        private RestHighLevelClient client;

        /**
         * Creates the index and adds its mapping in the same request.
         *
         * @throws Exception
         */
        public void createIndex() throws Exception {
            // Create the index request
            CreateIndexRequest createIndexRequest = new CreateIndexRequest("zg_love");
            // Start with 1 shard and 0 replicas
            createIndexRequest.settings(Settings.builder()
                    .put("number_of_shards", "1")
                    .put("number_of_replicas", "0"));
            createIndexRequest.mapping("doc", "{\n" +
                    "\t\"properties\": {\n" +
                    "\t\t\"name\": {\n" +
                    "\t\t\t\"type\": \"text\",\n" +
                    "\t\t\t\"analyzer\": \"ik_max_word\",\n" +
                    "\t\t\t\"search_analyzer\": \"ik_smart\"\n" +
                    "\t\t},\n" +
                    "\t\t\"description\": {\n" +
                    "\t\t\t\"type\": \"text\",\n" +
                    "\t\t\t\"analyzer\": \"ik_max_word\",\n" +
                    "\t\t\t\"search_analyzer\": \"ik_smart\"\n" +
                    "\t\t},\n" +
                    "\t\t\"studymodel\": {\n" +
                    "\t\t\t\"type\": \"keyword\"\n" +
                    "\t\t},\n" +
                    "\t\t\"price\": {\n" +
                    "\t\t\t\"type\": \"float\"\n" +
                    "\t\t},\n" +
                    "\t\t\"timestamp\": {\n" +
                    "\t\t\t\"type\": \"date\",\n" +
                    "\t\t\t\"format\": \"yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||epoch_millis\"\n" +
                    "\t\t}\n" +
                    "\t}\n" +
                    "}", XContentType.JSON); // the mapping source is passed as JSON
            CreateIndexResponse createIndexResponse =
                    client.indices().create(createIndexRequest, RequestOptions.DEFAULT);
            System.out.println(createIndexResponse.isAcknowledged()); // whether the index was created
        }

        /**
         * Deletes the index.
         *
         * @throws Exception
         */
        public void deleteIndex() throws Exception {
            DeleteIndexRequest indexRequest = new DeleteIndexRequest("zg_love");
            AcknowledgedResponse delete = client.indices().delete(indexRequest, RequestOptions.DEFAULT);
            System.out.println(delete.isAcknowledged());
        }

        /**
         * Creating and deleting an index both use an XxxIndexRequest
         * (CreateIndexRequest, DeleteIndexRequest). Operations on the documents
         * of an index use GetRequest, DeleteRequest, IndexRequest, and UpdateRequest.
         */
        /**
         * Adds one document.
         * CreateIndexRequest creates and configures an index; IndexRequest adds
         * data to an index and makes it searchable.
         *
         * @throws Exception
         */
        public void addDoc() throws Exception {
            Map<String, Object> jsonMap = new HashMap<>();
            jsonMap.put("name", "spring cloud实战");
            jsonMap.put("description", "本课程主要从四个章节进行讲解: 1.微服务架构入门 2.spring cloud基础入门 3.实战Spring Boot 4.注册中心eureka。");
            jsonMap.put("studymodel", "201001");
            SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
            jsonMap.put("timestamp", dateFormat.format(new Date()));
            jsonMap.put("price", 5.6f);
            // Index request: index, type, id. If no id is given, one is generated.
            IndexRequest indexRequest = new IndexRequest("zg_love", "doc", "1");
            indexRequest.source(jsonMap); // the document content must be set as the source
            IndexResponse indexResponse = client.index(indexRequest, RequestOptions.DEFAULT);
            System.out.println(indexResponse.status());
        }

        /**
         * Updates one document.
         *
         * @throws Exception
         */
        public void update() throws Exception {
            UpdateRequest updateRequest = new UpdateRequest("zg_love", "doc", "1");
            Map<String, Object> jsonMap = new HashMap<>();
            jsonMap.put("name", "spring love you");
            updateRequest.doc(jsonMap); // a partial update is passed through doc()
            UpdateResponse updateResponse = client.update(updateRequest, RequestOptions.DEFAULT);
            System.out.println(updateResponse.status());
        }

        /**
         * Gets one document.
         *
         * @throws Exception
         */
        public void get() throws Exception {
            GetRequest getRequest = new GetRequest("zg_love", "doc", "1");
            // Each record stored in an index is a document, which consists of fields
            GetResponse documentFields = client.get(getRequest, RequestOptions.DEFAULT);
            Map<String, Object> sourceAsMap = documentFields.getSourceAsMap();
            System.out.println(sourceAsMap);
        }

        /**
         * Deletes one document.
         *
         * @throws Exception
         */
        public void delete() throws Exception {
            DeleteRequest deleteRequest = new DeleteRequest("zg_love", "doc", "1");
            DeleteResponse deleteResponse = client.delete(deleteRequest, RequestOptions.DEFAULT);
            System.out.println(deleteResponse.status());
        }
    }

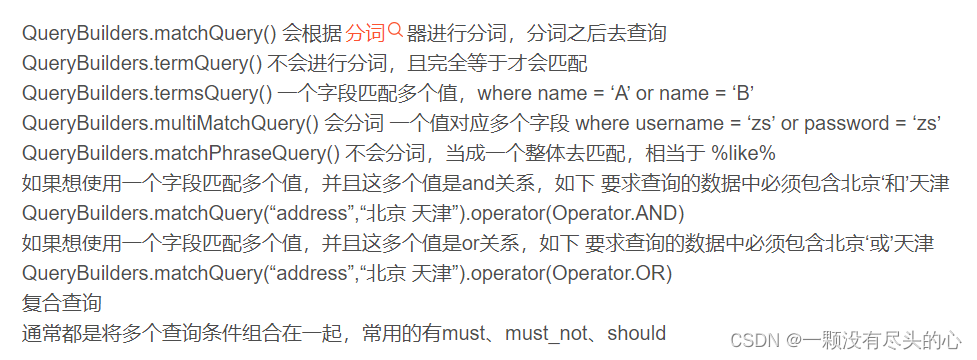

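The following queries are sent as POST requests, and every request body is JSON.

Search the documents of a given type in a given index:

POST `http://localhost:9200/xc_course/doc/_search`

    {
      "query": {
        "match_all": {}
      }
    }

In Java, the same query is built with `QueryBuilders.matchAllQuery()`.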

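Paging and source filtering. `from`, `size`, `query`, and `_source` are siblings at the top level of the body:

    {
      "from": 0,
      "size": 1,
      "query": {"match_all": {}},
      "_source": ["name", "studymodel"]
    }

In Java, the paging parameters are set on the `SearchSourceBuilder`, at the same level as the query:

    // index is the 1-based page number, size is the page size
    searchSourceBuilder.from((index - 1) * size);
    searchSourceBuilder.size(size);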
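The offset calculation is easy to get wrong by one, so here is a minimal standalone sketch of it. The class name `PagingSketch` and the helper `searchBody` are hypothetical; the body is plain string concatenation, not a client API.

```java
/** Sketch of the from/size arithmetic used for paging. */
final class PagingSketch {

    /** Converts a 1-based page number into the "from" offset. */
    static int fromOffset(int page, int size) {
        if (page < 1 || size < 1) {
            throw new IllegalArgumentException("page and size must be >= 1");
        }
        return (page - 1) * size;
    }

    /** Builds a match_all search body for the given page. */
    static String searchBody(int page, int size) {
        return "{\"from\": " + fromOffset(page, size)
                + ", \"size\": " + size
                + ", \"query\": {\"match_all\": {}}}";
    }

    public static void main(String[] args) {
        System.out.println(fromOffset(1, 10));
        System.out.println(fromOffset(3, 10));
        System.out.println(searchBody(3, 10));
    }
}
```

Page 1 starts at offset 0, and page 3 with a page size of 10 starts at offset 20.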

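Term query. A `term` query looks up the given term in the inverted index as-is; the input is not analyzed. On an analyzed `text` field such as `name`, it matches documents whose tokens include `java`, which behaves somewhat like `LIKE '%java%'` at word level:

    {
      "query": {
        "term": {"name": "java"}
      },
      "_source": ["name", "studymodel"]
    }

In Java (exact match on the `studymodel` field):

    TermQueryBuilder termQueryBuilder = QueryBuilders.termQuery("studymodel", "201002");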

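Terms query. A `terms` query matches one field against several values; the array can hold any number of them:

    {
      "query": {
        "terms": {"price": [38.6, 68.6]}
      },
      "_source": ["name", "studymodel"]
    }

In Java the values are varargs, so an array can be passed for a multi-value query on a single field:

    QueryBuilders.termsQuery("studymodel", stus); // stus is an array of values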

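Match query. A `match` query analyzes the input and searches for the resulting tokens:

    {
      "query": {
        "match": {
          "description": {
            "query": "spring框架",
            "operator": "or",
            "minimum_should_match": "80%"
          }
        }
      }
    }

In Java:

    // Mixed Chinese and English input is tokenized as expected only when the field
    // is of type text and is configured with the IK analyzer
    MatchQueryBuilder matchQueryBuilder = QueryBuilders.matchQuery("description", "spring开发框架");
    matchQueryBuilder.operator(Operator.OR);     // OR: matching any one of the tokens is enough
    matchQueryBuilder.minimumShouldMatch("80%"); // raises precision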
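For a positive percentage, the number of tokens that must match is the token count times the percentage, rounded down. The sketch below shows only that arithmetic; the class name is made up, and it does not cover the other forms `minimum_should_match` accepts (negative values, plain integers, combinations).

```java
/** Sketch of how a positive percentage in minimum_should_match is applied. */
final class MinimumShouldMatchSketch {

    /** Number of tokens that must match: tokenCount * percent / 100, rounded down. */
    static int requiredTokens(int tokenCount, int percent) {
        if (tokenCount < 0 || percent < 0 || percent > 100) {
            throw new IllegalArgumentException("expected tokenCount >= 0 and 0 <= percent <= 100");
        }
        return tokenCount * percent / 100; // integer division rounds down
    }

    public static void main(String[] args) {
        // 3 tokens at 80%: 3 * 0.8 = 2.4, rounded down to 2
        System.out.println(requiredTokens(3, 80));
        System.out.println(requiredTokens(5, 80));
        System.out.println(requiredTokens(2, 50));
    }
}
```

So with three query tokens and `"80%"`, a document needs at least two of them to match.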

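Multi-match query. `multi_match` runs an analyzed query against several fields; `name^10` boosts the weight of `name` by 10:

    {
      "query": {
        "multi_match": {
          "query": "spring css",
          "minimum_should_match": "50%",
          "fields": ["name^10", "description"]
        }
      }
    }

In Java:

    // Analyzed query over several text fields
    MultiMatchQueryBuilder multiMatchQueryBuilder = QueryBuilders
            .multiMatchQuery("spring框架", "name", "description");
    // Boost the name field
    multiMatchQueryBuilder.field("name", 10);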

Bool query. `must` requires every clause to match; `should` could be used instead when any clause is enough:

    {
      "query": {
        "bool": {
          "must": [
            {
              "multi_match": {
                "query": "spring框架",
                "minimum_should_match": "50%",
                "fields": ["name^10", "description"]
              }
            },
            {"term": {"studymodel": "201002"}}
          ]
        }
      }
    }

In Java:

    // Build the bool query
    BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();
    // Condition 1
    if (!StringUtils.isEmpty(keyword)) {
        // Analyzed query over several fields
        MultiMatchQueryBuilder multiMatchQueryBuilder =
                QueryBuilders.multiMatchQuery(keyword, "name", "description");
        // At least half of the tokens must match
        multiMatchQueryBuilder.minimumShouldMatch("50%");
        // Within this query, matching any one token is enough
        multiMatchQueryBuilder.operator(Operator.OR);
        // must: combined with the next condition as AND
        boolQueryBuilder.must(multiMatchQueryBuilder);
    }
    // Condition 2
    if (!StringUtils.isEmpty(studymodel)) {
        // Exact match
        TermQueryBuilder termQueryBuilder = QueryBuilders.termQuery("studymodel", studymodel);
        // AND with the previous condition
        boolQueryBuilder.must(termQueryBuilder);
    }

Filters. Filter clauses go in the `filter` array of a `bool` query; `_source` lists the fields to return:

    {
      "_source": ["name", "studymodel", "description", "price"],
      "query": {
        "bool": {
          "must": [
            {
              "multi_match": {
                "query": "spring框架",
                "minimum_should_match": "50%",
                "fields": ["name^10", "description"]
              }
            }
          ],
          "filter": [
            {"term": {"studymodel": "201001"}},
            {"range": {"price": {"gte": 60, "lte": 100}}}
          ]
        }
      }
    }

In Java:

    RangeQueryBuilder rangeQueryBuilder = QueryBuilders.rangeQuery("price"); // the field to filter on
    rangeQueryBuilder.gte(min).lte(max);         // min <= price <= max
    boolQueryBuilder.filter(rangeQueryBuilder);  // add it to the filters

Highlighting. `highlight` sits at the same level as `query`; both end up in the same search source:

    "highlight": {
      "pre_tags": ["<span style='color:red;'>"],
      "post_tags": ["</span>"],
      "fields": {
        "name": {},
        "description": {}
      }
    }

In Java:

    // Configure highlighting
    HighlightBuilder highlightBuilder = new HighlightBuilder();
    highlightBuilder.preTags("<span style='color:red;'>"); // opening tag
    highlightBuilder.postTags("</span>");                  // closing tag
    highlightBuilder.fields().add(new HighlightBuilder.Field("name")); // a field to highlight
    highlightBuilder.fields().add(new HighlightBuilder.Field("description"));
    searchSourceBuilder.highlighter(highlightBuilder); // set alongside the query

Reading the highlighted text from a hit:

    // Highlighted fields of this hit, keyed by field name
    Map<String, HighlightField> fieldMap = hit.getHighlightFields();
    HighlightField nameField = fieldMap.get("name");
    if (nameField != null) { // present only when the field matched
        StringBuffer nameSbf = new StringBuffer();
        Text[] fragments = nameField.fragments(); // the highlighted fragments
        for (Text text : fragments) {
            nameSbf.append(text.toString()); // concatenate the fragments
        }
        course.setName(nameSbf.toString()); // replace the plain value with the highlighted one
    }
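The fragment handling can be tried without a cluster. The sketch below uses plain strings in place of `Text` and adds a fallback to the original value when nothing was highlighted; the class name, the fallback parameter, and the sample fragments are assumptions for illustration.

```java
import java.util.Arrays;
import java.util.List;

/** Sketch of joining highlight fragments, with plain strings standing in for Text. */
final class HighlightJoinSketch {

    /** Joins the fragments in order; returns the original value if there are none. */
    static String joinOrFallback(List<String> fragments, String original) {
        if (fragments == null || fragments.isEmpty()) {
            return original;
        }
        StringBuilder sb = new StringBuilder();
        for (String fragment : fragments) {
            sb.append(fragment);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        List<String> fragments = Arrays.asList("<em>spring</em> cloud", " in action");
        System.out.println(joinOrFallback(fragments, "spring cloud in action"));
        System.out.println(joinOrFallback(null, "spring cloud in action"));
    }
}
```

`StringBuilder` is used here because the buffer is local to one method, so the synchronization of `StringBuffer` is not needed.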

"sort": [{"studymodel": "desc"}, {"price": "asc"}]

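In Java, pass `sort()` a `FieldSortBuilder` for the field and choose ascending or descending order (`price` is ascending, matching the JSON above):

    // Add sorting
    searchSourceBuilder.sort(new FieldSortBuilder("studymodel").order(SortOrder.DESC));
    searchSourceBuilder.sort(new FieldSortBuilder("price").order(SortOrder.ASC));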
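The sort keys apply in order: hits are ordered by `studymodel` descending, and `price` only breaks ties between equal `studymodel` values. The plain-Java comparator below mimics that ordering as an illustration; the `Row` class and sample values are hypothetical, and the real sorting happens inside Elasticsearch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Sketch of a two-key sort: studymodel descending, then price ascending. */
final class SortOrderSketch {

    /** Hypothetical stand-in for a search hit. */
    static final class Row {
        final String studymodel;
        final double price;

        Row(String studymodel, double price) {
            this.studymodel = studymodel;
            this.price = price;
        }

        @Override
        public String toString() {
            return studymodel + ":" + price;
        }
    }

    /** First key descending, second key ascending as the tie-breaker. */
    static final Comparator<Row> ORDER =
            Comparator.comparing((Row r) -> r.studymodel, Comparator.reverseOrder())
                    .thenComparingDouble(r -> r.price);

    /** Returns a sorted copy and leaves the input untouched. */
    static List<Row> sorted(List<Row> rows) {
        List<Row> copy = new ArrayList<>(rows);
        copy.sort(ORDER);
        return copy;
    }

    public static void main(String[] args) {
        List<Row> rows = Arrays.asList(
                new Row("201001", 68.6), new Row("201002", 88.0), new Row("201002", 38.6));
        System.out.println(sorted(rows));
    }
}
```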

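Terms aggregation: group the documents by `brand_name` in an aggregation named `brandGroup`, returning at most 50 buckets:

    searchSourceBuilder.aggregation(AggregationBuilders.terms("brandGroup").field("brand_name").size(50));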

Complete search methods for the queries above. They use the `client` field of `EsService` and the ES 6.x `RestHighLevelClient` API:

    // Elasticsearch and JDK imports needed in addition to those of EsService:
    //   org.elasticsearch.action.search.SearchRequest, SearchResponse
    //   org.elasticsearch.search.SearchHit, SearchHits
    //   org.elasticsearch.search.builder.SearchSourceBuilder
    //   org.elasticsearch.index.query.* (QueryBuilders, Operator, the query builders)
    //   org.elasticsearch.search.fetch.subphase.highlight.HighlightBuilder, HighlightField
    //   org.elasticsearch.search.sort.FieldSortBuilder, SortOrder
    //   org.elasticsearch.common.text.Text
    //   java.util.List, java.util.ArrayList

    public void all() throws Exception {
        SearchRequest searchRequest = new SearchRequest("zg_love");
        searchRequest.types("doc");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        MatchAllQueryBuilder matchAllQueryBuilder = QueryBuilders.matchAllQuery();
        sourceBuilder.query(matchAllQueryBuilder);
        searchRequest.source(sourceBuilder);
        SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits hits = searchResponse.getHits();
        long totalHits = hits.getTotalHits(); // total number of matching documents
        System.out.println("Total records: " + totalHits);
        SearchHit[] searchHits = hits.getHits(); // the documents themselves
        for (SearchHit hit : searchHits) {
            String id = hit.getId();
            Map<String, Object> sourceAsMap = hit.getSourceAsMap();
            sourceAsMap.put("id", id);
            System.out.println(sourceAsMap);
        }
    }

    /**
     * Paged search. from/size sit at the same level as the query.
     *
     * @param index page number, starting at 1
     * @param size  page size
     * @throws Exception
     */
    public void page(Integer index, Integer size) throws Exception {
        SearchRequest searchRequest = new SearchRequest("zg_love");
        searchRequest.types("doc");
        // The search source holds the query and the paging parameters
        SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();
        searchSourceBuilder.from((index - 1) * size);
        searchSourceBuilder.size(size);
        MatchAllQueryBuilder matchAllQueryBuilder = QueryBuilders.matchAllQuery();
        searchSourceBuilder.query(matchAllQueryBuilder); // the query
        searchRequest.source(searchSourceBuilder);       // attach the source to the request
        SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits hits = searchResponse.getHits();
        long totalHits = hits.getTotalHits();
        System.out.println("Total records: " + totalHits);
        SearchHit[] hitsHits = hits.getHits();
        for (SearchHit hit : hitsHits) {
            String id = hit.getId();
            Map<String, Object> sourceAsMap = hit.getSourceAsMap();
            sourceAsMap.put("id", id);
            System.out.println(sourceAsMap);
        }
    }

    /**
     * Term (exact) query: the value must match an indexed term as a whole,
     * otherwise nothing is found.
     *
     * @throws Exception
     */
    public void term() throws Exception {
        SearchRequest searchRequest = new SearchRequest("zg_love");
        searchRequest.types("doc");
        SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();
        TermQueryBuilder termQueryBuilder = QueryBuilders.termQuery("name", "spring");
        searchSourceBuilder.query(termQueryBuilder);
        // Fields to return
        searchSourceBuilder.fetchSource(new String[]{"name", "studymodel", "price"}, null);
        searchRequest.source(searchSourceBuilder); // without the source, every document is returned
        SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits hits = searchResponse.getHits();
        long totalHits = hits.getTotalHits();
        System.out.println("Total records: " + totalHits);
        SearchHit[] hitsHits = hits.getHits();
        for (SearchHit hit : hitsHits) {
            String id = hit.getId();
            Map<String, Object> sourceAsMap = hit.getSourceAsMap();
            sourceAsMap.put("id", id);
            System.out.println(sourceAsMap);
        }
    }

    /**
     * Exact query on a single field with one or more values.
     *
     * @throws Exception
     */
    public void terms() throws Exception {
        SearchRequest searchRequest = new SearchRequest("zg_love");
        searchRequest.types("doc");
        SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();
        // Field name, then one or more exact values (varargs)
        TermsQueryBuilder termsQueryBuilder = QueryBuilders.termsQuery("name", "cloud");
        searchSourceBuilder.query(termsQueryBuilder);
        searchRequest.source(searchSourceBuilder);
        SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits hits = searchResponse.getHits();
        long totalHits = hits.getTotalHits();
        System.out.println("Total records: " + totalHits);
        SearchHit[] searchHits = hits.getHits();
        for (SearchHit hit : searchHits) {
            String id = hit.getId();
            Map<String, Object> sourceAsMap = hit.getSourceAsMap();
            sourceAsMap.put("id", id);
            System.out.println(sourceAsMap);
        }
    }

    /**
     * Analyzed (full-text) query; the field must be of type text.
     *
     * @throws Exception
     */
    public void match() throws Exception {
        SearchRequest searchRequest = new SearchRequest("zg_love");
        searchRequest.types("doc");
        SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();
        // Analyzed queries use match
        MatchQueryBuilder matchQueryBuilder = QueryBuilders.matchQuery("description", "spring开发入门");
        matchQueryBuilder.operator(Operator.OR);     // OR is the default: any one token matching is enough
        matchQueryBuilder.minimumShouldMatch("80%"); // 3 * 0.8 = 2.4, rounded down to 2: at least two tokens must match
        searchSourceBuilder.query(matchQueryBuilder);
        searchRequest.source(searchSourceBuilder);
        // Execute the request and read the response
        SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits hits = searchResponse.getHits();
        long totalHits = hits.getTotalHits();
        System.out.println("Total records: " + totalHits);
        SearchHit[] searchHits = hits.getHits();
        for (SearchHit hit : searchHits) {
            String id = hit.getId();
            Map<String, Object> sourceAsMap = hit.getSourceAsMap();
            sourceAsMap.put("id", id);
            System.out.println(sourceAsMap);
        }
    }

    /**
     * Analyzed query over several fields.
     *
     * @throws Exception
     */
    public void mutilMatch() throws Exception {
        SearchRequest searchRequest = new SearchRequest("zg_love");
        searchRequest.types("doc");
        SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();
        // Search for the same text in both fields
        MultiMatchQueryBuilder multiMatchQueryBuilder =
                QueryBuilders.multiMatchQuery("实战语言", "name", "description");
        multiMatchQueryBuilder.field("name", 10); // boost name so its matches score higher and rank first
        searchSourceBuilder.query(multiMatchQueryBuilder);
        searchRequest.source(searchSourceBuilder);
        // Execute the request and read the response
        SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits hits = searchResponse.getHits();
        long totalHits = hits.getTotalHits();
        System.out.println("Total records: " + totalHits);
        SearchHit[] searchHits = hits.getHits();
        for (SearchHit hit : searchHits) {
            String id = hit.getId();
            float score = hit.getScore();
            Map<String, Object> sourceAsMap = hit.getSourceAsMap();
            sourceAsMap.put("id", id);
            sourceAsMap.put("score", score);
            System.out.println(sourceAsMap);
        }
    }

    /**
     * Note: a range or term query targets one field at a time, not several.
     *
     * @param keyword    text for the analyzed query
     * @param studymodel value for the exact query
     * @throws Exception
     */
    public void bool(String keyword, String studymodel) throws Exception {
        SearchRequest searchRequest = new SearchRequest("zg_love");
        searchRequest.types("doc");
        SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();
        // The bool query is the outer condition
        BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();
        // Note the negation: add the clause only when keyword is not empty
        if (!StringUtils.isEmpty(keyword)) {
            // Analyzed query over several fields
            MultiMatchQueryBuilder multiMatchQueryBuilder =
                    QueryBuilders.multiMatchQuery(keyword, "name", "description");
            multiMatchQueryBuilder.minimumShouldMatch("50%");
            multiMatchQueryBuilder.operator(Operator.OR);
            multiMatchQueryBuilder.field("description", 10);
            boolQueryBuilder.must(multiMatchQueryBuilder);
        }
        // must: this clause and the one above both have to match
        if (!StringUtils.isEmpty(studymodel)) {
            // Exact query
            TermsQueryBuilder termQueryBuilder = QueryBuilders.termsQuery("studymodel", studymodel);
            boolQueryBuilder.must(termQueryBuilder);
        }
        searchSourceBuilder.query(boolQueryBuilder);
        searchRequest.source(searchSourceBuilder);
        // Execute the request and read the response
        SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits hits = searchResponse.getHits();
        long totalHits = hits.getTotalHits();
        System.out.println("Total records: " + totalHits);
        SearchHit[] searchHits = hits.getHits();
        for (SearchHit hit : searchHits) {
            String id = hit.getId();
            float score = hit.getScore();
            Map<String, Object> sourceAsMap = hit.getSourceAsMap();
            sourceAsMap.put("id", id);
            sourceAsMap.put("score", score);
            System.out.println(sourceAsMap);
        }
    }

    public List<Course> filter(String keyword, String studymodel, Double min, Double max) throws Exception {
        SearchRequest searchRequest = new SearchRequest("zg_love");
        searchRequest.types("doc");
        SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();
        BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();

        // The must clause selects the base result set; the filters below narrow it
        if (!StringUtils.isEmpty(keyword)) {
            MultiMatchQueryBuilder multiMatchQueryBuilder =
                    QueryBuilders.multiMatchQuery(keyword, "name", "description");
            multiMatchQueryBuilder.minimumShouldMatch("50%");
            multiMatchQueryBuilder.field("description", 10);
            boolQueryBuilder.must(multiMatchQueryBuilder);
        }
        if (!StringUtils.isEmpty(studymodel)) {
            TermsQueryBuilder termsQueryBuilder = QueryBuilders.termsQuery("studymodel", studymodel);
            boolQueryBuilder.filter(termsQueryBuilder);
        }
        if (min != null && max != null) {
            RangeQueryBuilder rangeQueryBuilder = QueryBuilders.rangeQuery("price");
            rangeQueryBuilder.gte(min).lte(max);
            boolQueryBuilder.filter(rangeQueryBuilder);
        }
        // Sorting is a method of the search source builder, so it is set there directly
        searchSourceBuilder.sort(new FieldSortBuilder("studymodel").order(SortOrder.DESC));
        searchSourceBuilder.sort(new FieldSortBuilder("price").order(SortOrder.ASC));
        HighlightBuilder highlightBuilder = new HighlightBuilder();
        highlightBuilder.preTags("<span style='color:red;'>");
        highlightBuilder.postTags("</span>");

        // Another way to write the search and highlighting (older client API):
        // SearchRequestBuilder searchRequestBuilder = client.prepareSearch("blog2").
        //         setTypes("article").setQuery(QueryBuilders.termQuery("title", "搜索 "));
        // // Highlight definition
        // searchRequestBuilder.addHighlightedField("title"); // highlight the title field
        // searchRequestBuilder.setHighlighterPreTags("<em>");  // opening tag
        // searchRequestBuilder.setHighlighterPostTags("</em>"); // closing tag
        // SearchResponse searchResponse = searchRequestBuilder.get();

        // Add the fields to highlight as HighlightBuilder.Field objects
        highlightBuilder.fields().add(new HighlightBuilder.Field("name"));
        highlightBuilder.fields().add(new HighlightBuilder.Field("description"));
        searchSourceBuilder.query(boolQueryBuilder);
        searchSourceBuilder.highlighter(highlightBuilder); // attach the highlighter
        searchRequest.source(searchSourceBuilder);
        SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
        // If a front end consumes the result, the console output below can be dropped
        SearchHits hits = searchResponse.getHits();
        long totalHits = hits.getTotalHits();
        System.out.println("Total records: " + totalHits);
        SearchHit[] searchHits = hits.getHits();
        List<Course> list = new ArrayList<>();
        for (SearchHit hit : searchHits) {
            String id = hit.getId();
            String json = hit.getSourceAsString();
            // Map the JSON fields onto the Course properties one by one
            Course course = JSON.parseObject(json, Course.class);
            // Highlighted fields of this hit
            Map<String, HighlightField> highlightFields = hit.getHighlightFields();
            HighlightField nameField = highlightFields.get("name");
            if (nameField != null) {
                StringBuffer nameBuf = new StringBuffer();
                // A fragment is one highlighted piece of the original content;
                // there may be more than one
                Text[] fragments = nameField.fragments();
                for (Text text : fragments) {
                    nameBuf.append(text.toString());
                }
                course.setName(nameBuf.toString());
            }
            HighlightField description = highlightFields.get("description");
            if (description != null) {
                StringBuffer dSbf = new StringBuffer();
                // Text values have to be converted to String for use in Java
                Text[] text = description.fragments();
                for (Text t : text) {
                    dSbf.append(t.toString());
                }
                // Assumes Course exposes setDescription for the description field
                course.setDescription(dSbf.toString());
            }
            course.setId(id);
            list.add(course);
        }
        return list;
    }
