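Contents

- Basic concepts of image segmentation

- Hands-on: the watershed method (part 1)

- Hands-on: the watershed method (part 2)

- How GrabCut works

- Hands-on: implementing the GrabCut main program

- Hands-on: handling GrabCut mouse events

- Hands-on: calling GrabCut to segment an image

- Mean shift image segmentation

- Separating foreground and background in video

- Other methods for separating foreground and background in video

- Image inpainting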

- 图像修复

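Image segmentation is an important area of computer vision. It enables operations such as counting objects and replacing backgrounds, while image inpainting can loosely be regarded as its inverse operation.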

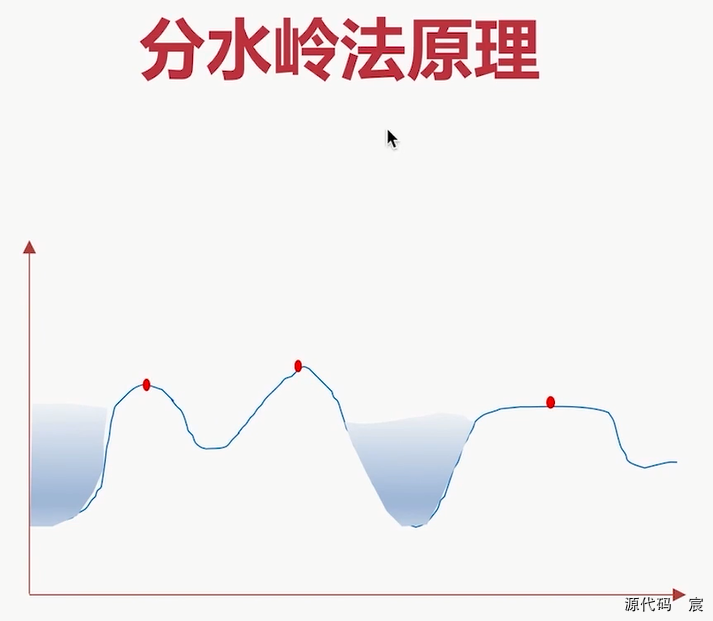

Basic concepts of image segmentation

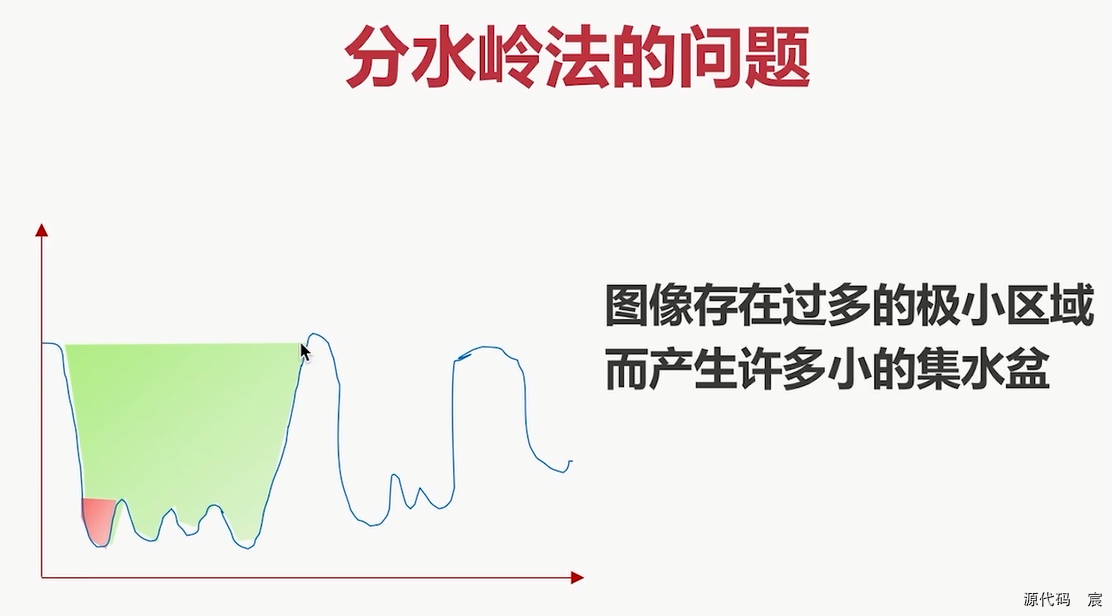

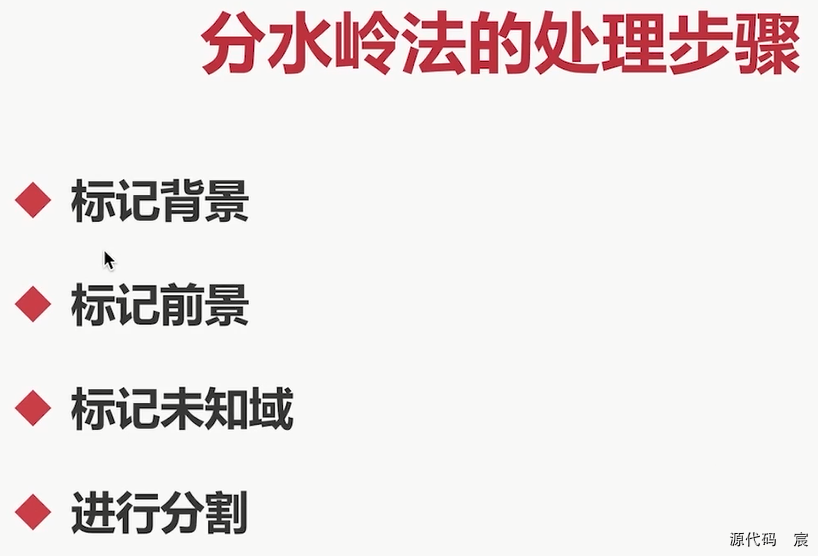

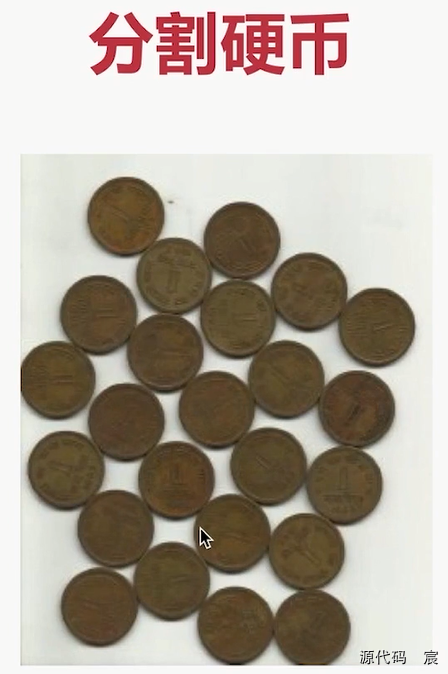

Hands-on: the watershed method (part 1)

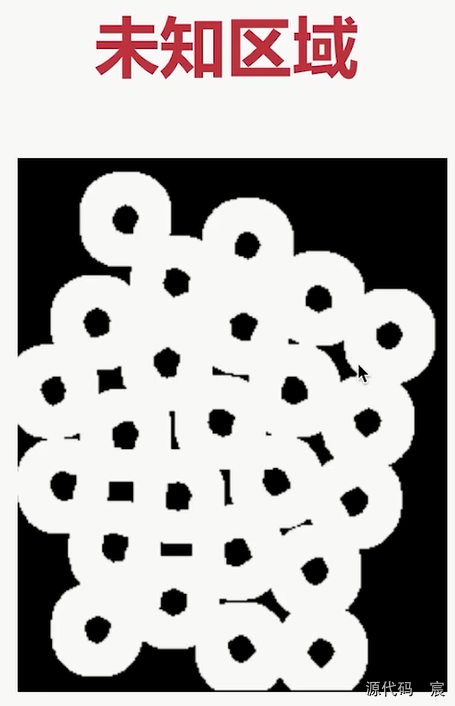

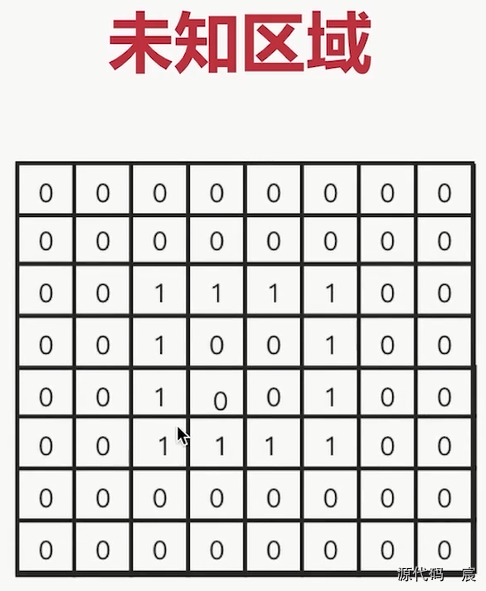

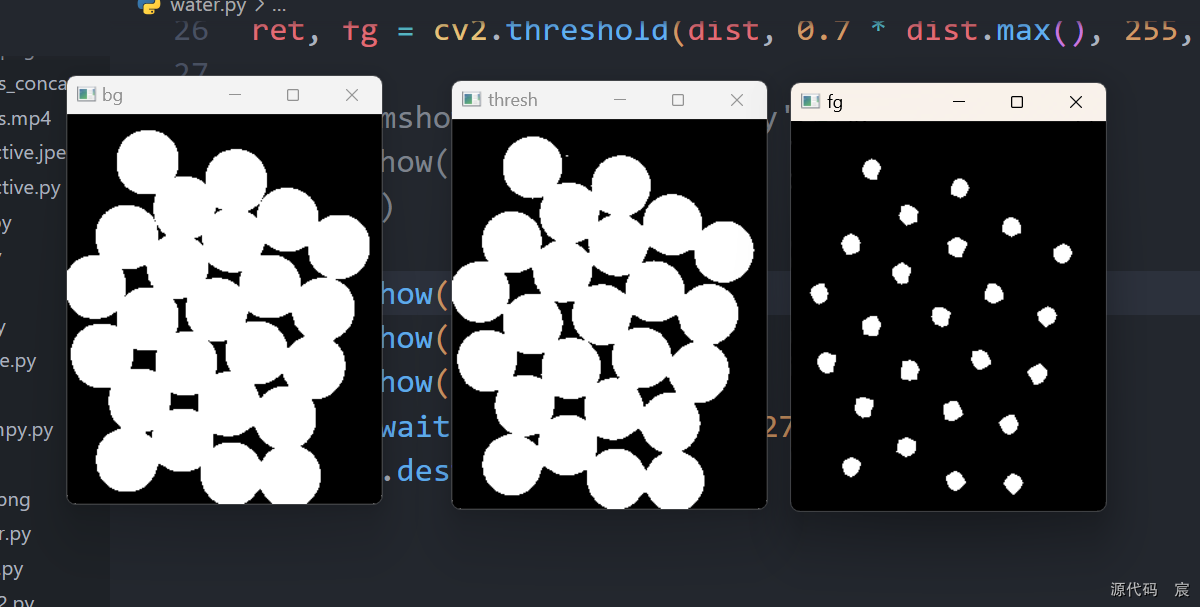

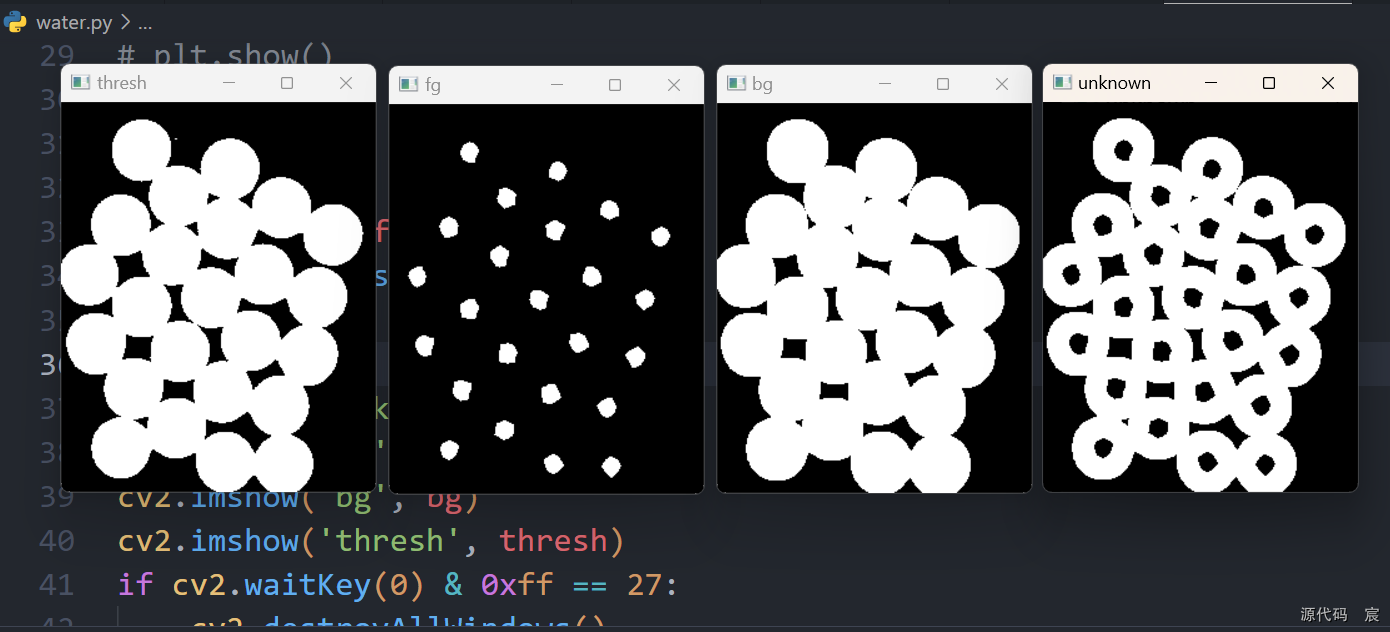

The unknown region is the background area minus the foreground area.

# -*- coding: utf-8 -*-

import cv2

import numpy as np#获取背景

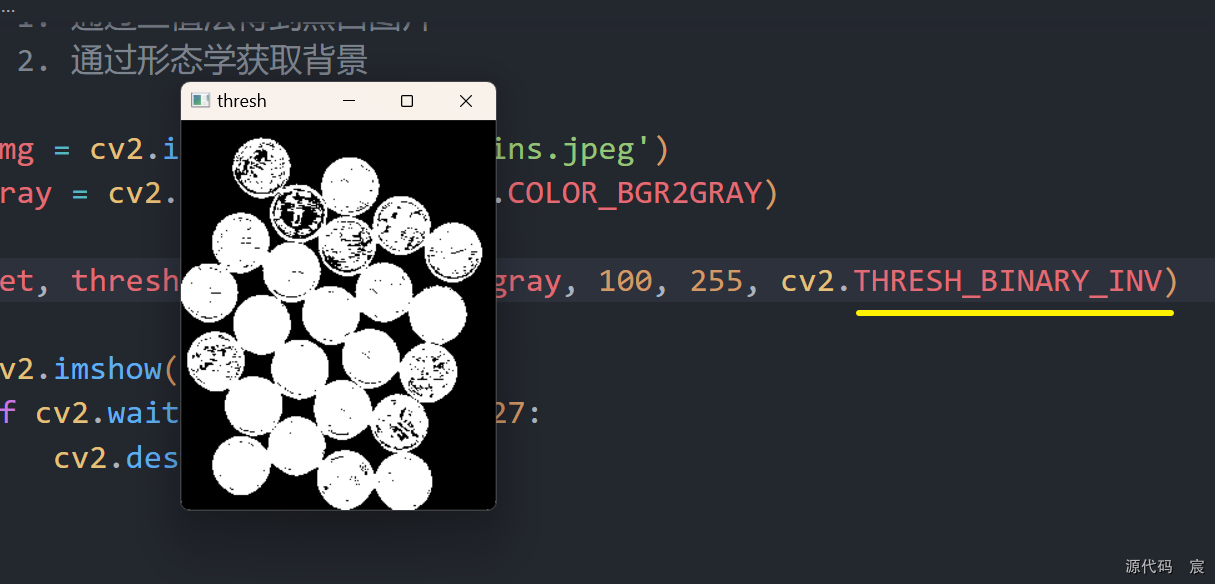

# 1. 通过二值法得到黑白图片

# 2. 通过形态学获取背景img = cv2.imread('./water_coins.jpeg')

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)ret, thresh = cv2.threshold(gray, 100, 255, cv2.THRESH_BINARY)cv2.imshow('thresh', thresh)

if cv2.waitKey(0) & 0xff == 27:cv2.destroyAllWindows()

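    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    # Getting the background:
    # 1. Binarize the image to get a black-and-white picture.
    # 2. Use morphology to obtain the background.
    img = cv2.imread('./water_coins.jpeg')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # With THRESH_OTSU the threshold is computed automatically (the 0 is ignored);
    # THRESH_BINARY_INV inverts the result so that the coins become white.
    ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)

    cv2.imshow('thresh', thresh)
    if cv2.waitKey(0) & 0xff == 27:
        cv2.destroyAllWindows()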

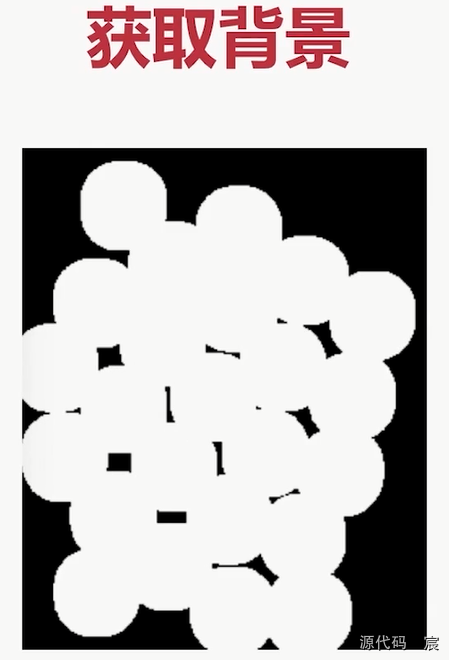

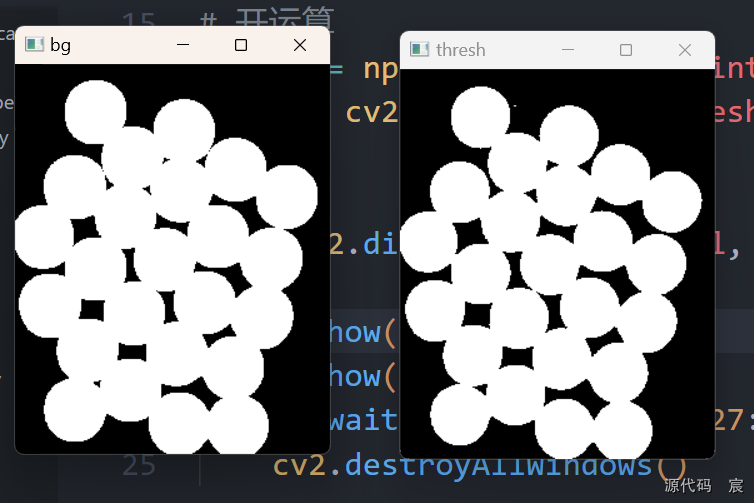

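Dilating the coins shrinks the background, which guarantees that the remaining background contains no coin pixels. The opening operation applied beforehand removes noise from the background.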

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    # Getting the background:
    # 1. Binarize the image to get a black-and-white picture.
    # 2. Use morphology to obtain the background.
    img = cv2.imread('./water_coins.jpeg')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Binarize (inverted Otsu threshold)
    ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)

    # Opening removes small noise (uint8 is the conventional kernel dtype)
    kernel = np.ones((3, 3), np.uint8)
    open1 = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel, iterations=2)

    # Dilation: grow the coins so that the rest is certainly background
    bg = cv2.dilate(open1, kernel, iterations=1)

    cv2.imshow('bg', bg)
    cv2.imshow('thresh', thresh)
    if cv2.waitKey(0) & 0xff == 27:
        cv2.destroyAllWindows()

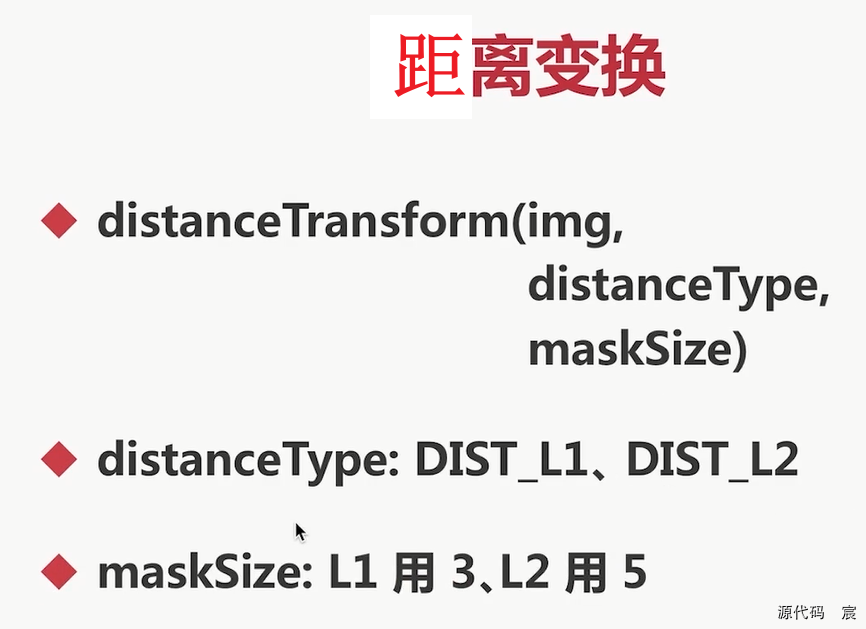

Hands-on: the watershed method (part 2)

# -*- coding: utf-8 -*-

import cv2

import numpy as np

from matplotlib import pyplot as plt#获取背景

# 1. 通过二值法得到黑白图片

# 2. 通过形态学获取背景img = cv2.imread('./water_coins.jpeg')

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)# 二值化

ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)# 开运算

kernel = np.ones((3, 3), np.int8)

open1 = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel, iterations=2)# 膨胀

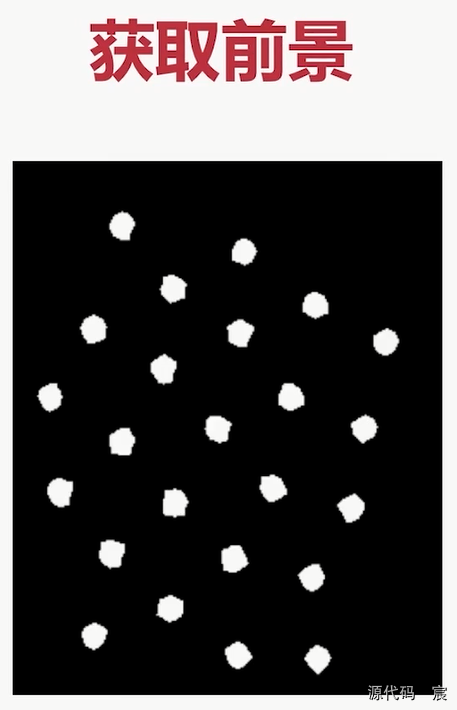

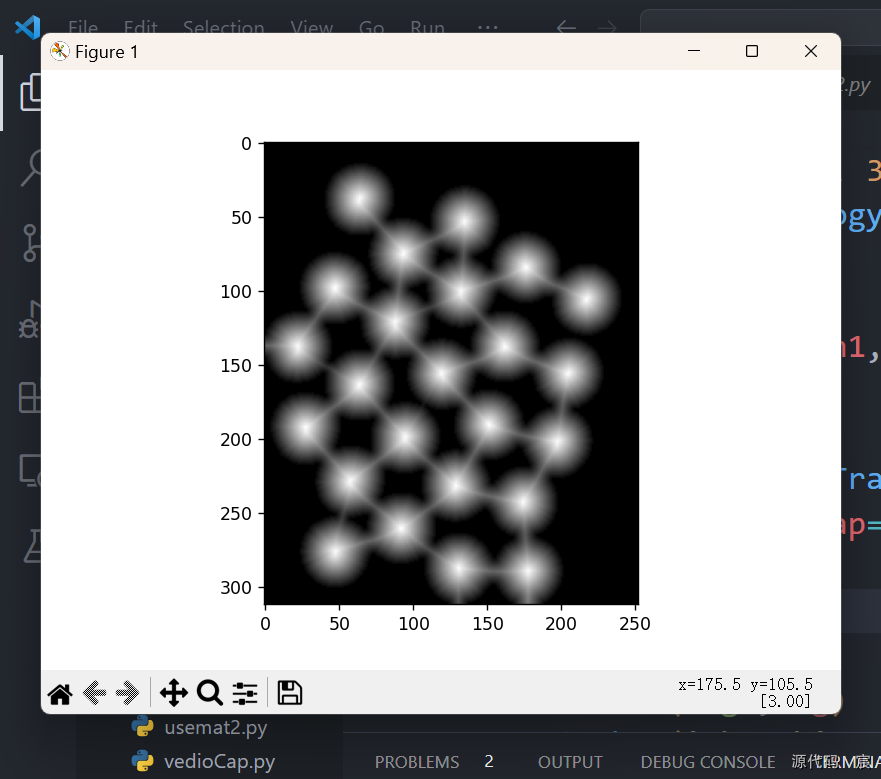

bg = cv2.dilate(open1, kernel, iterations=1)# 获取前景物体

dist = cv2.distanceTransform(open1, cv2.DIST_L2, 5);

plt.imshow(dist, cmap='gray')

plt.show()

exit()cv2.imshow('bg', bg)

cv2.imshow('thresh', thresh)

if cv2.waitKey(0) & 0xff == 27:cv2.destroyAllWindows()

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    img = cv2.imread('./water_coins.jpeg')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Binarize (inverted Otsu threshold)
    ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)

    # Opening removes small noise
    kernel = np.ones((3, 3), np.uint8)
    open1 = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel, iterations=2)

    # Dilation gives the background area
    bg = cv2.dilate(open1, kernel, iterations=1)

    # Getting the foreground objects: keep only pixels whose distance to the
    # background exceeds 70% of the maximum, i.e. the coin centres
    dist = cv2.distanceTransform(open1, cv2.DIST_L2, 5)
    ret, fg = cv2.threshold(dist, 0.7 * dist.max(), 255, cv2.THRESH_BINARY)

    cv2.imshow('fg', fg)
    cv2.imshow('bg', bg)
    cv2.imshow('thresh', thresh)
    if cv2.waitKey(0) & 0xff == 27:
        cv2.destroyAllWindows()

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    img = cv2.imread('./water_coins.jpeg')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Binarize (inverted Otsu threshold)
    ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)

    # Opening removes small noise
    kernel = np.ones((3, 3), np.uint8)
    open1 = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel, iterations=2)

    # Dilation gives the background area
    bg = cv2.dilate(open1, kernel, iterations=1)

    # Getting the foreground objects
    dist = cv2.distanceTransform(open1, cv2.DIST_L2, 5)
    ret, fg = cv2.threshold(dist, 0.7 * dist.max(), 255, cv2.THRESH_BINARY)

    # Getting the unknown region: fg is float32 after the threshold,
    # so convert it to uint8 before subtracting it from bg
    fg = np.uint8(fg)
    unknown = cv2.subtract(bg, fg)

    cv2.imshow('unknown', unknown)
    cv2.imshow('fg', fg)
    cv2.imshow('bg', bg)
    cv2.imshow('thresh', thresh)
    if cv2.waitKey(0) & 0xff == 27:
        cv2.destroyAllWindows()

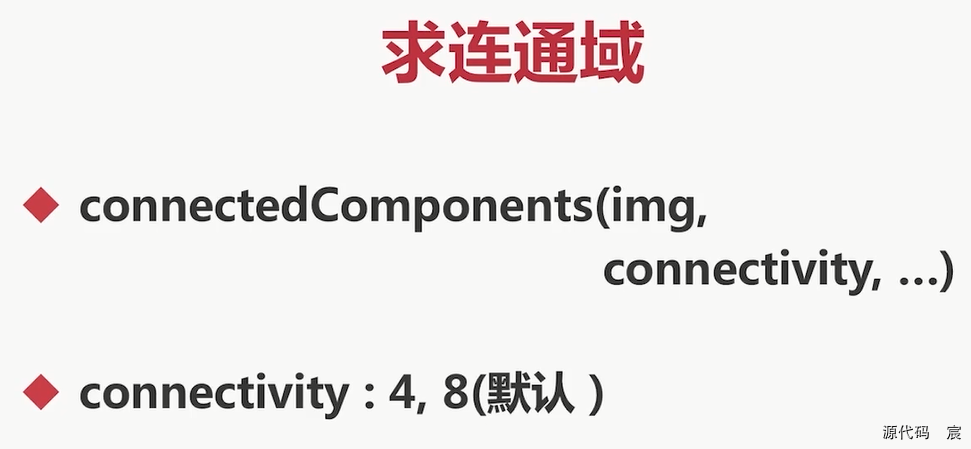

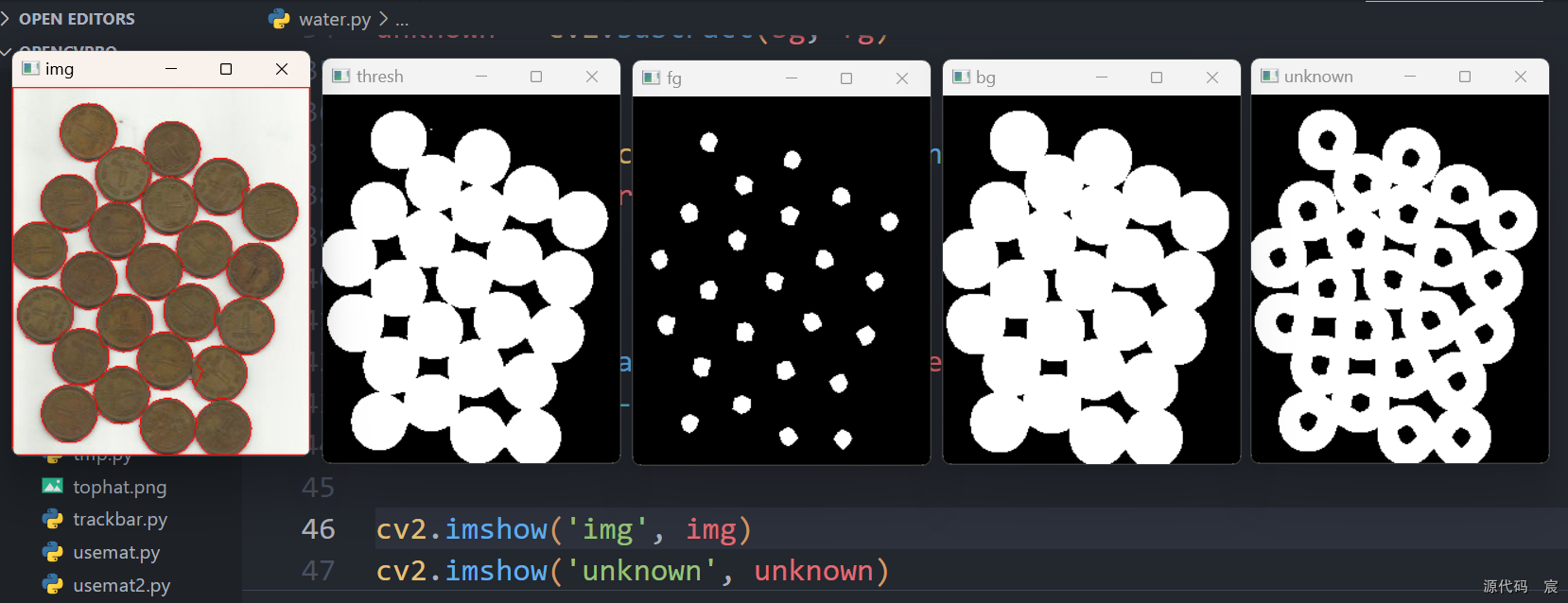

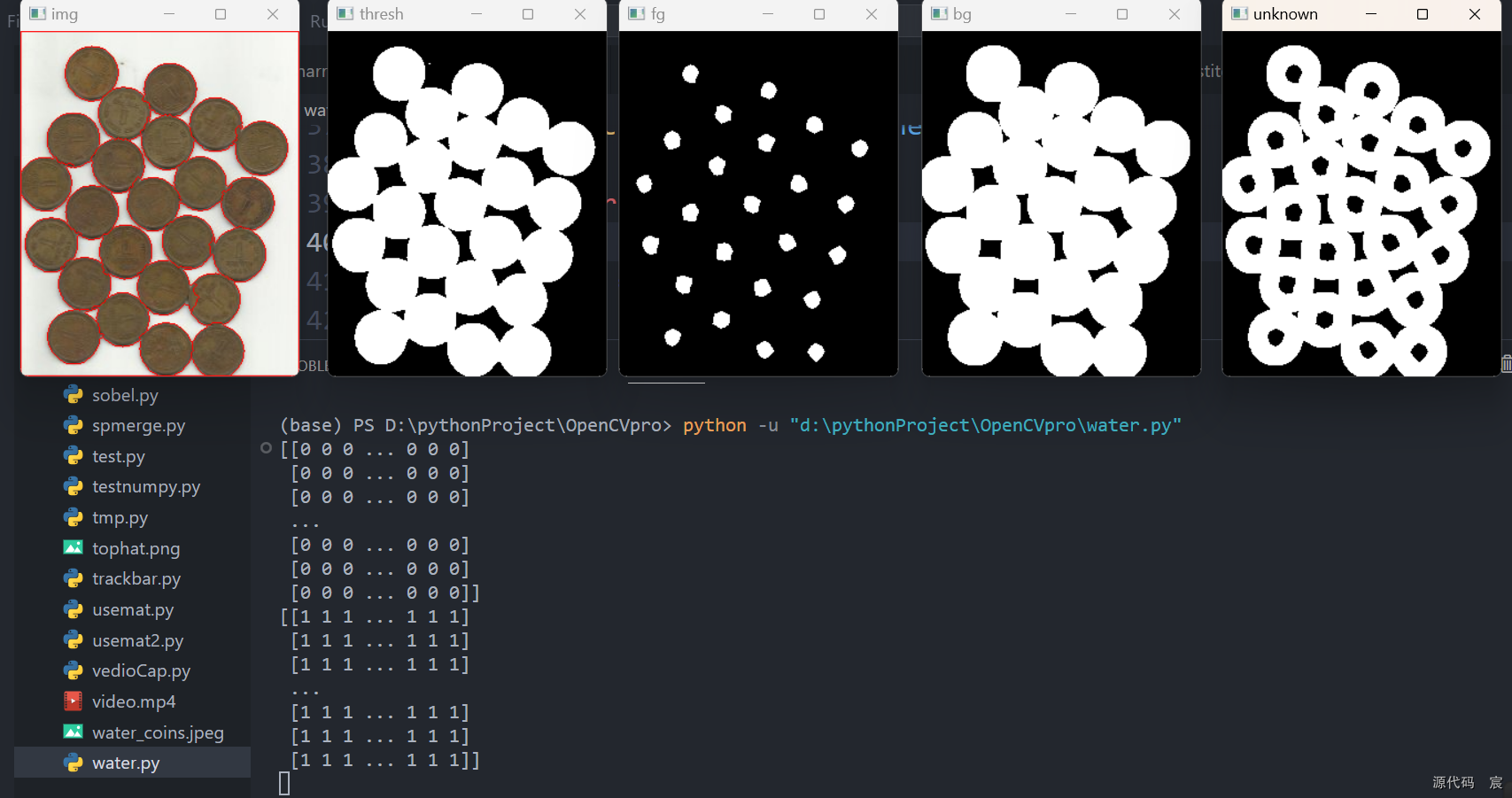

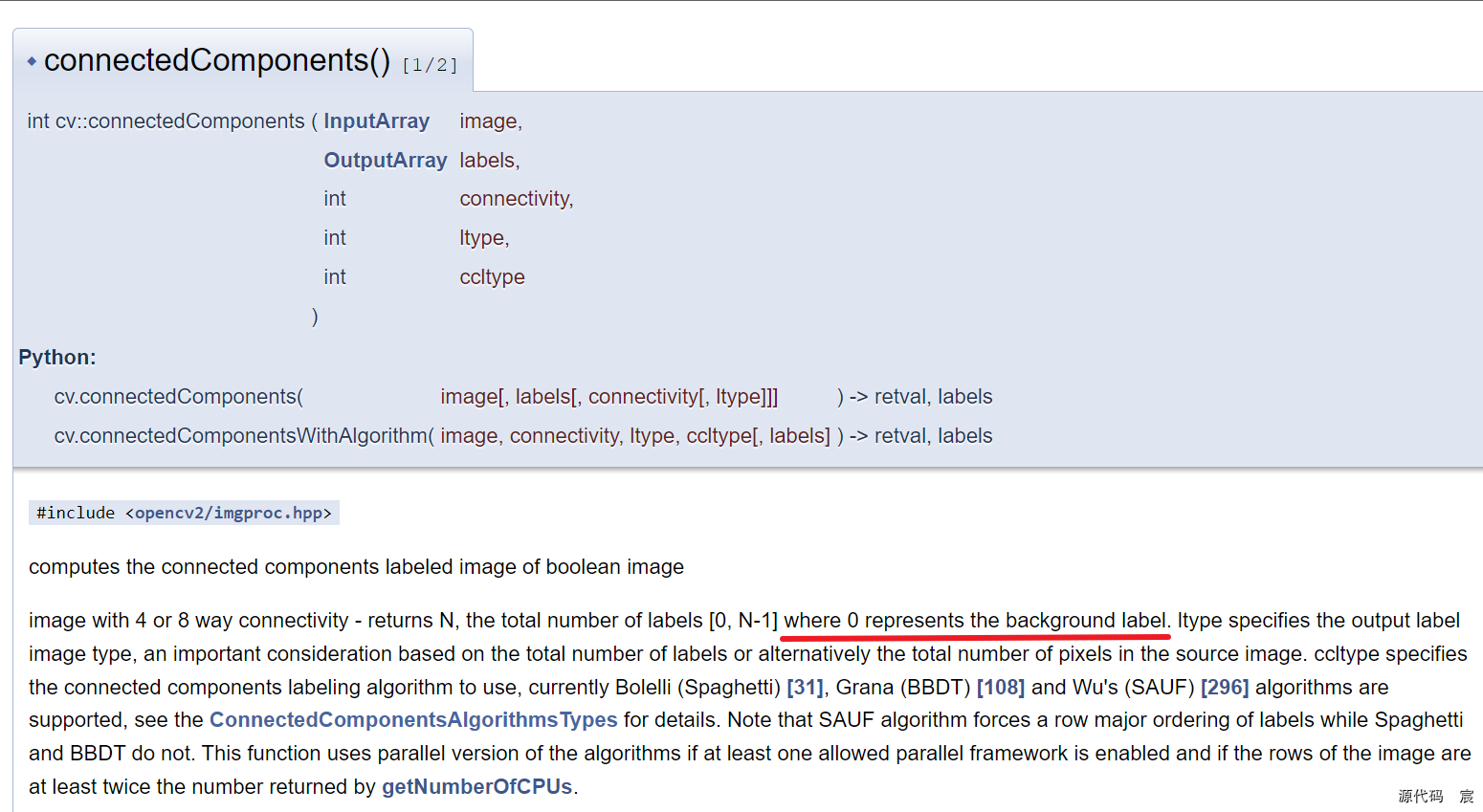

Compute the connected components of the foreground.

# -*- coding: utf-8 -*-

import cv2

import numpy as np

from matplotlib import pyplot as plt#获取背景

# 1. 通过二值法得到黑白图片

# 2. 通过形态学获取背景img = cv2.imread('./water_coins.jpeg')

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)# 二值化

ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)# 开运算

kernel = np.ones((3, 3), np.int8)

open1 = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel, iterations=2)# 膨胀

bg = cv2.dilate(open1, kernel, iterations=1)# 获取前景物体

dist = cv2.distanceTransform(open1, cv2.DIST_L2, 5);ret, fg = cv2.threshold(dist, 0.7 * dist.max(), 255, cv2.THRESH_BINARY)# plt.imshow(dist, cmap='gray')

# plt.show()

# exit()# 获取未知区域

fg = np.uint8(fg)

unknown = cv2.subtract(bg, fg)# 创建连通域

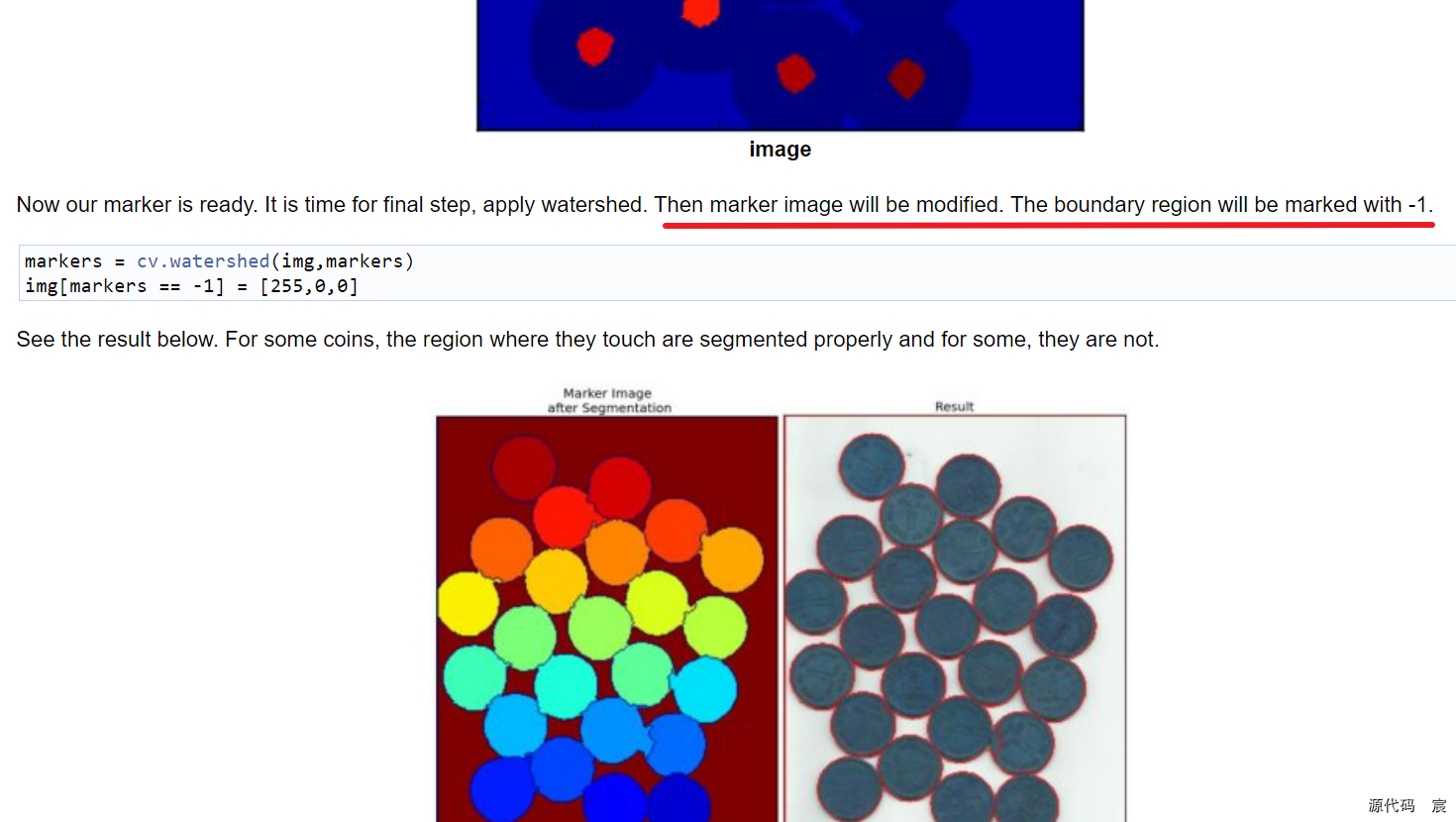

ret, marker = cv2.connectedComponents(fg)

print(marker)

marker = marker + 1

print(marker)

marker[unknown == 255] = 0# 进行图像分割

result = cv2.watershed(img, marker)

img[result == -1] = [0, 0, 255]cv2.imshow('img', img)

cv2.imshow('unknown', unknown)

cv2.imshow('fg', fg)

cv2.imshow('bg', bg)

cv2.imshow('thresh', thresh)

if cv2.waitKey(0) & 0xff == 27:cv2.destroyAllWindows()

See the official OpenCV documentation for the details.

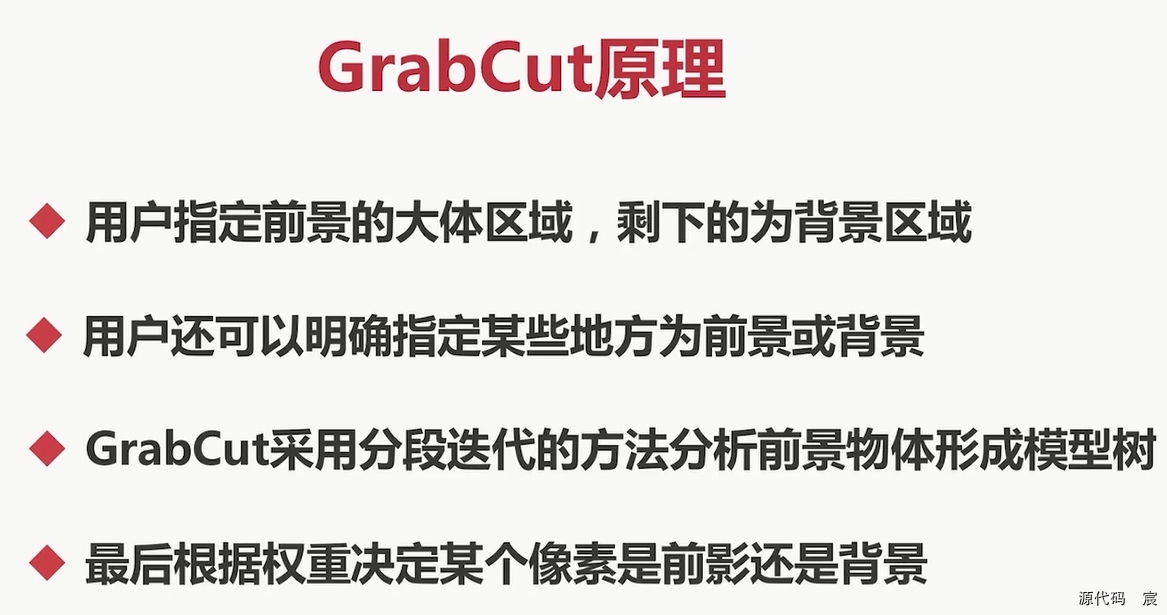

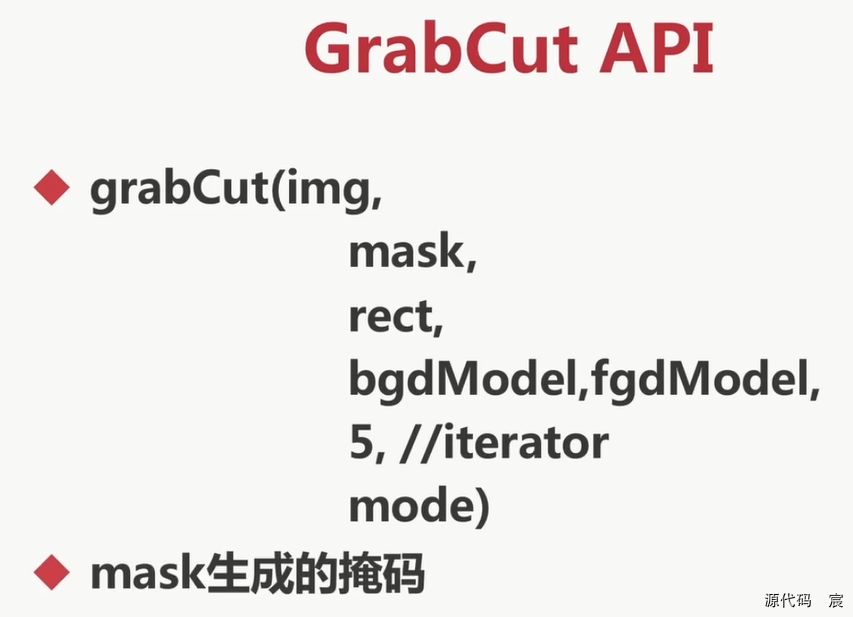

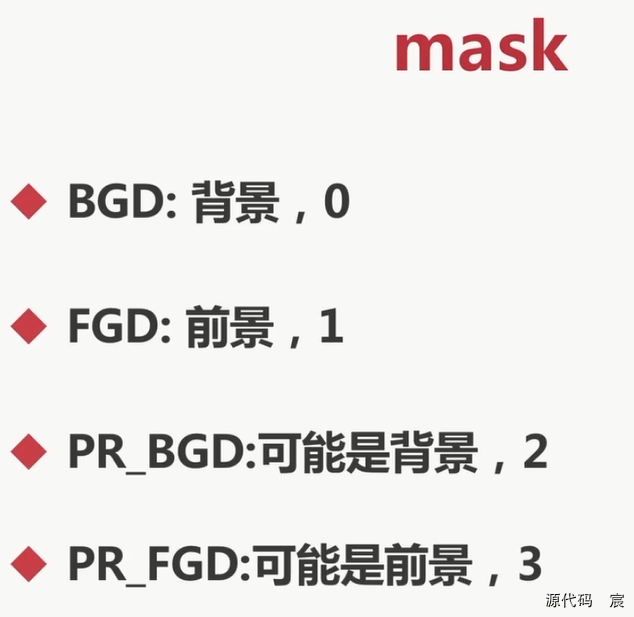

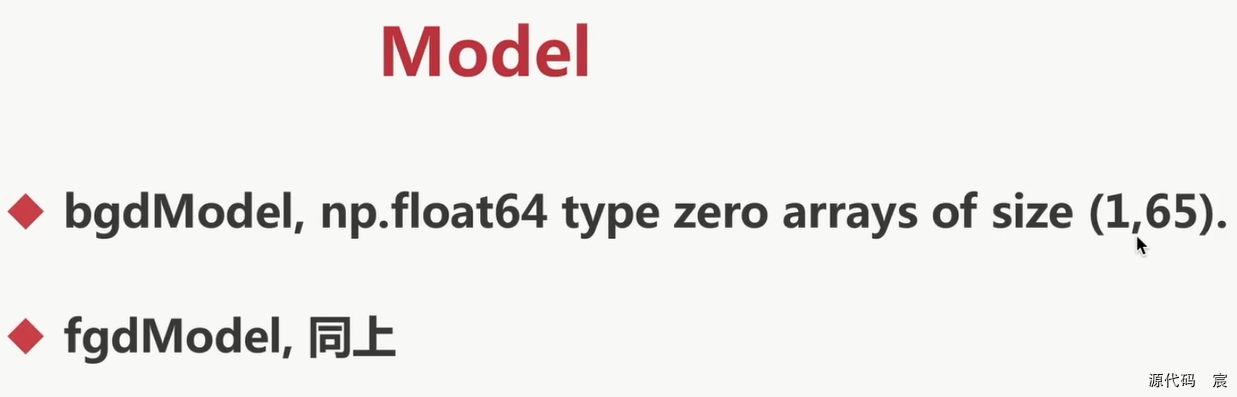

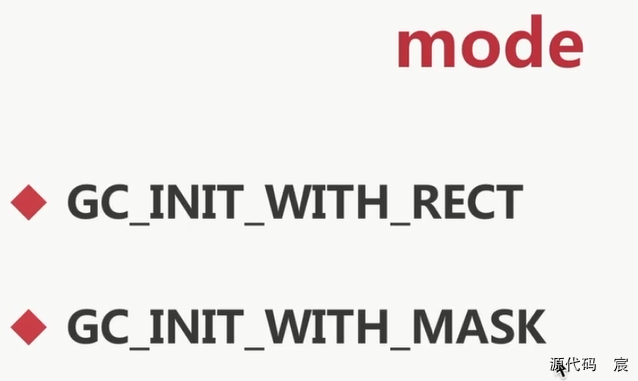

How GrabCut works

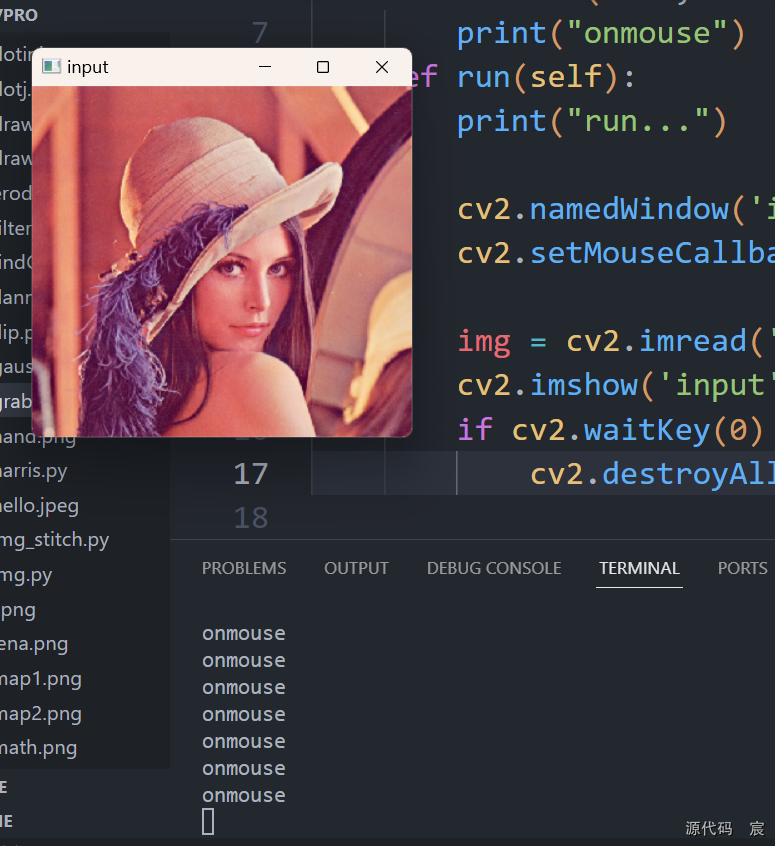

Hands-on: implementing the GrabCut main program

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np


    class App:
        def onmouse(self, event, x, y, flags, param):
            print("onmouse")

        def run(self):
            print("run...")
            cv2.namedWindow('input', cv2.WINDOW_NORMAL)
            cv2.setMouseCallback('input', self.onmouse)

            img = cv2.imread('./lena.png')
            cv2.imshow('input', img)
            if cv2.waitKey(0) & 0xff == 27:
                cv2.destroyAllWindows()


    App().run()

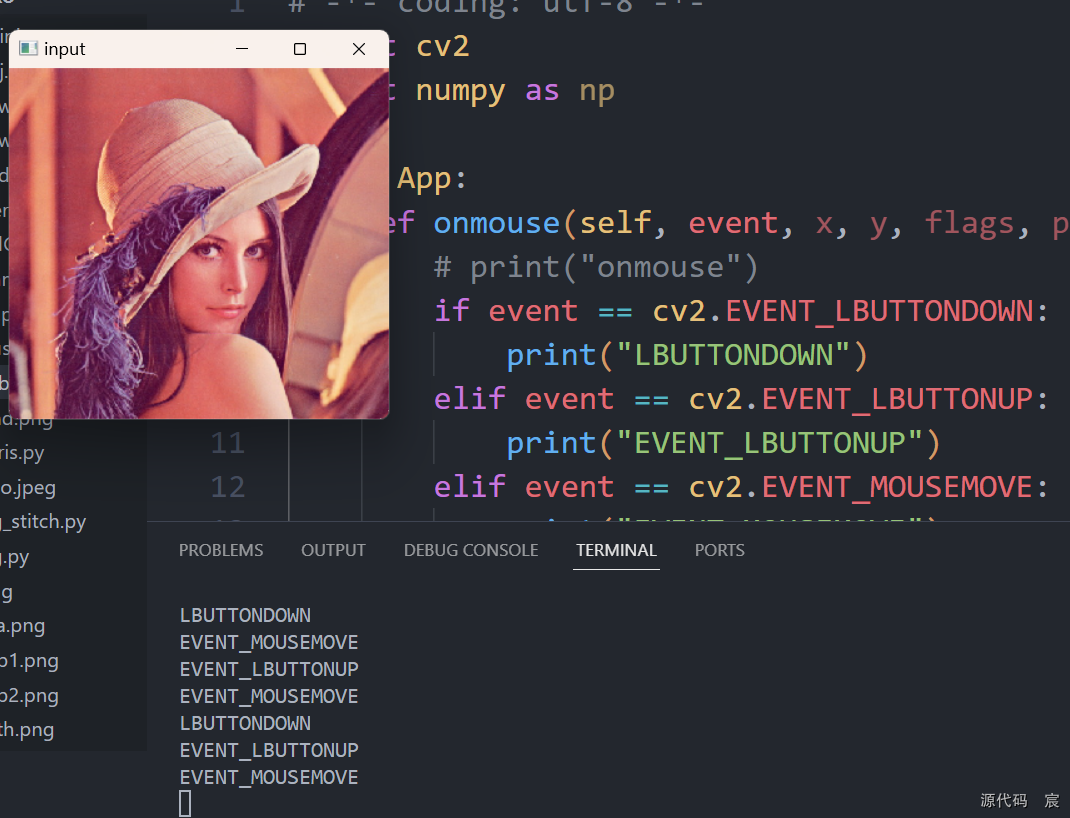

Hands-on: handling GrabCut mouse events

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np


    class App:
        def onmouse(self, event, x, y, flags, param):
            if event == cv2.EVENT_LBUTTONDOWN:
                print("LBUTTONDOWN")
            elif event == cv2.EVENT_LBUTTONUP:
                print("EVENT_LBUTTONUP")
            elif event == cv2.EVENT_MOUSEMOVE:
                print("EVENT_MOUSEMOVE")

        def run(self):
            print("run...")
            cv2.namedWindow('input', cv2.WINDOW_NORMAL)
            cv2.setMouseCallback('input', self.onmouse)

            img = cv2.imread('./lena.png')
            cv2.imshow('input', img)
            if cv2.waitKey(0) & 0xff == 27:
                cv2.destroyAllWindows()


    App().run()

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np


    class App:
        startX = 0
        startY = 0

        def onmouse(self, event, x, y, flags, param):
            if event == cv2.EVENT_LBUTTONDOWN:
                # Remember where the drag started
                self.startX = x
                self.startY = y
            elif event == cv2.EVENT_LBUTTONUP:
                # Draw the final rectangle when the button is released
                cv2.rectangle(self.img, (self.startX, self.startY), (x, y), (0, 255, 0), 2)
            elif event == cv2.EVENT_MOUSEMOVE:
                print("EVENT_MOUSEMOVE")

        def run(self):
            print("run...")
            cv2.namedWindow('input', cv2.WINDOW_NORMAL)
            cv2.setMouseCallback('input', self.onmouse)

            # Instance attribute defined implicitly here
            self.img = cv2.imread('./lena.png')

            # Redraw in a loop so that the rectangle shows up
            while True:
                cv2.imshow('input', self.img)
                if cv2.waitKey(100) & 0xff == 27:
                    cv2.destroyAllWindows()
                    break


    App().run()

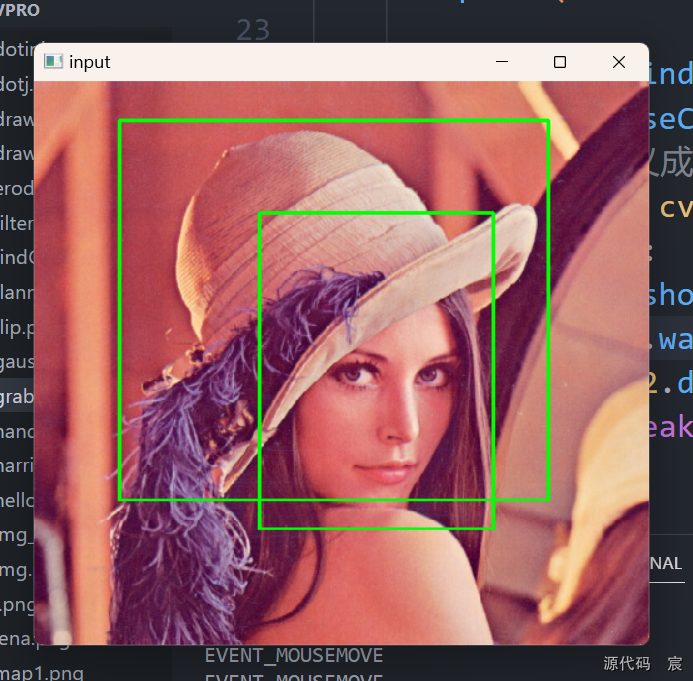

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np


    class App:
        startX = 0
        startY = 0
        flag_rect = False  # True while the left button is held down

        def onmouse(self, event, x, y, flags, param):
            if event == cv2.EVENT_LBUTTONDOWN:
                self.flag_rect = True
                self.startX = x
                self.startY = y
            elif event == cv2.EVENT_LBUTTONUP:
                cv2.rectangle(self.img, (self.startX, self.startY), (x, y), (0, 255, 0), 3)
                self.flag_rect = False
            elif event == cv2.EVENT_MOUSEMOVE:
                if self.flag_rect:
                    # On every move, restore img from the clean copy img2 so that
                    # rectangles drawn during earlier moves do not accumulate
                    self.img = self.img2.copy()
                    cv2.rectangle(self.img, (self.startX, self.startY), (x, y), (255, 255, 0), 3)

        def run(self):
            print("run...")
            cv2.namedWindow('input', cv2.WINDOW_NORMAL)
            cv2.setMouseCallback('input', self.onmouse)

            # Instance attributes defined implicitly here
            self.img = cv2.imread('./lena.png')
            self.img2 = self.img.copy()

            while True:
                cv2.imshow('input', self.img)
                if cv2.waitKey(100) & 0xff == 27:
                    cv2.destroyAllWindows()
                    break


    App().run()

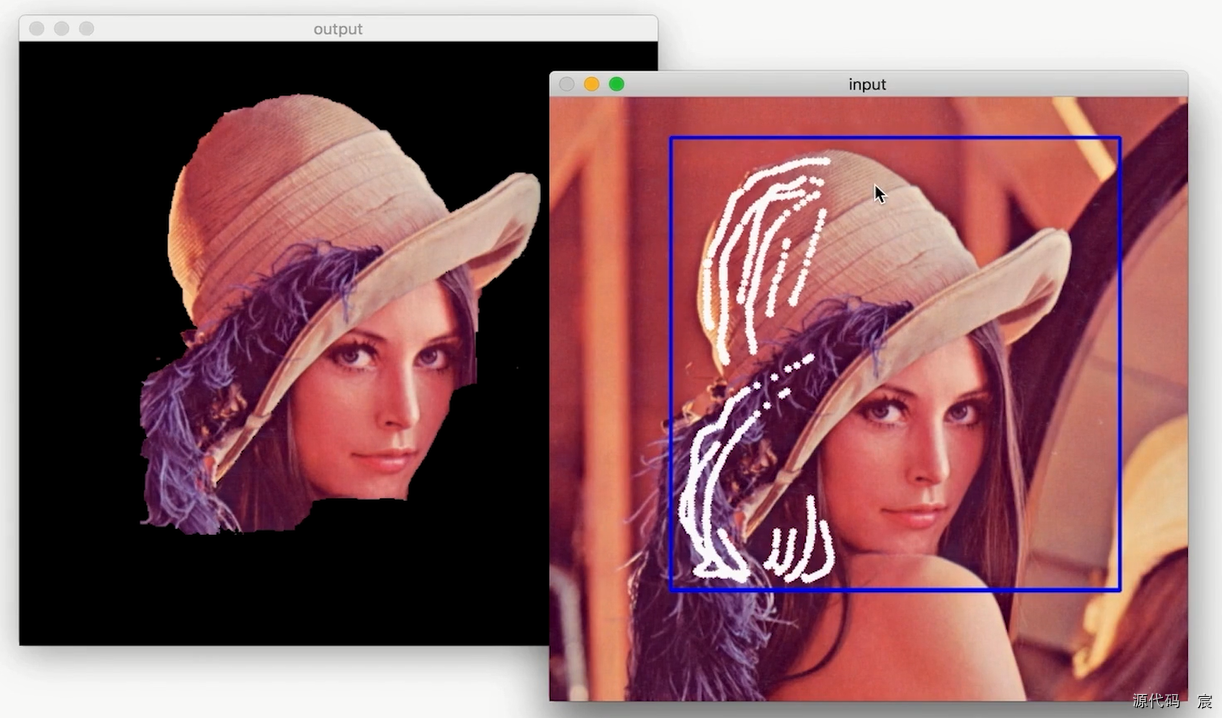

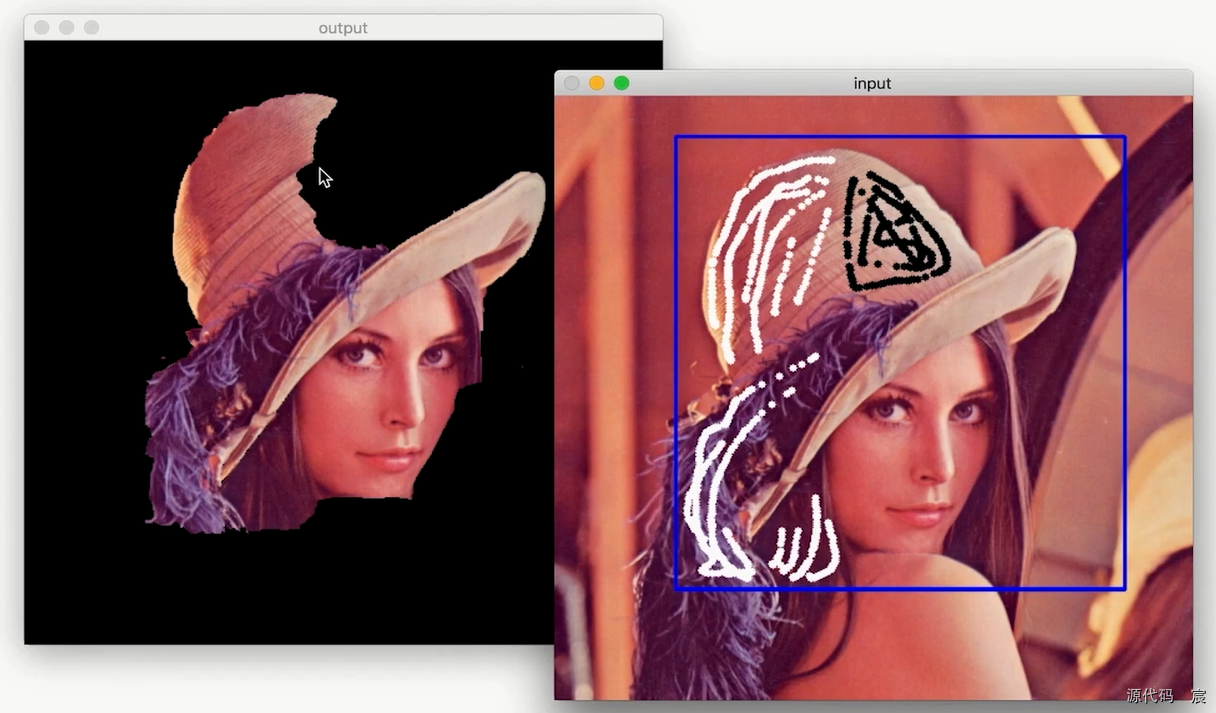

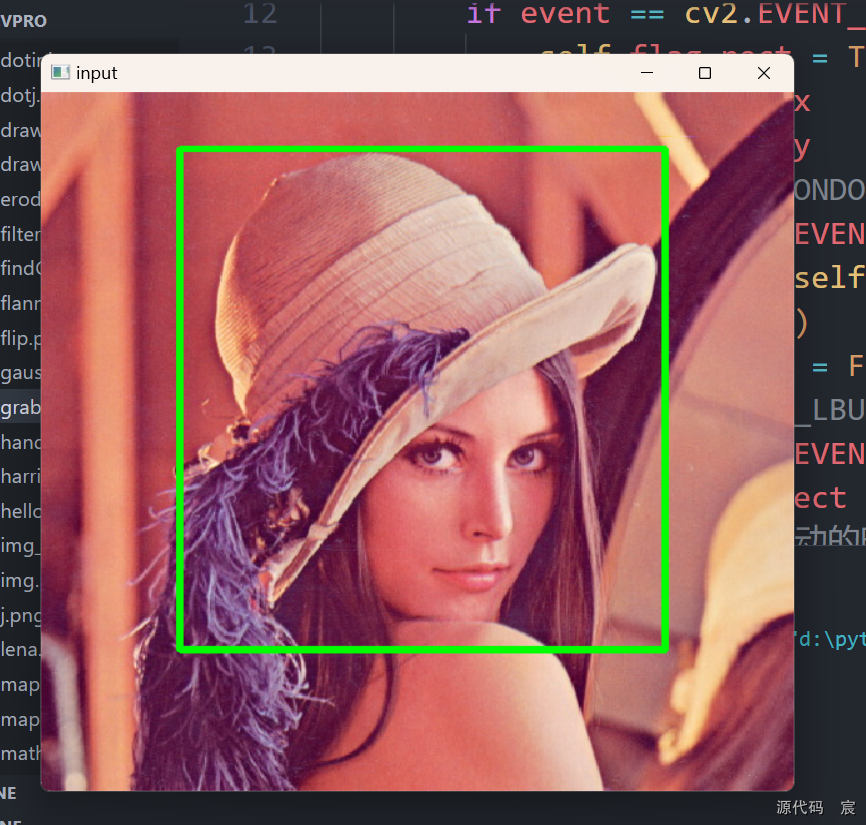

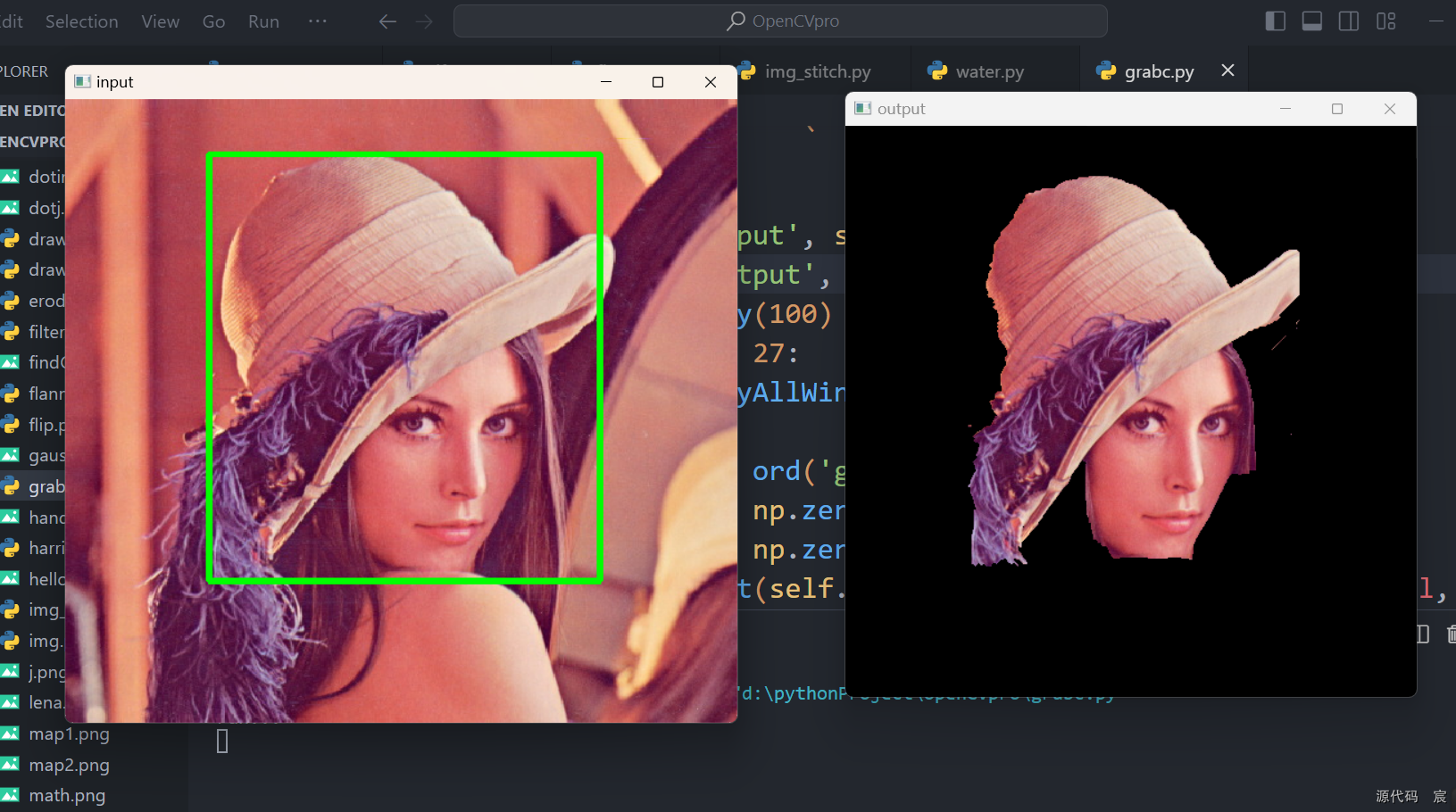

Hands-on: calling GrabCut to segment an image

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np


    class App:
        startX = 0
        startY = 0
        flag_rect = False  # True while the left button is held down
        rect = (0, 0, 0, 0)

        def onmouse(self, event, x, y, flags, param):
            if event == cv2.EVENT_LBUTTONDOWN:
                self.flag_rect = True
                self.startX = x
                self.startY = y
            elif event == cv2.EVENT_LBUTTONUP:
                cv2.rectangle(self.img, (self.startX, self.startY), (x, y), (0, 255, 0), 3)
                self.flag_rect = False
                # grabCut expects the rectangle as (x, y, width, height)
                self.rect = (min(self.startX, x), min(self.startY, y),
                             abs(self.startX - x), abs(self.startY - y))
            elif event == cv2.EVENT_MOUSEMOVE:
                if self.flag_rect:
                    # On every move, restore img from the clean copy img2 so that
                    # rectangles drawn during earlier moves do not accumulate
                    self.img = self.img2.copy()
                    cv2.rectangle(self.img, (self.startX, self.startY), (x, y), (255, 255, 0), 3)

        def run(self):
            print("run...")
            cv2.namedWindow('input', cv2.WINDOW_NORMAL)
            cv2.setMouseCallback('input', self.onmouse)

            # Instance attributes defined implicitly here
            self.img = cv2.imread('./lena.png')
            self.img2 = self.img.copy()
            self.mask = np.zeros(self.img.shape[:2], dtype=np.uint8)
            self.output = np.zeros(self.img.shape, np.uint8)

            while True:
                cv2.imshow('input', self.img)
                cv2.imshow('output', self.output)
                k = cv2.waitKey(100) & 0xff
                if k == 27:
                    cv2.destroyAllWindows()
                    break
                # Press 'g' to run GrabCut; skip it until a rectangle has been drawn
                if k == ord('g') and self.rect[2] > 0 and self.rect[3] > 0:
                    bgdmodel = np.zeros((1, 65), np.float64)
                    fgdmodel = np.zeros((1, 65), np.float64)
                    cv2.grabCut(self.img2, self.mask, self.rect, bgdmodel, fgdmodel,
                                1, cv2.GC_INIT_WITH_RECT)
                    # Keep pixels marked as foreground (1) or probable foreground (3)
                    mask2 = np.where((self.mask == 1) | (self.mask == 3), 255, 0).astype('uint8')
                    self.output = cv2.bitwise_and(self.img2, self.img2, mask=mask2)


    App().run()

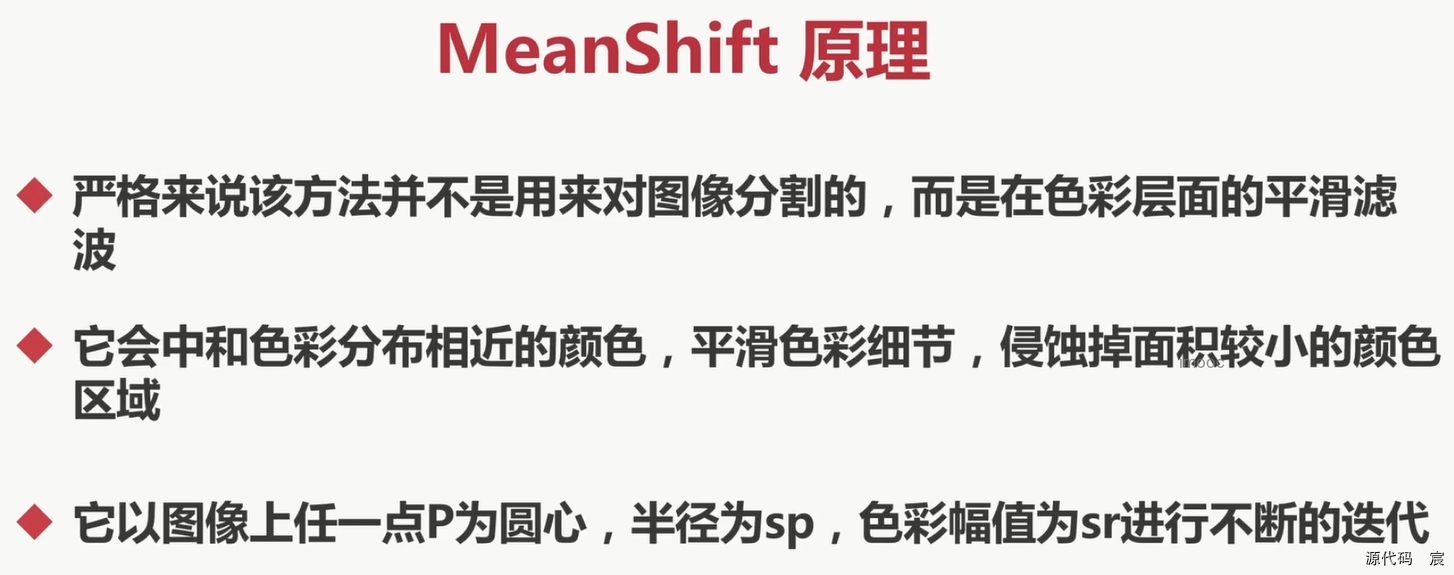

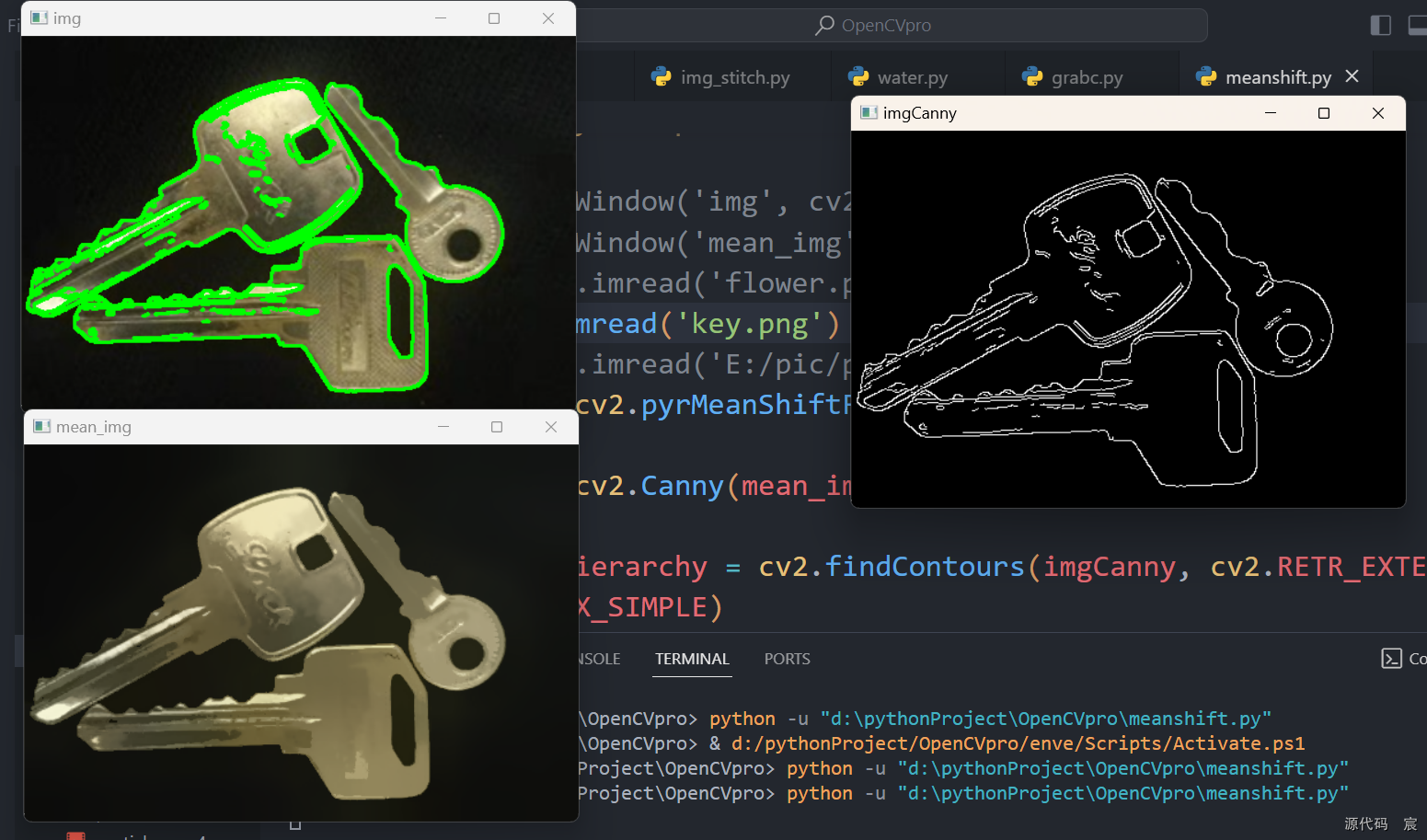

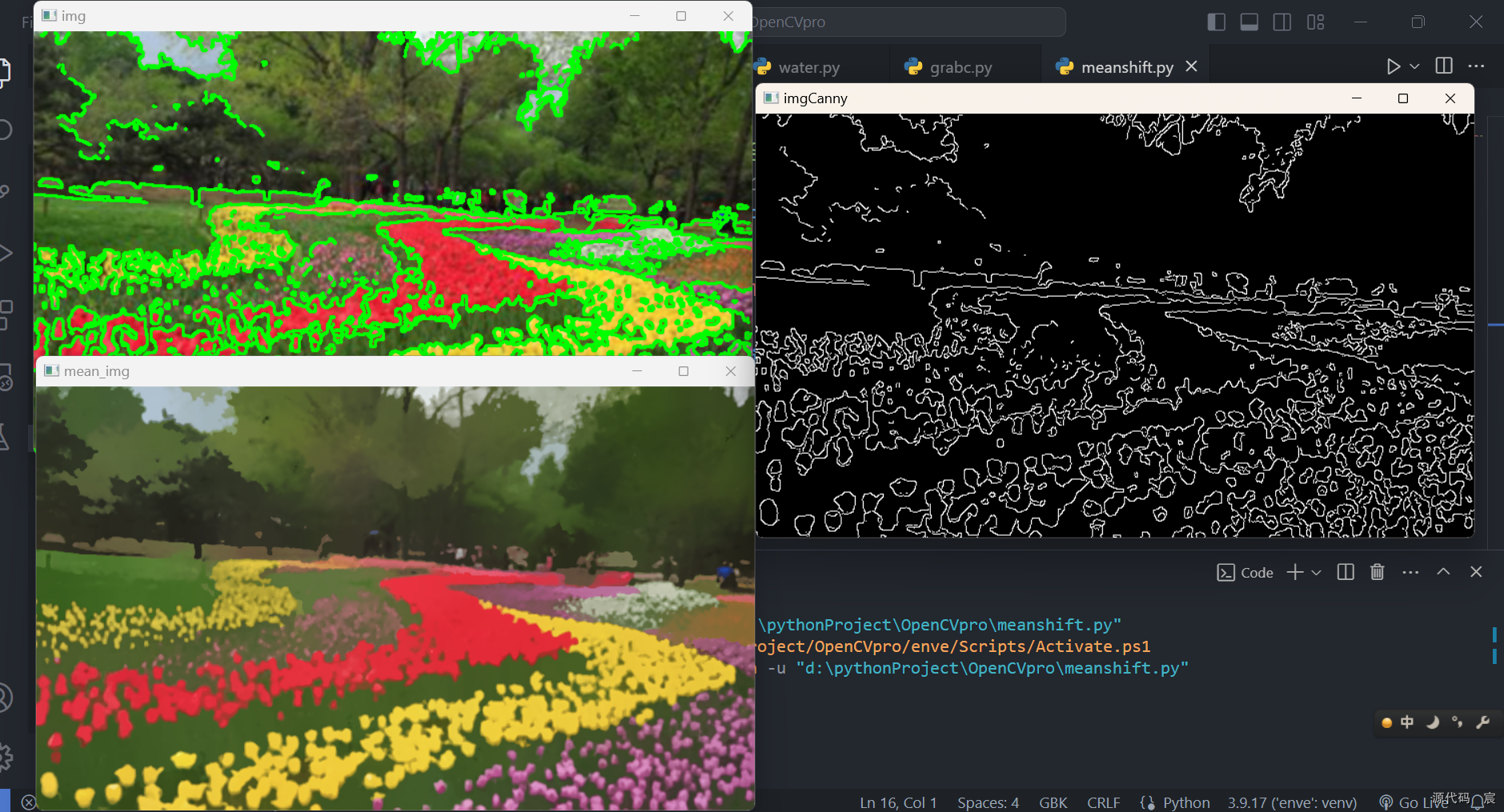

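Mean shift image segmentation

Mean shift segments an image based on color.

The larger `sp` (the spatial window radius) is, the stronger the blurring; the smaller `sp` is, the weaker the blurring.

The larger `sr` (the color window radius) is, the more likely neighboring areas are merged into a single region.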

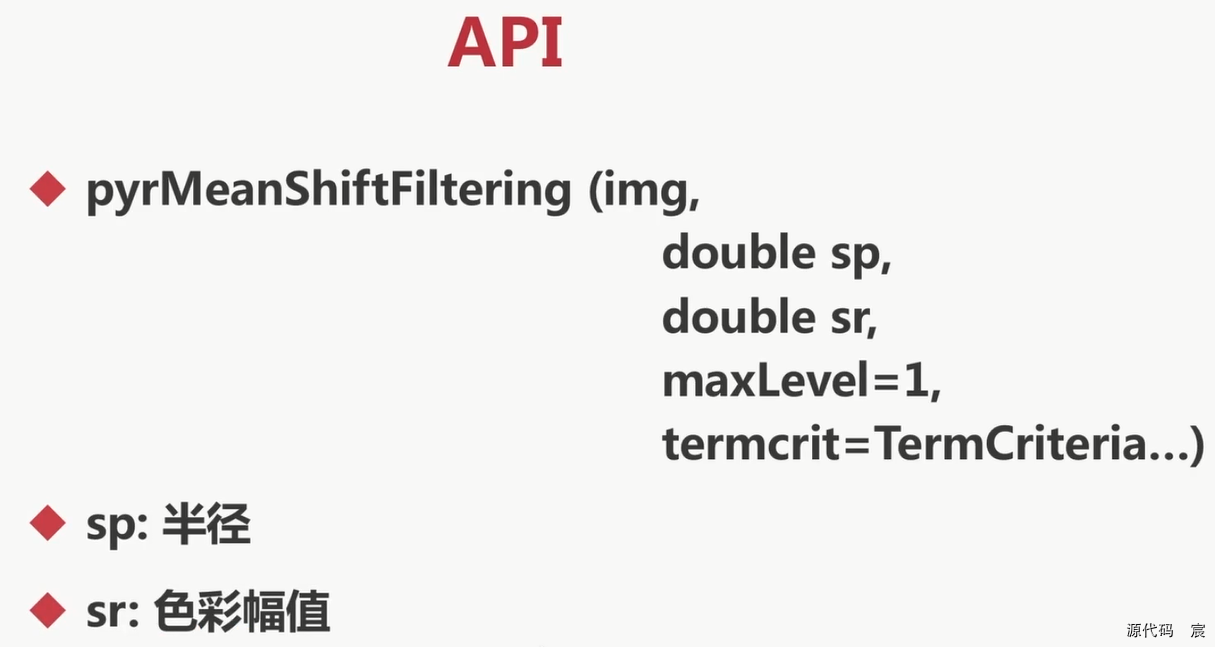

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    img = cv2.imread('flower.png')

    # sp = 20, sr = 30
    mean_img = cv2.pyrMeanShiftFiltering(img, 20, 30)

    cv2.imshow('img', img)
    cv2.imshow('mean_img', mean_img)
    if cv2.waitKey(0) & 0xff == 27:
        cv2.destroyAllWindows()

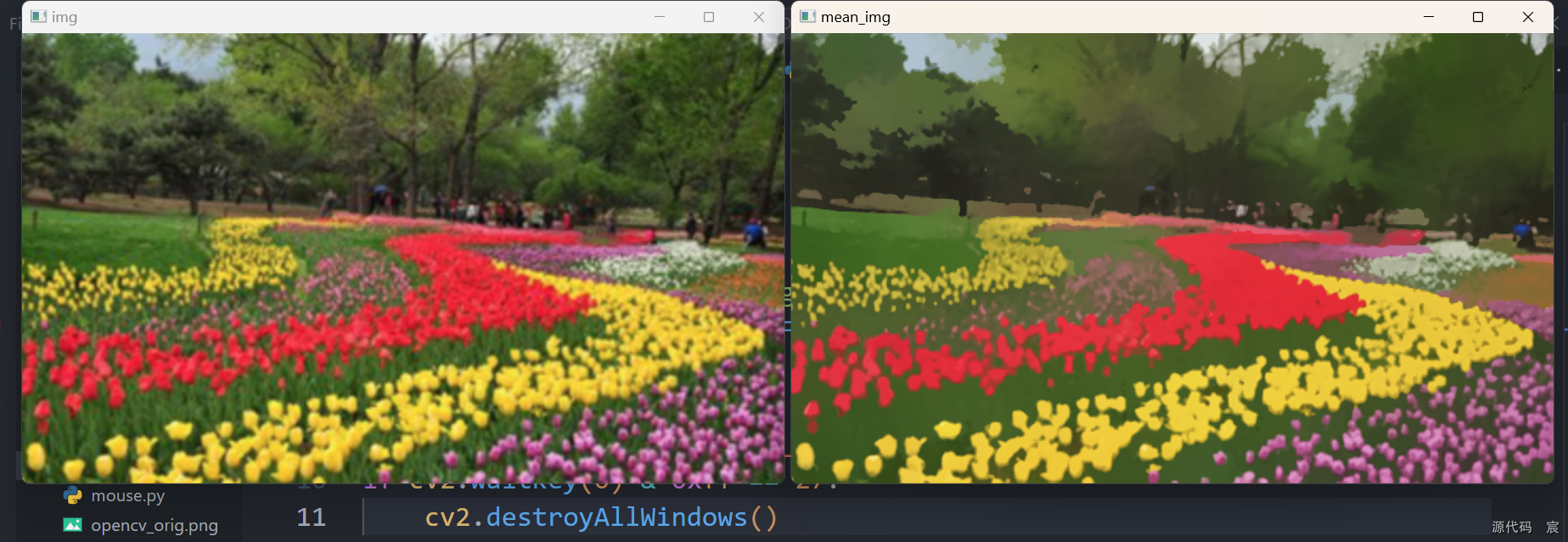

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    # cv2.namedWindow('img', cv2.WINDOW_NORMAL)
    # cv2.namedWindow('mean_img', cv2.WINDOW_NORMAL)

    img = cv2.imread('flower.png')
    # img = cv2.imread('E:/pic/picc/IMG_20230610_192709.jpg')

    mean_img = cv2.pyrMeanShiftFiltering(img, 20, 30)

    # Edge detection on the smoothed image
    imgCanny = cv2.Canny(mean_img, 150, 200)

    cv2.imshow('img', img)
    cv2.imshow('mean_img', mean_img)
    cv2.imshow('imgCanny', imgCanny)
    if cv2.waitKey(0) & 0xff == 27:
        cv2.destroyAllWindows()

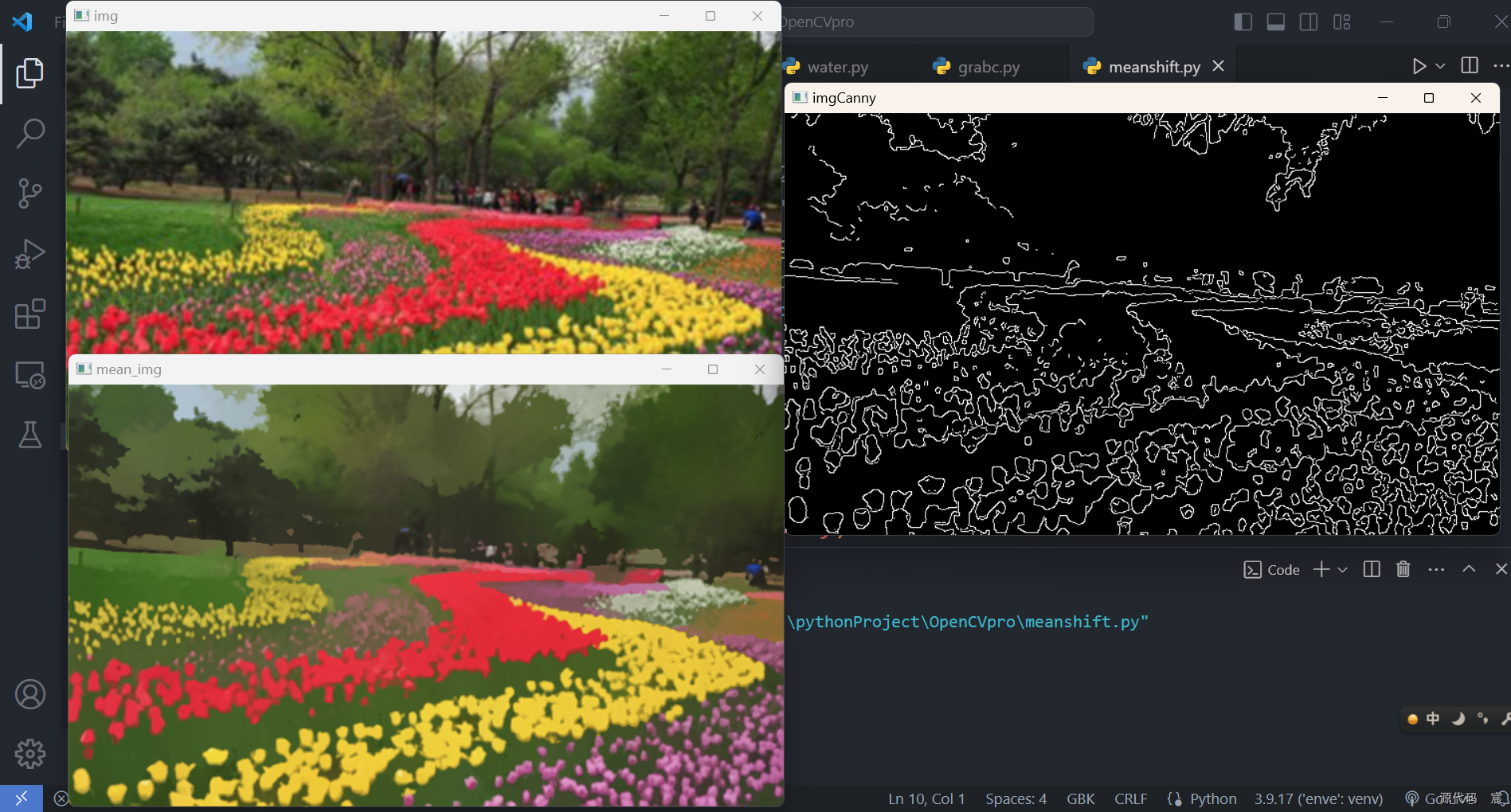

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    # cv2.namedWindow('img', cv2.WINDOW_NORMAL)
    # cv2.namedWindow('mean_img', cv2.WINDOW_NORMAL)

    # img = cv2.imread('flower.png')
    img = cv2.imread('key.png')
    # img = cv2.imread('E:/pic/picc/IMG_20230610_192709.jpg')

    mean_img = cv2.pyrMeanShiftFiltering(img, 20, 30)
    imgCanny = cv2.Canny(mean_img, 150, 200)

    # Find the outer contours on the edge map and draw them on the original image
    # (OpenCV 4.x returns two values here; 3.x returns three)
    contours, hierarchy = cv2.findContours(imgCanny, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    cv2.drawContours(img, contours, -1, (0, 255, 0), 2)

    cv2.imshow('img', img)
    cv2.imshow('mean_img', mean_img)
    cv2.imshow('imgCanny', imgCanny)
    if cv2.waitKey(0) & 0xff == 27:
        cv2.destroyAllWindows()

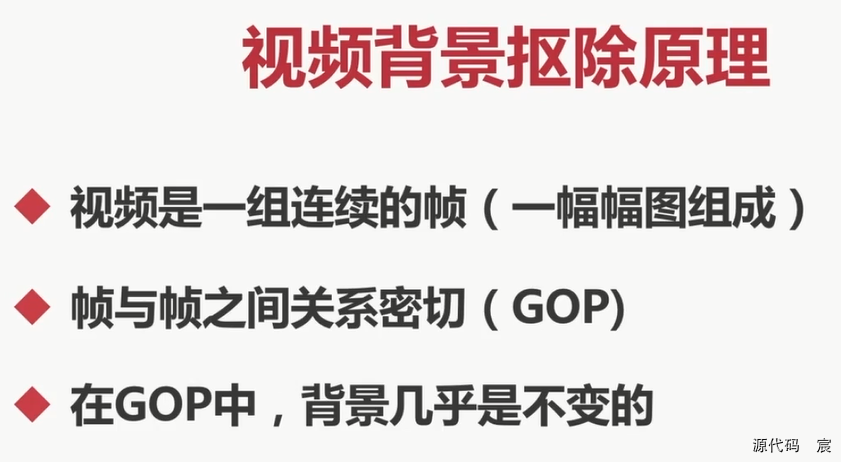

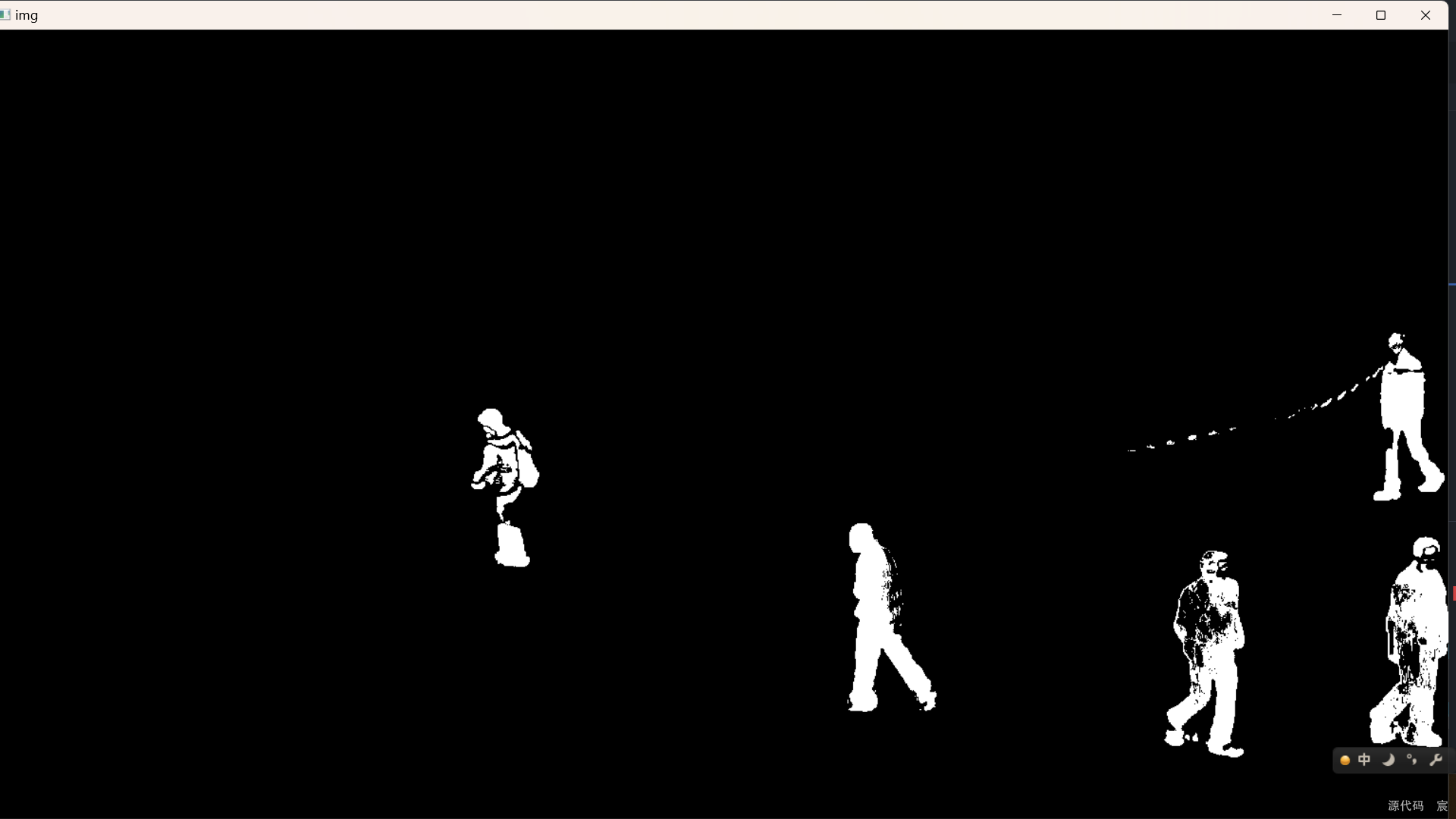

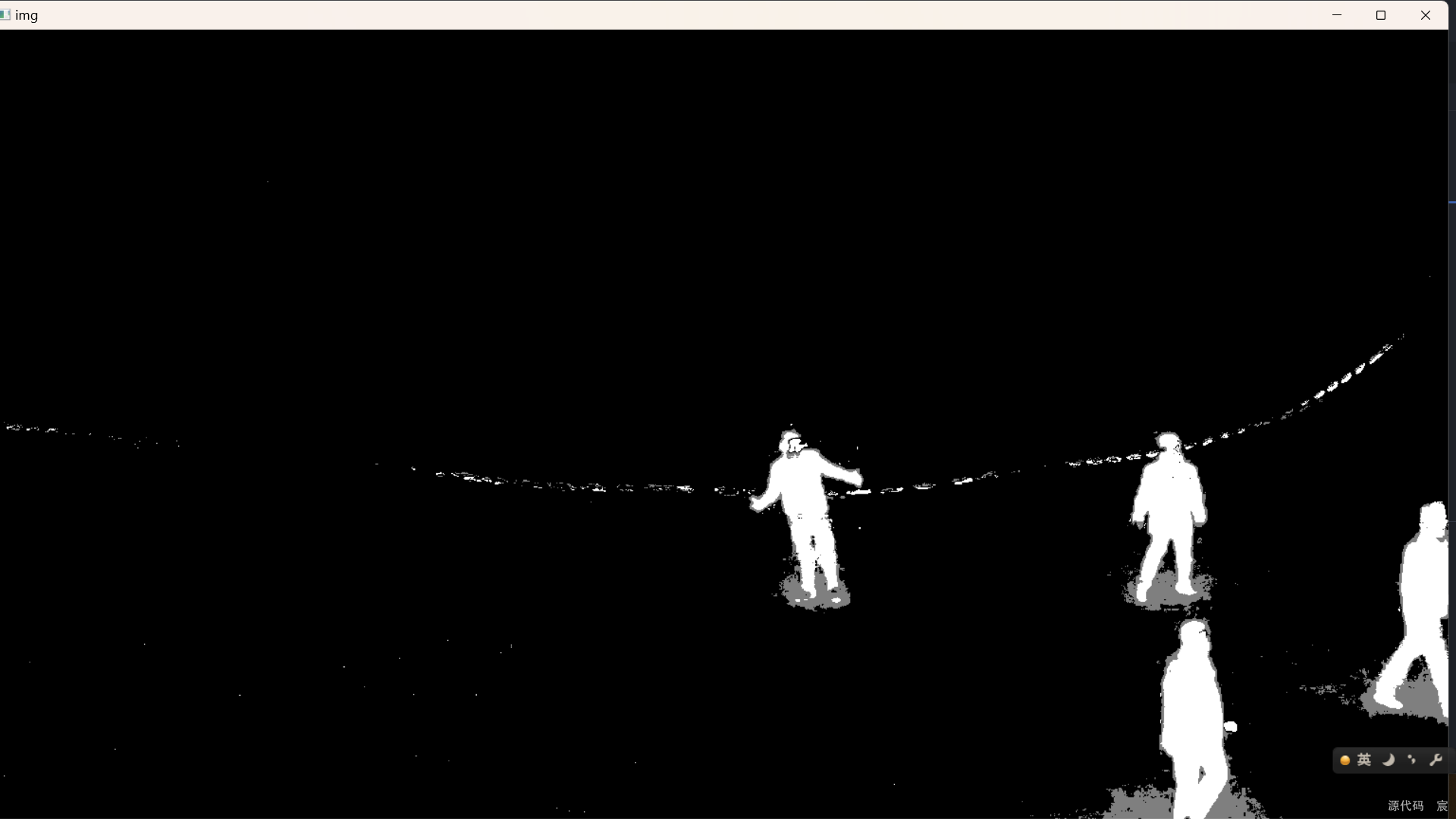

Separating foreground and background in video

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    cap = cv2.VideoCapture('./MyVideo.wmv')

    # cv2.bgsegm is part of the opencv-contrib-python package
    mog = cv2.bgsegm.createBackgroundSubtractorMOG()

    while True:
        ret, frame = cap.read()
        if not ret:
            # End of the video or read failure
            break

        fgmask = mog.apply(frame)
        cv2.imshow('img', fgmask)

        k = cv2.waitKey(40)
        if k & 0xff == 27:
            break

    cap.release()
    cv2.destroyAllWindows()

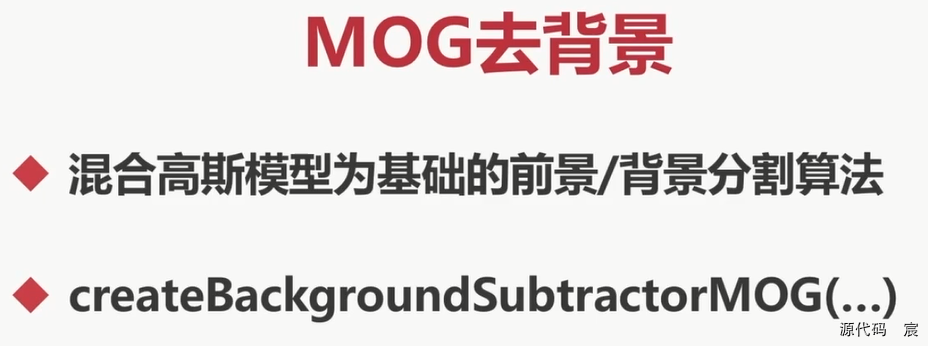

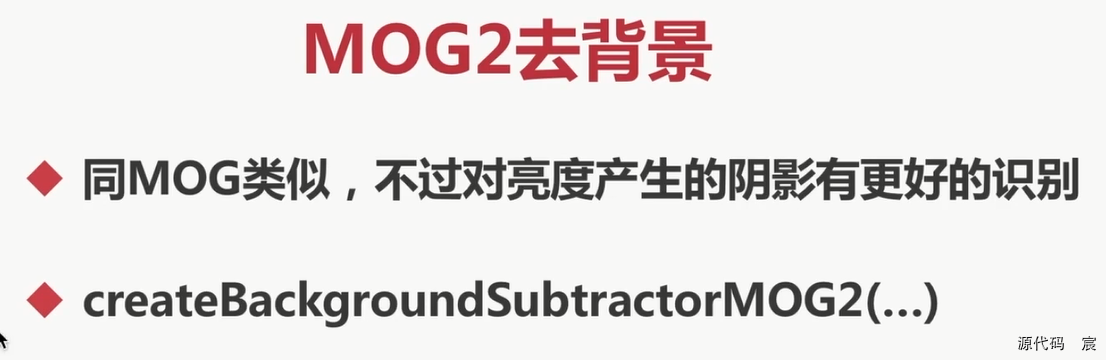

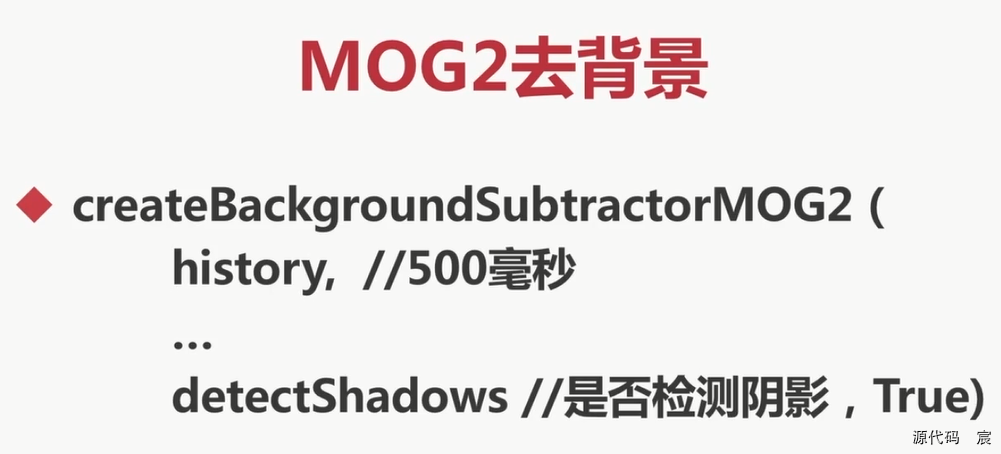

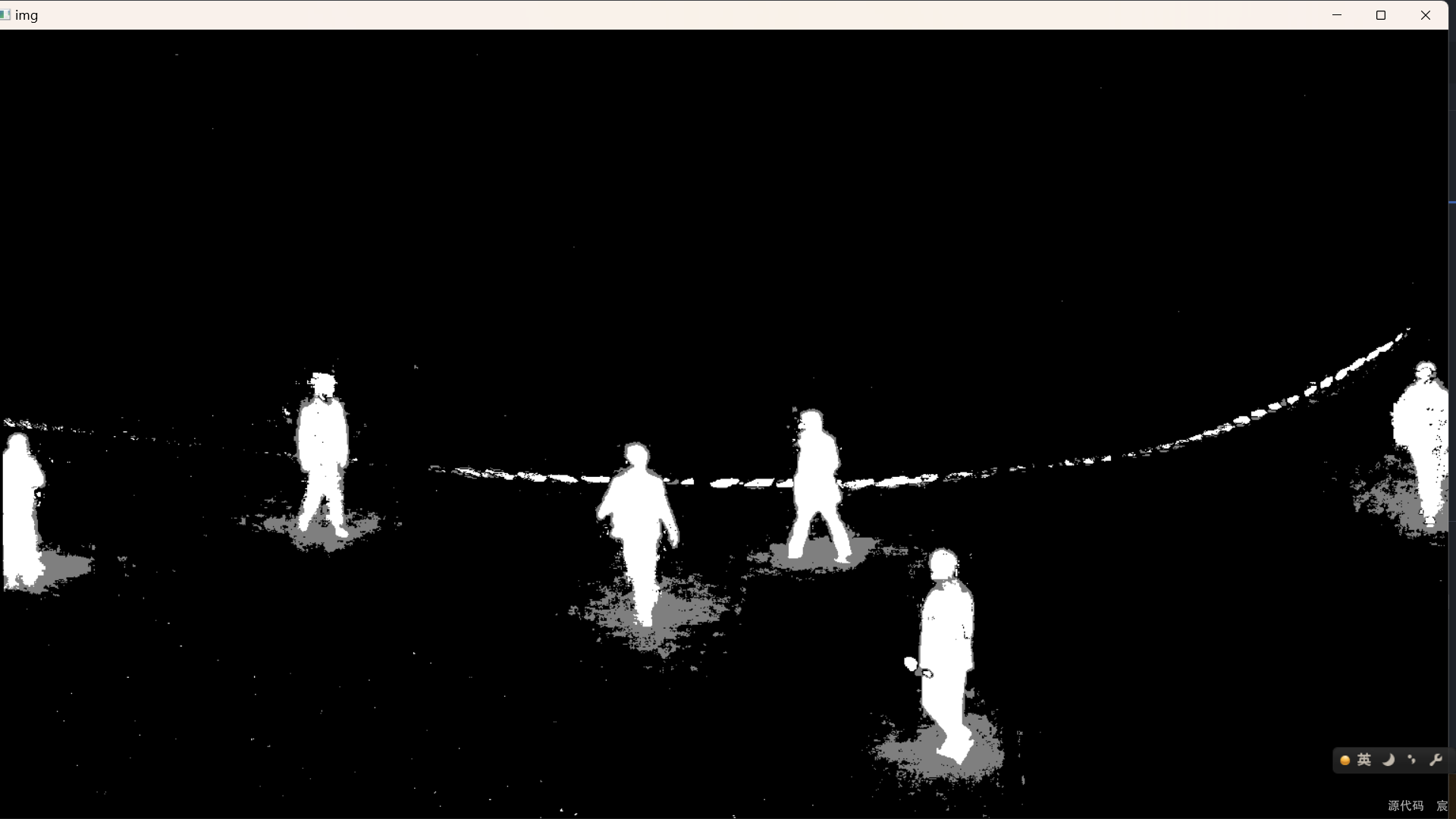

Other methods for separating foreground and background in video

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    cap = cv2.VideoCapture('./MyVideo.wmv')

    # mog = cv2.bgsegm.createBackgroundSubtractorMOG()

    # MOG2
    # Pro: it can also detect shadows
    # Con: it produces a lot of noise
    mog = cv2.createBackgroundSubtractorMOG2()

    while True:
        ret, frame = cap.read()
        if not ret:
            # End of the video or read failure
            break

        fgmask = mog.apply(frame)
        cv2.imshow('img', fgmask)

        k = cv2.waitKey(40)
        if k & 0xff == 27:
            break

    cap.release()
    cv2.destroyAllWindows()

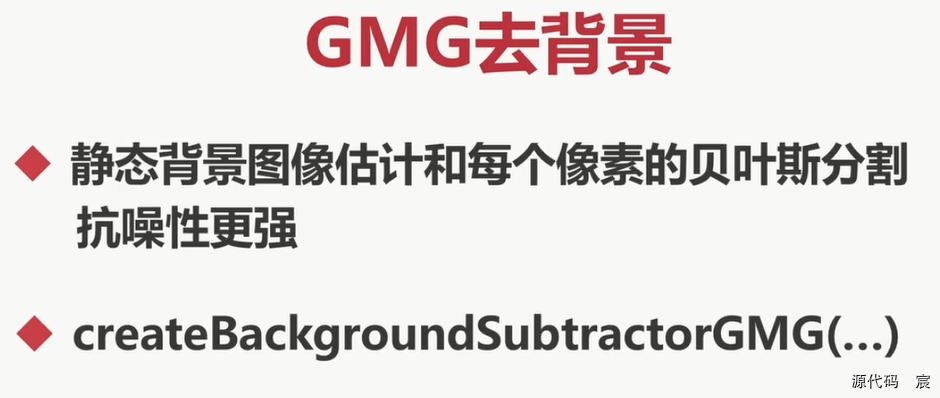

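    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    cv2.namedWindow('img', cv2.WINDOW_NORMAL)
    cap = cv2.VideoCapture('./MyVideo.wmv')

    # mog = cv2.bgsegm.createBackgroundSubtractorMOG()

    # MOG2
    # Pro: it can also detect shadows
    # Con: it produces a lot of noise
    # mog = cv2.createBackgroundSubtractorMOG2()

    # GMG (requires opencv-contrib-python)
    # Pro: it can detect shadows and produces less noise
    # Con: with the default settings nothing is shown for an initial period
    # Fix: adjust the number of initial reference frames
    #      (the initializationFrames argument)
    mog = cv2.bgsegm.createBackgroundSubtractorGMG()

    while True:
        ret, frame = cap.read()
        if not ret:
            # End of the video or read failure
            break

        fgmask = mog.apply(frame)
        cv2.imshow('img', fgmask)

        k = cv2.waitKey(40)
        if k & 0xff == 27:
            break

    cap.release()
    cv2.destroyAllWindows()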

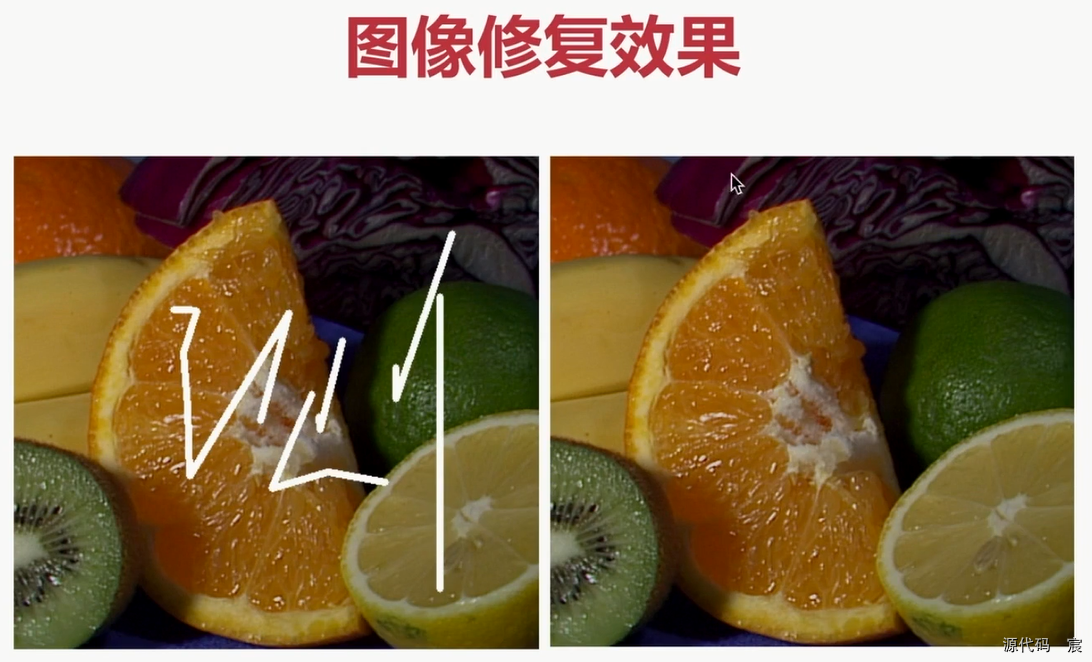

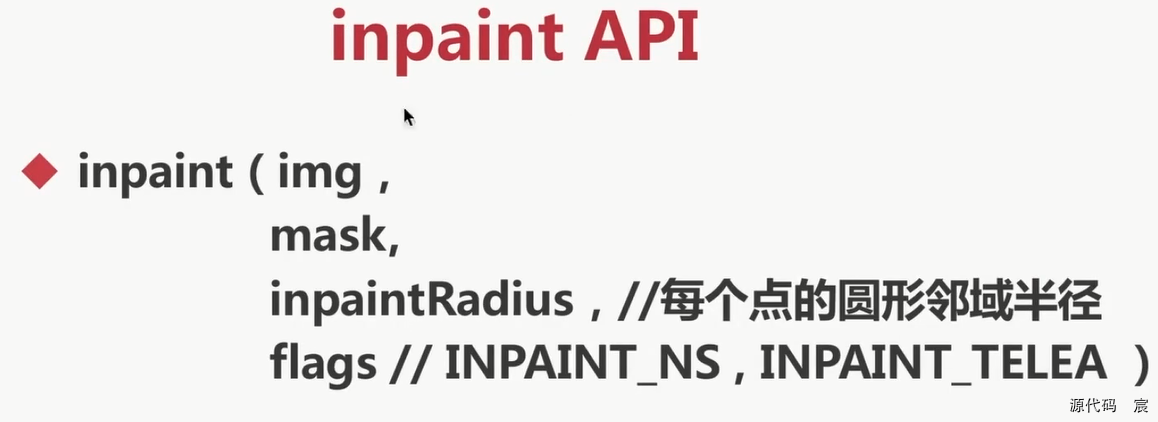

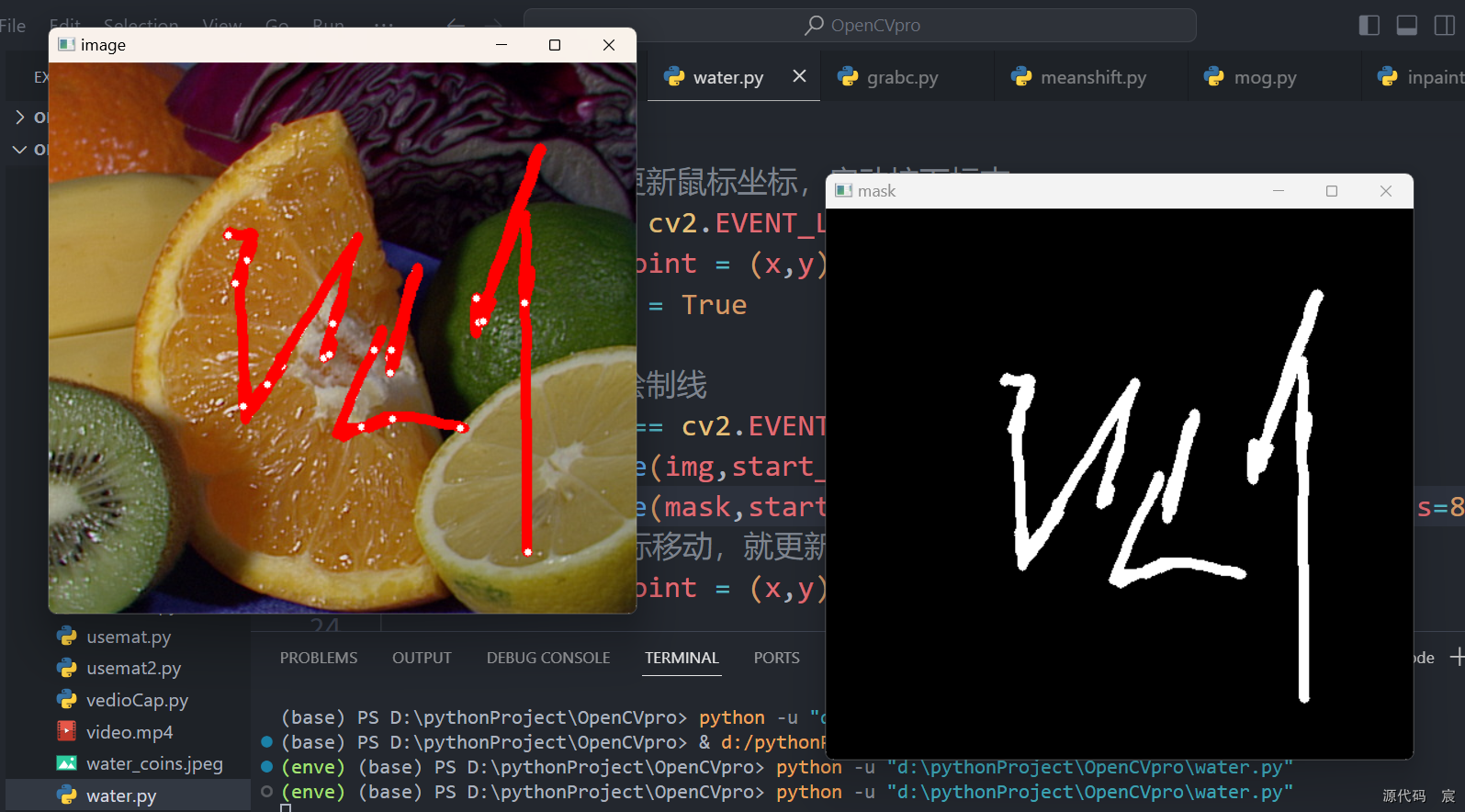

Image inpainting

Generating the mask

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    start_point = (0, 0)  # last mouse position
    lb_down = False       # True while the left mouse button is held down


    def mouse_event(event, x, y, flags, param):
        # Names that are reassigned inside a function must be declared global;
        # objects that are only modified in place (img, mask) need no declaration.
        global start_point, lb_down

        if event == cv2.EVENT_LBUTTONDOWN:
            # Left button pressed: store the position and set the flag
            start_point = (x, y)
            lb_down = True
        elif event == cv2.EVENT_MOUSEMOVE and lb_down:
            # Mouse moved while pressed: draw a line segment
            cv2.line(img, start_point, (x, y), (0, 0, 255), thickness=8)
            cv2.line(mask, start_point, (x, y), (255, 255, 255), thickness=8)
            # Update the position on every move
            start_point = (x, y)
        elif event == cv2.EVENT_LBUTTONUP:
            # Left button released: a click without movement still draws a dot
            cv2.line(img, start_point, (x, y), (255, 255, 255), thickness=5)
            cv2.line(mask, start_point, (x, y), (255, 255, 255), thickness=5)
            lb_down = False


    cv2.namedWindow('image')  # window that receives the mouse input
    img = cv2.imread('./inpaint.png')
    mask = np.zeros(img.shape, np.uint8)  # black mask image
    cv2.setMouseCallback('image', mouse_event)

    while True:
        cv2.imshow('image', img)
        cv2.imshow('mask', mask)
        # Do not pass 0 to waitKey here: it would block until a key is pressed
        if cv2.waitKey(1) & 0xff == ord('q'):
            break

    cv2.imwrite("./mask.png", mask)
    cv2.destroyAllWindows()

    # -*- coding: utf-8 -*-
    import cv2
    import numpy as np

    img = cv2.imread('inpaint.png')
    # Flag 0 loads the mask as a single-channel grayscale image
    mask = cv2.imread('mask.png', 0)

    # Inpaint radius 5, Telea's method
    dst = cv2.inpaint(img, mask, 5, cv2.INPAINT_TELEA)

    cv2.imshow('dst', dst)
    cv2.imshow('img', img)
    if cv2.waitKey(0) & 0xff == 27:
        cv2.destroyAllWindows()

The result is quite poor...

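I will keep updating this series. If you like my articles, please remember to like, follow, and bookmark; every like, follow, and bookmark is motivation for me to keep going! ↖(▔▽▔)↗ Thanks for your support!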
