Scrape the Douban Top 250 Movie Chart with Python in 5 Minutes

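This video demonstrates the following steps:

- Fetch the web pages with requests

- Parse the data with BeautifulSoup

- Write the data out to Excel with pandas

For detailed usage of these three libraries, see my other video courses.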

import requests

from bs4 import BeautifulSoup

import pandas as pd


# 1. Download the HTML of all 10 pages

# Build the list of page offsets: 0, 25, 50, ..., 225
page_indexs = range(0, 250, 25)

[*range(0, 250, 25)]
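To make the pagination concrete, the sketch below shows how each offset turns into a list-page URL. The constant names `BASE_URL`, `PAGE_SIZE`, and `TOTAL` are introduced here only for illustration; the URL pattern is the one used in the download function of this tutorial.

```python
# Illustrative constants (names are assumptions, not from the original code)
BASE_URL = "https://movie.douban.com/top250"
PAGE_SIZE = 25   # movies shown per list page
TOTAL = 250      # movies in the whole chart

# One offset per page: 0, 25, ..., 225
offsets = list(range(0, TOTAL, PAGE_SIZE))

# Each offset becomes the `start` query parameter of a list-page URL
urls = [f"{BASE_URL}?start={offset}&filter=" for offset in offsets]

for url in urls:
    print(url)
```

No request is sent here; the block only builds the ten URLs that the download step visits.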

list(range(0, 250, 25))

def download_all_htmls():
    """Download the HTML of every list page for later analysis."""
    htmls = []
    for idx in page_indexs:
        url = f"https://movie.douban.com/top250?start={idx}&filter="
        print("craw html:", url)
        # Send a browser-like User-Agent header with each request
        r = requests.get(
            url,
            headers={"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)"},
        )
        if r.status_code != 200:
            raise Exception(f"error: status {r.status_code} for {url}")
        htmls.append(r.text)
    return htmls

htmls = download_all_htmls()

craw html: https://movie.douban.com/top250?start=0&filter=

craw html: https://movie.douban.com/top250?start=25&filter=

craw html: https://movie.douban.com/top250?start=50&filter=

craw html: https://movie.douban.com/top250?start=75&filter=

craw html: https://movie.douban.com/top250?start=100&filter=

craw html: https://movie.douban.com/top250?start=125&filter=

craw html: https://movie.douban.com/top250?start=150&filter=

craw html: https://movie.douban.com/top250?start=175&filter=

craw html: https://movie.douban.com/top250?start=200&filter=

craw html: https://movie.douban.com/top250?start=225&filter=

# 2. Parse the HTML to extract the data

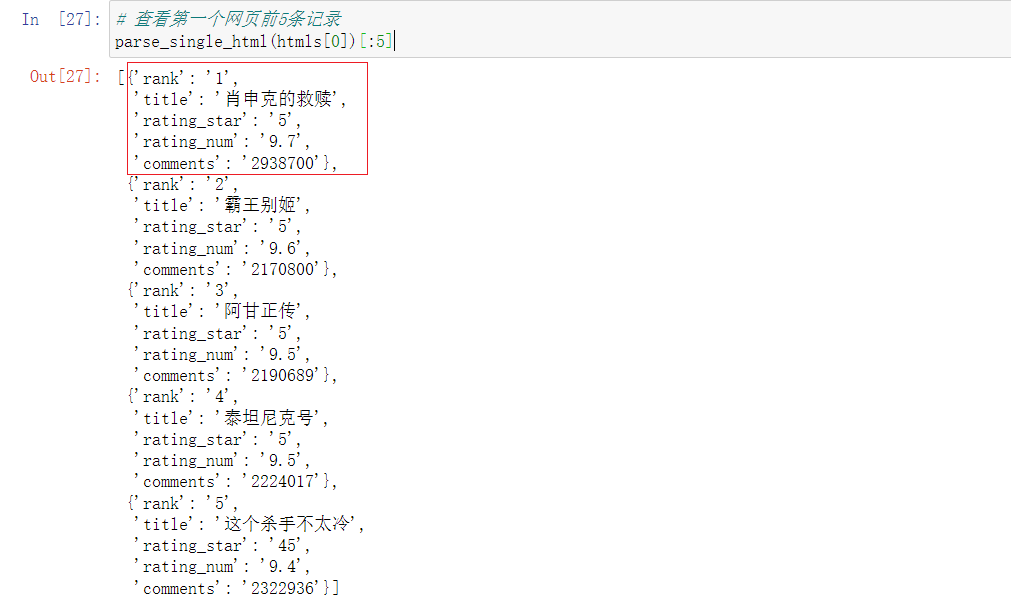
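Before running the parser on real pages, it can help to try the same `find` chain on a small fragment. The HTML below is a simplified, hypothetical stand-in for one list item (the real page contains more markup), and `parse_item` is an illustrative helper, not part of the original code.

```python
from bs4 import BeautifulSoup

# Simplified, made-up fragment that mimics the nesting of one list entry
SAMPLE_HTML = """
<div class="article">
  <ol class="grid_view">
    <li>
      <div class="item">
        <div class="pic"><em>1</em></div>
        <div class="info">
          <div class="hd"><span class="title">Example Movie</span></div>
          <div class="bd">
            <div class="star">
              <span class="rating5-t"></span>
              <span class="rating_num">9.7</span>
              <span></span>
              <span>12345人评价</span>
            </div>
          </div>
        </div>
      </div>
    </li>
  </ol>
</div>
"""


def parse_item(html):
    """Extract the fields of the first movie entry in the fragment."""
    soup = BeautifulSoup(html, "html.parser")
    item = (
        soup.find("div", class_="article")
        .find("ol", class_="grid_view")
        .find("div", class_="item")
    )
    stars = item.find("div", class_="star").find_all("span")
    return {
        "rank": item.find("div", class_="pic").find("em").get_text(),
        "title": item.find("span", class_="title").get_text(),
        # The first span carries the star rating in its CSS class name
        "rating_star": stars[0]["class"][0].replace("rating", "").replace("-t", ""),
        "rating_num": stars[1].get_text(),
        # Strip the on-page suffix after the number of ratings
        "comments": stars[3].get_text().replace("人评价", ""),
    }


record = parse_item(SAMPLE_HTML)
print(record)
```

Working on a fixed fragment like this makes it easier to see which `find` call fails if the site's markup differs from what the parser expects.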

def parse_single_html(html):
    """
    Parse a single HTML page and return its data.
    @return list of dicts with the keys "rank", "title", "rating_star", "rating_num", "comments"
    """
    soup = BeautifulSoup(html, 'html.parser')
    article_items = (
        soup.find("div", class_="article")
        .find("ol", class_="grid_view")
        .find_all("div", class_="item")
    )
    datas = []
    for article_item in article_items:
        rank = article_item.find("div", class_="pic").find("em").get_text()
        info = article_item.find("div", class_="info")
        title = info.find("div", class_="hd").find("span", class_="title").get_text()
        stars = (
            info.find("div", class_="bd")
            .find("div", class_="star")
            .find_all("span")
        )
        rating_star = stars[0]["class"][0]
        rating_num = stars[1].get_text()
        comments = stars[3].get_text()
        datas.append({
            "rank": rank,
            "title": title,
            "rating_star": rating_star.replace("rating", "").replace("-t", ""),
            "rating_num": rating_num,
            # "人评价" is the on-page suffix after the number of ratings
            "comments": comments.replace("人评价", ""),
        })
    return datas

# Show the first 5 records of the first page
parse_single_html(htmls[0])[:5]

# 3. Parse all of the HTML pages

all_datas = []

for html in htmls:
    # extend() appends every item of another sequence to the end of the list in one call.
    # It returns None and modifies the existing list in place.
    all_datas.extend(parse_single_html(html))


all_datas

len(all_datas)

# 4. Save the results to Excel

df = pd.DataFrame(all_datas)

df.to_excel("豆瓣电影TOP250.xlsx")
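Every value collected by the parser is a string, so the Excel columns are written as text. If numeric columns are preferred, one possible approach is to convert each record first. `to_typed_record` and `save_records` are hypothetical helpers, not part of the original code; `index=False` leaves the DataFrame index out of the sheet, and writing `.xlsx` files with pandas assumes the openpyxl package is installed.

```python
def to_typed_record(record):
    """Convert the string fields of one parsed record to numbers."""
    return {
        "rank": int(record["rank"]),
        "title": record["title"],
        "rating_star": int(record["rating_star"]),
        "rating_num": float(record["rating_num"]),
        "comments": int(record["comments"]),
    }


def save_records(records, path):
    """Write the typed records to an Excel file and return the DataFrame."""
    import pandas as pd  # imported here so the conversion above works without pandas

    df = pd.DataFrame([to_typed_record(r) for r in records])
    df.to_excel(path, index=False)
    return df


# Made-up record in the same shape that parse_single_html produces
sample = {
    "rank": "1",
    "title": "Example Movie",
    "rating_star": "5",
    "rating_num": "9.7",
    "comments": "12345",
}
typed = to_typed_record(sample)
print(typed)
```

With the scraped data this would be called as `save_records(all_datas, "豆瓣电影TOP250.xlsx")`.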

The extend method:

aList = [123, 'xyz', 'zara', 'abc', 123]

bList = [2009, 'manni', 2009]

aList.extend(bList)

aList
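For contrast, here is a short sketch of how `extend` differs from `append`, using made-up lists. `append` adds its argument as a single element, while `extend` adds each item of the argument, which is why the parsing loop uses `extend` to get one flat list of records.

```python
# Two made-up "pages" of parsed records
pages = [["a", "b"], ["c", "d"]]

# append() adds each page as one nested element
appended = []
for page in pages:
    appended.append(page)

# extend() adds the items of each page individually
extended = []
for page in pages:
    extended.extend(page)

print(appended)  # [['a', 'b'], ['c', 'd']]
print(extended)  # ['a', 'b', 'c', 'd']
```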
