- Create the feapderSpider project: feapder create -p feapderSpider (skip this step if the project already exists)

- Enter the project's spiders directory: cd .\feapderSpider\spiders

- Create the spider: feapder create -s airSpiderDouban, then choose the AirSpider template. Steps 1 and 2 can be skipped and the spider file created directly.

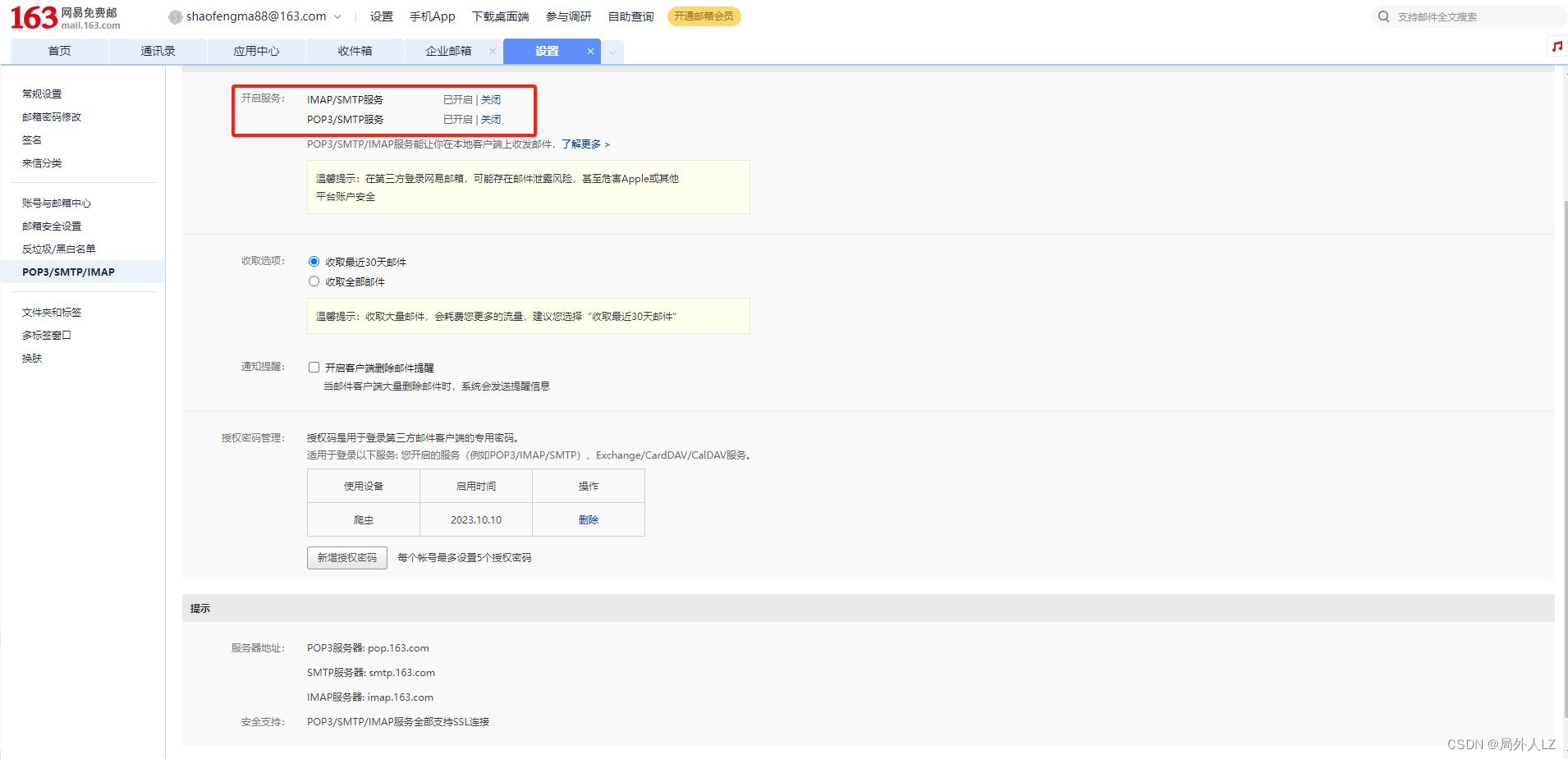

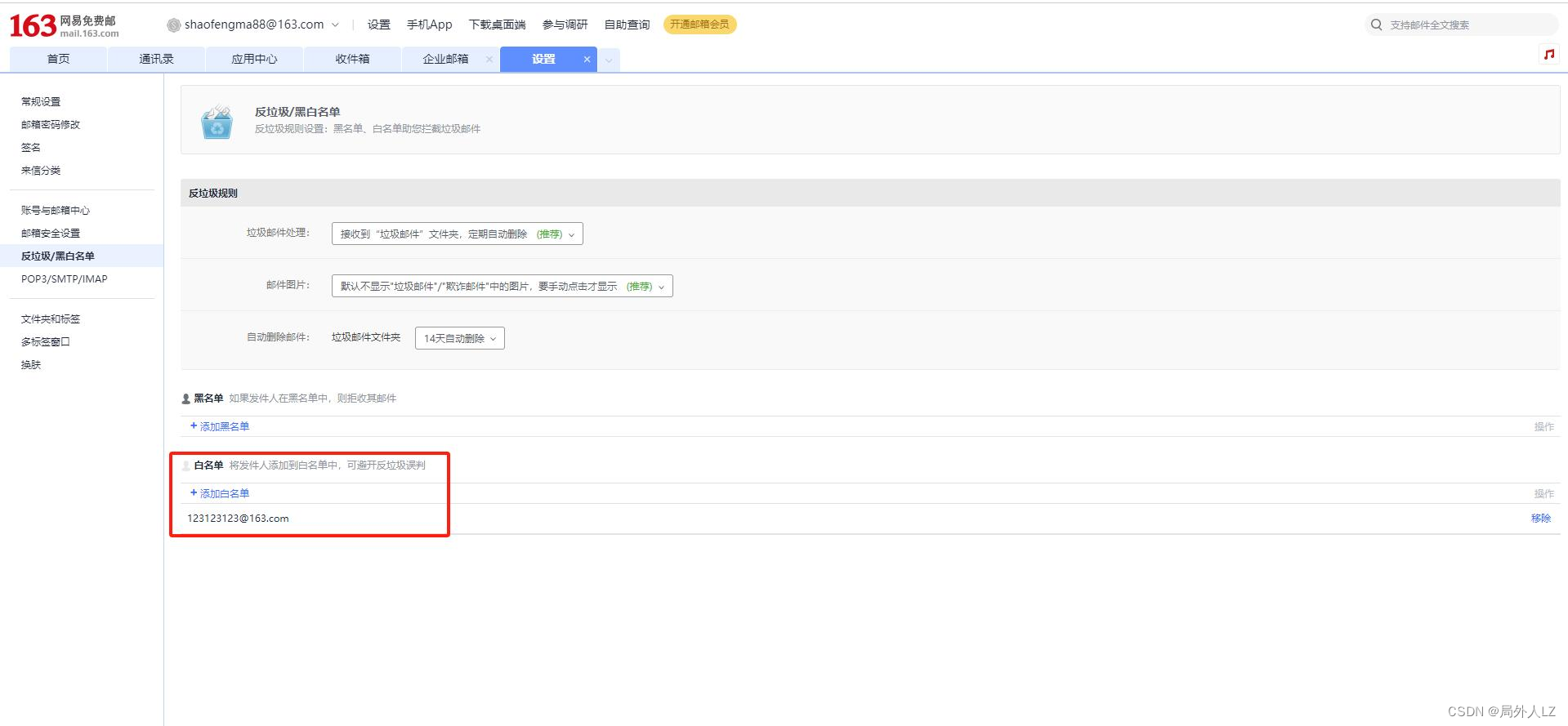

- Configure email alerts: this example sends alerts through a 163 mailbox; see https://feapder.com/#/source_code/%E6%8A%A5%E8%AD%A6%E5%8F%8A%E7%9B%91%E6%8E%A7
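For orientation, an email alert over SMTP boils down to roughly the following. This is a minimal standard-library sketch, not feapder's own alerting code: the helper names `build_alert` and `send_alert`, the placeholder addresses, and SSL port 465 are all assumptions made for illustration.

```python
import smtplib
from email.message import EmailMessage


def build_alert(sender, receivers, title, content):
    """Build a plain-text alert message (hypothetical helper)."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = ", ".join(receivers)
    msg["Subject"] = title
    msg.set_content(content)
    return msg


def send_alert(msg, password, smtp_server="smtp.163.com", port=465):
    """Send the message over SMTP with SSL; port 465 is an assumption."""
    with smtplib.SMTP_SSL(smtp_server, port) as server:
        # For a 163 mailbox the password is the authorization code
        server.login(msg["From"], password)
        server.send_message(msg)


if __name__ == "__main__":
    # Only builds the message; nothing is sent here
    alert = build_alert(
        "sender@example.com", ["receiver@example.com"], "python", "Crawl finished"
    )
    print(alert["Subject"], "->", alert["To"])
```

Keeping message construction separate from sending makes the message easy to inspect without a network connection.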

- Enable the MySQL configuration in setting.py. If the project has no setting.py file, run feapder create --setting to generate one.

      # MYSQL
      MYSQL_IP = "localhost"
      MYSQL_PORT = 3306
      MYSQL_DB = "video"
      MYSQL_USER_NAME = "root"
      MYSQL_USER_PASS = "root"

      # REDIS
      # ip:port; multiple addresses can be given as a list or comma-separated,
      # e.g. ip1:port1,ip2:port2 or ["ip1:port1", "ip2:port2"]
      REDISDB_IP_PORTS = "localhost:6379"
      REDISDB_USER_PASS = ""
      REDISDB_DB = 0
      # Extra parameters passed when connecting to redis, e.g. ssl=True
      REDISDB_KWARGS = dict()
      # For redis sentinel mode
      REDISDB_SERVICE_NAME = ""

      # Deduplication
      ITEM_FILTER_ENABLE = True  # item deduplication
      ITEM_FILTER_SETTING = dict(
          # permanent (BloomFilter) = 1, in-memory (MemoryFilter) = 2,
          # temporary (ExpireFilter) = 3, lightweight (LiteFilter) = 4
          filter_type=1,
          name="douban",
      )

      # Email alerts
      EMAIL_SENDER = "123123123@163.com"  # sender
      EMAIL_PASSWORD = "EYNXMBWJKMLZFTKQ"  # authorization code
      EMAIL_RECEIVER = ["123123123@163.com"]  # recipients; a list, so several can be given
      EMAIL_SMTPSERVER = "smtp.163.com"  # mail server, defaults to the 163 mailbox

- Create the item: feapder create -i douban, then choose item. The table must first be created in the database:

      CREATE TABLE IF NOT EXISTS douban(
          id INT AUTO_INCREMENT,
          title VARCHAR(255),
          rating FLOAT,
          quote VARCHAR(255),
          intro TEXT,
          PRIMARY KEY(id)
      )

- Modify the douban_item.py file:

      # -*- coding: utf-8 -*-
      """
      Created on 2023-10-08 16:17:51
      ---------
      @summary:
      ---------
      @author: Administrator
      """

      from feapder import Item


      class DoubanItem(Item):
          """
          This class was generated by feapder
          command: feapder create -i douban
          """

          __table_name__ = "douban"
          # Deduplication keys: the final fingerprint is the md5 computed
          # jointly from the title, quote and rating values
          __unique_key__ = ["title", "quote", "rating"]

          def __init__(self, *args, **kwargs):
              super().__init__(**kwargs)
              # self.id = None
              self.intro = None
              self.quote = None
              self.rating = None
              self.title = None

- Spider file: air_spider_douban.py

      # -*- coding: utf-8 -*-
      """
      Created on 2023-10-06 15:36:09
      ---------
      @summary:
      ---------
      @author: Administrator
      """

      import feapder
      from items.douban_item import DoubanItem
      from feapder.network.user_agent import get as get_ua
      from requests.exceptions import ConnectTimeout, ProxyError
      from feapder.utils.email_sender import EmailSender
      import feapder.setting as setting


      class AirSpiderDouban(feapder.AirSpider):
          def __init__(self, thread_count=None):
              super().__init__(thread_count)
              self.request_url = 'https://movie.douban.com/top250'

          def start_requests(self):
              yield feapder.Request(self.request_url)

          def download_midware(self, request):
              # Use a random User-Agent for every request
              request.headers = {'User-Agent': get_ua()}
              return request

          def parse(self, request, response):
              video_list = response.xpath('//ol[@class="grid_view"]/li')
              for li in video_list:
                  item = DoubanItem()
                  item['title'] = li.xpath('.//div[@class="hd"]/a/span[1]/text()').extract_first()
                  item['rating'] = li.xpath('.//div[@class="bd"]//span[@class="rating_num"]/text()').extract_first()
                  item['quote'] = li.xpath('.//div[@class="bd"]//p[@class="quote"]/span/text()').extract_first()
                  detail_url = li.xpath('.//div[@class="hd"]/a/@href').extract_first()
                  if detail_url:
                      # Pass the partially filled item on to the detail-page callback
                      yield feapder.Request(detail_url, callback=self.get_detail_info, item=item)

              # Fetch the next page
              next_page_url = response.xpath('//div[@class="paginator"]//link[@rel="next"]/@href').extract_first()
              if next_page_url:
                  yield feapder.Request(next_page_url, callback=self.parse)

          def get_detail_info(self, request, response):
              item = request.item
              detail = response.xpath('//span[@class="all hidden"]/text()').extract_first() or ''
              if not detail:
                  detail = response.xpath('//div[@id="link-report-intra"]/span[1]/text()').extract_first() or ''
              item['intro'] = detail.strip()
              yield item

          def exception_request(self, request, response, e):
              # Drop the current proxy on connection timeouts and proxy errors
              prox_err = [ConnectTimeout, ProxyError]
              if type(e) in prox_err:
                  request.del_proxy()

          def end_callback(self):
              # Send a notification email when the spider finishes
              with EmailSender(setting.EMAIL_SENDER, setting.EMAIL_PASSWORD) as email_sender:
                  email_sender.send(setting.EMAIL_RECEIVER, title='python', content="Crawl finished")


      if __name__ == "__main__":
          AirSpiderDouban(thread_count=5).start()

- A project created with feapder create -p feapderSpider contains a main file. Besides running a spider file on its own, spiders can be started from main, which is typically used to run several spiders:

      from feapder import ArgumentParser
      from spiders import *


      def crawl_air_spider_douban():
          """
          AirSpider crawler
          """
          spider = air_spider_douban.AirSpiderDouban()
          spider.start()


      if __name__ == "__main__":
          parser = ArgumentParser(description="Spider practice")
          parser.add_argument(
              "--crawl_air_spider_douban",
              action="store_true",
              help="Douban AirSpider",
              function=crawl_air_spider_douban,
          )
          parser.run("crawl_air_spider_douban")
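The flag-to-function dispatch in the main file above can be pictured with plain argparse. This is a sketch of the general pattern only, not feapder's ArgumentParser: the `SPIDERS` table, the `build_parser` and `run` helpers and the `crawl_other_spider` entry are hypothetical, and the crawl functions return a label instead of starting real spiders.

```python
import argparse


def crawl_air_spider_douban():
    # Placeholder: the real function would create and start AirSpiderDouban
    return "air_spider_douban"


def crawl_other_spider():
    # Hypothetical second spider, to show why a dispatcher is useful
    return "other_spider"


# Map each command-line flag to the function it should trigger
SPIDERS = {
    "crawl_air_spider_douban": crawl_air_spider_douban,
    "crawl_other_spider": crawl_other_spider,
}


def build_parser():
    parser = argparse.ArgumentParser(description="Spider practice")
    for name in SPIDERS:
        parser.add_argument(f"--{name}", action="store_true", help=f"run {name}")
    return parser


def run(argv):
    """Run every spider whose flag is present and return what was run."""
    args = build_parser().parse_args(argv)
    return [func() for name, func in SPIDERS.items() if getattr(args, name)]


if __name__ == "__main__":
    print(run(["--crawl_air_spider_douban"]))
```

With one entry point per spider, adding a spider to the project only means adding one function and one row to the table.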

Python crawler: a feapder.AirSpider lightweight crawler example (Douban)
