libraries.io link: https://libraries.io/pypi/langchain-decorators

Let's try its demo on Colab.

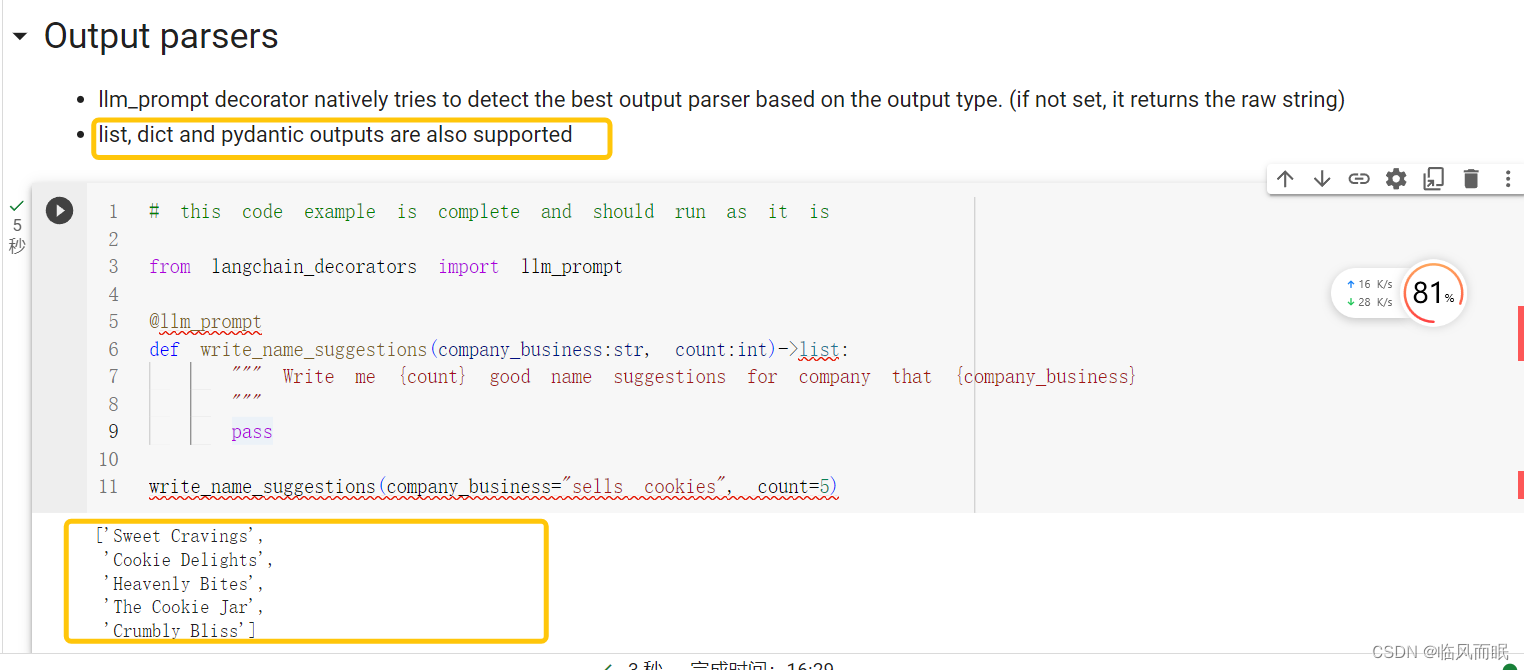

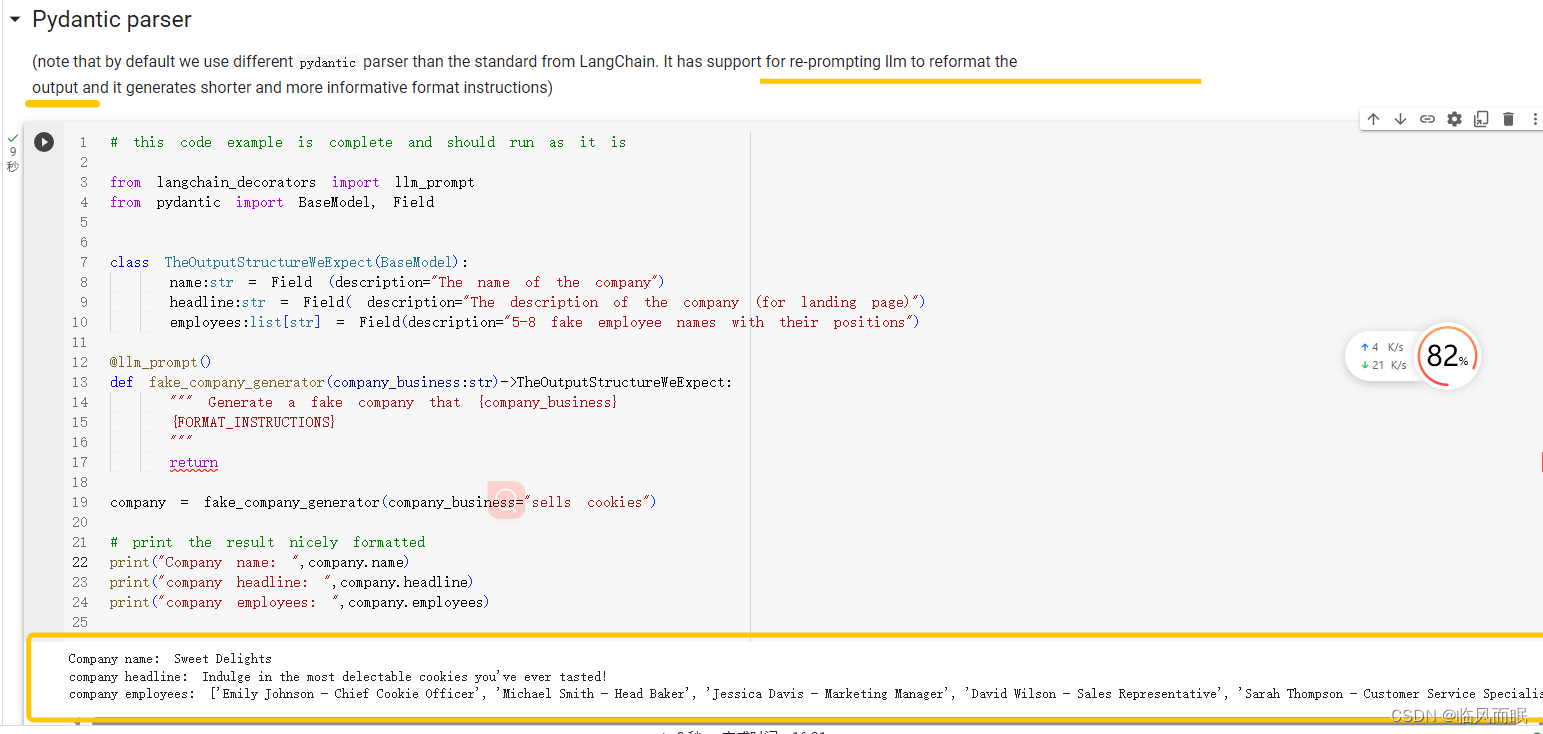

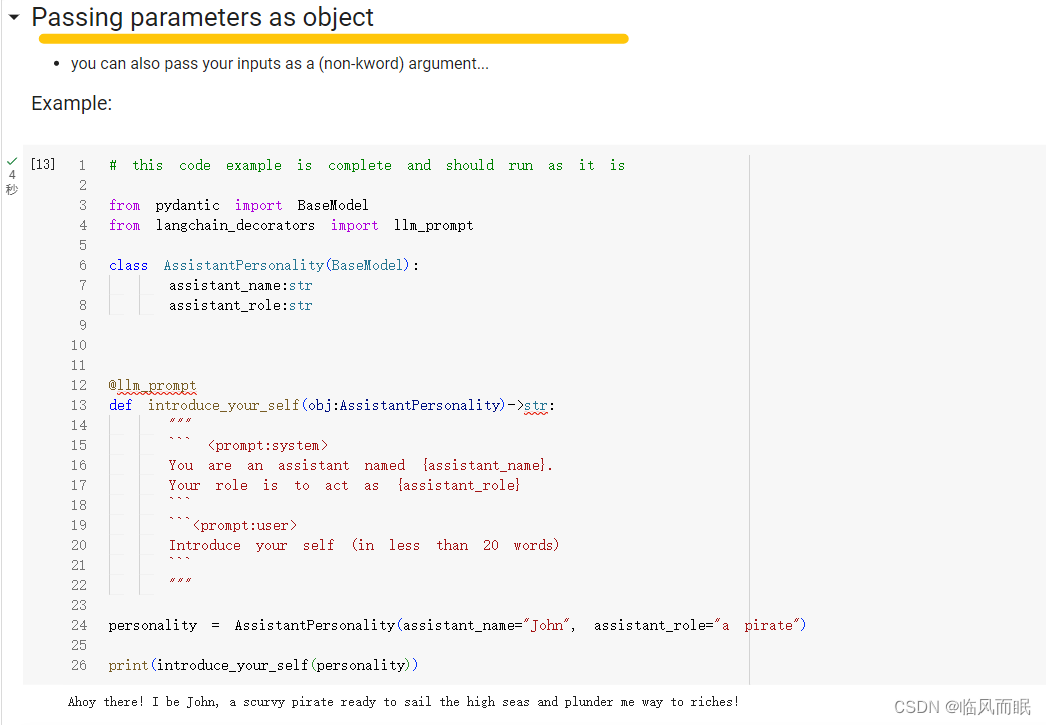

This really does feel like a handy library.

- Remembering how painful it was to control the output format purely by tweaking prompts, this is far more effective.
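To make the idea concrete, here is a minimal standard-library sketch of the general pattern: the docstring acts as the prompt template and the return annotation decides how the raw text is parsed. This is not the langchain-decorators implementation; `docstring_prompt`, `fake_llm`, and `suggest_names` are hypothetical names invented for illustration, and the "model" is a stub that returns a fixed string.

```python
import functools
import inspect
from textwrap import dedent
from typing import Callable, get_type_hints


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; always returns a bullet list."""
    return "- alpha\n- beta\n- gamma"


def docstring_prompt(llm: Callable[[str], str]):
    """Toy decorator: the docstring is the template, the return type picks the parser."""
    def decorator(func):
        template = dedent(func.__doc__ or "").strip()
        return_type = get_type_hints(func).get("return", str)
        signature = inspect.signature(func)

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            # Bind the call arguments (including defaults) to the template placeholders.
            bound = signature.bind(*args, **kwargs)
            bound.apply_defaults()
            raw = llm(template.format(**bound.arguments))
            if return_type is list:
                # Turn "- item" lines into a Python list.
                return [line.lstrip("- ").strip() for line in raw.splitlines() if line.strip()]
            return raw

        return wrapper
    return decorator


@docstring_prompt(fake_llm)
def suggest_names(topic: str, count: int = 3) -> list:
    """Suggest {count} project names about {topic}, one per line as a bullet list."""
    return


names = suggest_names("prompt engineering")
print(names)
```

The caller only ever sees a typed Python value, so the formatting rules live in one place instead of being repeated in every hand-written prompt.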

cool~

The ReAct-style multilingual agent example at the bottom is fun, so let's take a look!

````python
import json
from textwrap import dedent
from typing import List

from langchain.agents import load_tools
from langchain.tools.base import BaseTool
from langchain_decorators import GlobalSettings, PromptTypes, llm_prompt
from langchain_decorators.output_parsers import JsonOutputParser

tools = load_tools(["llm-math"], llm=GlobalSettings.get_current_settings().default_llm)


# you may, or may not use pydantic as your base class... totally up to you
class MultilingualAgent:

    def __init__(self, tools: List[BaseTool], result_language: str = None) -> None:
        self.tools = tools
        # we can refer to our fields in all our prompts
        self.result_language = result_language
        self.agent_scratchpad = ""  # we initialize our scratchpad
        self.feedback = ""  # we initialize our feedback if we get some error

        # other settings
        self.iterations = 10
        self.agent_format_instructions = dedent("""\
            # Reasoning
            ... write your reasoning here ...

            # Tool
            ```json
            {{
                "tool": name of the tool to use,
                "tool_input": the input for the tool
            }}
            ```

            # Observation
            output from the tool

            ... repeat this # Reasoning, # Tool, # Observation sequence multiple times until you know the final answer, when you write:

            # Final answer
            ... write the final answer
            """)

    @property
    def tools_description(self) -> str:  # we can refer to properties in our prompts too
        return "\n".join([f" - {tool.name}: {tool.description}" for tool in self.tools])

    # we defined prompt type here, which will make
    @llm_prompt(prompt_type=PromptTypes.AGENT_REASONING, output_parser="markdown", stop_tokens=["Observation"], verbose=True)
    def reason(self) -> dict:
        """
        The system prompt:
        ```<prompt:system>
        You are an assistant that uses reasoning and tools to help user. You use tools for the task the tool is designed to.
        Before answering the question and/or using the tool, you should write down the explanation.

        Here is the list of tools available:
        {tools_description}

        Use this format:
        {agent_format_instructions}{? in {result_language}?} here ...
        {?Make sure to write the final answer in {result_language}!?}
        ```
        User question:
        ```<prompt:user>
        {question}
        ```
        Scratchpad:
        ```<prompt:assistant>
        {agent_scratchpad}
        ```
        ```<prompt:user>
        {feedback}
        ```
        """
        return

    def act(self, tool_name: str, tool_input: str) -> None:
        # a default of None avoids StopIteration when no tool matches
        tool = next((tool for tool in self.tools if tool.name.lower() == tool_name.lower()), None)
        if tool is None:
            self.feedback = f"Tool {tool_name} is not available. Available tools are: {self.tools_description}"
            return
        try:
            result = tool.run(tool_input)
        except Exception as e:
            # feedback starts as "", so test truthiness rather than `is not None`
            if self.feedback:
                # we've already experienced an error, so we are not going to try forever... let's raise this one
                raise
            self.feedback = f"Tool {tool_name} failed with error: {e}.\nLet's fix it and try again."
            return  # no result to record in the scratchpad
        tool_instructions = json.dumps({"tool": tool.name, "tool_input": tool_input})
        self.agent_scratchpad += f"# Tool\n```json\n{tool_instructions}\n```\n# Observation\n\nResult from tool {tool_name}:\n\t{result}\n"

    def run(self, question):
        for i in range(self.iterations):
            reasoning = self.reason(question=question)
            if reasoning.get("Final answer") is not None:
                return reasoning.get("Final answer")
            tool_info = reasoning.get("Tool")
            tool_name, tool_input = (None, None)
            if tool_info:
                tool_info_parsed = JsonOutputParser().parse(tool_info)
                tool_name = tool_info_parsed.get("tool")
                tool_input = tool_info_parsed.get("tool_input")
            if tool_name is None or tool_input is None:
                self.feedback = "Your response was not in the expected format. Please make sure to respond in the correct format:\n" + self.agent_format_instructions
                continue
            self.act(tool_name, tool_input)
        raise Exception(f"Failed to answer the question after {self.iterations} iterations. Last result: {reasoning}")


agent = MultilingualAgent(tools=tools, result_language="German")
result = agent.run("What is the surface of a sphere with radius with diameter of 100km?")
print("\n\nHere is the agent's answer:", result)
````
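The `run` loop above reads the model's reply as a dict with keys such as `"Tool"` and `"Final answer"`, then parses the JSON inside the `Tool` section. The following standard-library sketch shows roughly what that two-step parsing could look like. It is only an approximation inferred from how `run` uses the result, not the library's actual markdown parser; `parse_markdown_sections`, `extract_json_block`, and the sample reply (including the tool name `Calculator`) are assumptions made for illustration.

```python
import json
import re

FENCE = "`" * 3  # three backticks, built this way to keep the example easy to embed


def parse_markdown_sections(text: str) -> dict:
    """Split '# Heading' blocks into a {heading: body} dict (illustrative only)."""
    sections = {}
    current = None
    for line in text.splitlines():
        match = re.match(r"^#\s+(.*\S)\s*$", line)
        if match:
            current = match.group(1)
            sections[current] = []
        elif current is not None:
            sections[current].append(line)
    return {name: "\n".join(body).strip() for name, body in sections.items()}


def extract_json_block(section: str) -> dict:
    """Parse the JSON inside a fenced block, or the whole section if there is no fence."""
    pattern = re.escape(FENCE) + r"(?:json)?\s*(.*?)" + re.escape(FENCE)
    match = re.search(pattern, section, re.DOTALL)
    return json.loads(match.group(1) if match else section)


# A made-up reply in the format requested by agent_format_instructions.
response = "\n".join([
    "# Reasoning",
    "The surface of a sphere is 4 * pi * r^2, with r = 50 km.",
    "# Tool",
    FENCE + "json",
    '{"tool": "Calculator", "tool_input": "4 * pi * 50**2"}',
    FENCE,
])

sections = parse_markdown_sections(response)
tool_call = extract_json_block(sections["Tool"])
print(tool_call["tool"], "|", tool_call["tool_input"])
```

Because generation stops at `Observation`, a reply like this one ends right after the tool request, and the agent appends the real tool output to the scratchpad itself.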

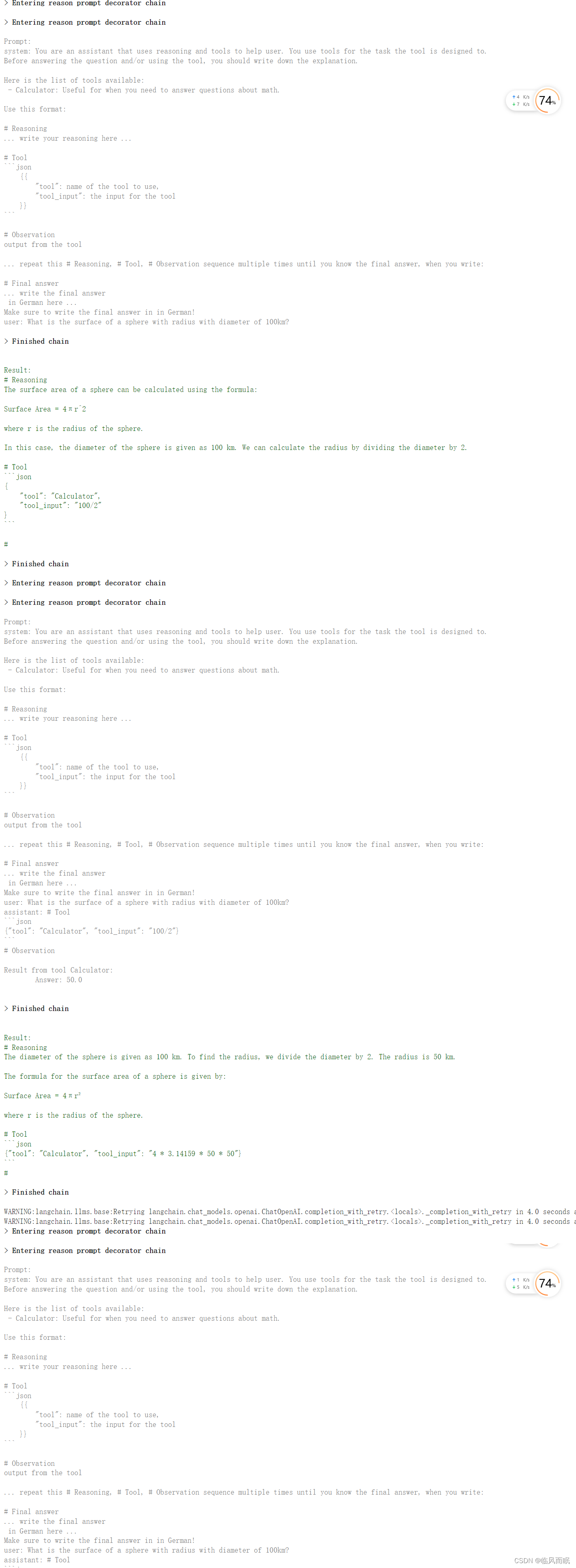

Below is the output.

This package really does save a lot of effort.

Learning the LangChain-Decorators package
